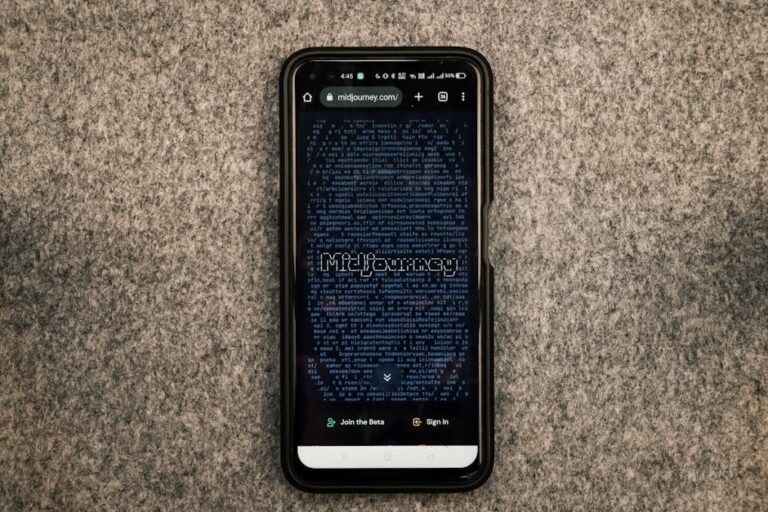

The world is overwhelmingly visual. From navigating city streets and reading product labels to recognizing a friend’s face across a room, daily life is built upon a constant stream of visual information. For the millions of people in the blind and low vision community, accessing this information has traditionally required a combination of assistive tools, human assistance, and learned techniques. However, a profound shift is underway, driven by the convergence of artificial intelligence and the ubiquitous smartphone. Recent developments in AI-powered accessibility tools are not just incremental improvements; they represent a paradigm shift, turning the camera in your pocket into a seeing companion that can interpret and narrate the visual world in real time. This technological leap is democratizing independence, breaking down long-standing barriers, and fostering a more inclusive society. As these AI tools become available on more mobile operating systems, their reach will continue to grow, opening a new era of empowerment for users worldwide.

The AI-Powered Eye: Deconstructing Modern Visual Accessibility Apps

At the heart of this revolution are mobile applications that leverage a sophisticated suite of AI technologies to perceive and understand the world through a smartphone’s camera. These are not simple camera apps; they are complex computational systems designed to function as an extension of the user’s senses. Understanding their inner workings reveals the sheer power of modern AI and its potential for real-world assistance.

The Core Technology Stack

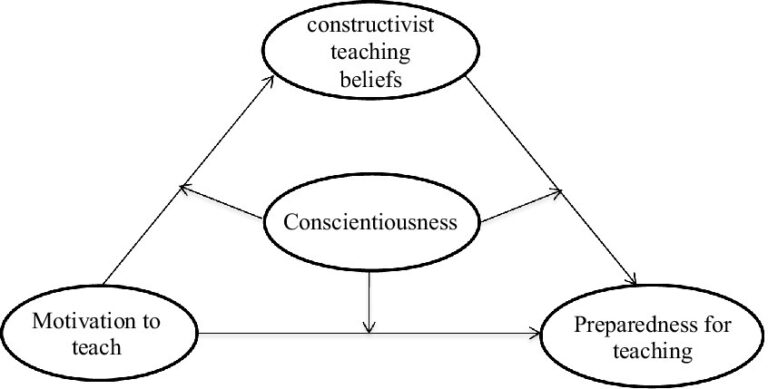

These “seeing” apps are built on several key AI pillars, and they are increasingly woven into everyday phone use. The primary engine is computer vision, a field of AI that trains machines to interpret and understand information from digital images and videos. It is paired with several specialized sub-disciplines:

- Optical Character Recognition (OCR): This technology is fundamental for reading. Advanced OCR engines can recognize text almost instantly on everything from a street sign to a page in a book or a restaurant menu, and the app then reads it aloud. Modern implementations can handle various fonts, lighting conditions, and even slight print imperfections.

- Image Recognition and Object Detection: This allows the app to identify everyday objects, from a chair in a room to a can of soup on a shelf. More advanced systems use vast datasets to recognize thousands of objects, brands, and even logos, providing crucial context for navigation and daily tasks.

- Facial Recognition: With user consent, these apps can be trained to recognize the faces of friends, family, and colleagues. When a familiar person enters the camera’s view, the app can announce their name, and some can even estimate their distance and emotional expression, adding a rich social layer to interactions.

- Scene Description: This is perhaps the most computationally intensive feature. Using generative image-captioning models, the app can provide an audible summary of an entire scene. It might say, “A person is sitting at a wooden desk with a laptop and a coffee mug,” giving the user a holistic understanding of their surroundings. It is also one of the most active areas of development in AI-enabled cameras and vision.
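
To make this division of labor concrete, here is a minimal Python sketch of how an app might route each camera frame to a single recognizer based on the mode the user has selected. The `Frame` class and the four recognizer functions are hypothetical stand-ins that return pre-filled results. A real app would call on-device OCR, detection, face recognition, and captioning models at those points.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class Frame:
    """Hypothetical camera frame carrying pre-computed recognizer outputs."""
    text: str = ""
    objects: Tuple[str, ...] = ()
    faces: Tuple[str, ...] = ()
    caption: str = ""


def read_text(frame: Frame) -> str:
    # Stand-in for an OCR model.
    return frame.text or "No text found."


def detect_objects(frame: Frame) -> str:
    # Stand-in for an object detector.
    return ", ".join(frame.objects) or "No objects recognized."


def recognize_people(frame: Frame) -> str:
    # Stand-in for face recognition against the user's enrolled contacts.
    return ", ".join(frame.faces) or "No familiar faces."


def describe_scene(frame: Frame) -> str:
    # Stand-in for an image-captioning model.
    return frame.caption or "Unable to describe the scene."


# Each user-selectable mode maps to exactly one recognizer.
MODES: Dict[str, Callable[[Frame], str]] = {
    "text": read_text,
    "objects": detect_objects,
    "people": recognize_people,
    "scene": describe_scene,
}


def narrate(frame: Frame, mode: str) -> str:
    """Return the sentence the app would speak for this frame and mode."""
    handler = MODES.get(mode)
    if handler is None:
        raise ValueError(f"unknown mode: {mode!r}")
    return handler(frame)


print(narrate(Frame(text="EXIT"), "text"))  # EXIT
```

With this design, adding a new mode such as a color identifier only requires registering one more function in `MODES`. The `narrate` function does not need to change.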

A critical aspect of this technology is the rise of on-device processing, often called edge AI. By running AI models directly on the smartphone, apps can provide instant feedback without needing an internet connection, which is vital for tasks like quickly reading a sign while walking. It also enhances user privacy, because sensitive data such as images of people or personal documents does not need to be sent to the cloud.
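
One way to express an on-device-first policy is a small routing function. This sketch assumes a hypothetical set of tasks that have local models, and a rule that frames containing faces never leave the phone. The task names and rules are illustrative and are not taken from any particular app.

```python
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on-device"
    CLOUD = "cloud"
    UNAVAILABLE = "unavailable"


# Hypothetical: tasks this sketch assumes have a local model.
ON_DEVICE_TASKS = {"text", "barcode", "document", "objects", "people"}


def choose_route(task: str, online: bool, cloud_consent: bool,
                 contains_faces: bool = False) -> Route:
    """Prefer local processing; upload only with connectivity, consent, and no faces."""
    if task in ON_DEVICE_TASKS:
        return Route.ON_DEVICE  # works offline; the image never leaves the phone
    if online and cloud_consent and not contains_faces:
        return Route.CLOUD  # e.g. a heavier scene-description model
    return Route.UNAVAILABLE  # tell the user instead of silently uploading
```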

A Comparative Look at the AI Accessibility Landscape

While mobile apps are leading the charge in accessibility, they are part of a broader ecosystem of assistive technology. The landscape includes everything from dedicated hardware to hybrid solutions that blend AI with human intelligence. Understanding this spectrum is key to appreciating where the technology is and where it’s headed.

Hardware vs. Software: The Accessibility Toolkit

The conversation around visual aids is no longer limited to canes and guide dogs, and wearables are a major source of new ideas in this space. On one end of the spectrum are dedicated hardware solutions, which often take the form of smart glasses or wearable cameras. The OrCam MyEye clips onto the frame of a user’s existing glasses, while Envision Glasses build the camera into the glasses themselves; both provide a hands-free experience. Their primary advantage is their discreet, always-on nature: a user can simply turn their head toward what they want to read or identify. This is the central promise of smart glasses, which aim to seamlessly integrate digital information with the physical world.

On the other end is the software-only approach: the smartphone app. The key advantages here are availability and cost. Most people already own a powerful smartphone, which makes the barrier to entry very low. These apps consolidate numerous tools, such as a document scanner, a barcode reader, and a color identifier, into a single free or low-cost application. The primary drawback is that the user must hold and point their phone, which can be cumbersome. However, because mobile software moves quickly, these apps often receive new features and performance updates more frequently than hardware-based solutions.

The Human-in-the-Loop Model and the AI Future

An interesting hybrid model is Be My Eyes, which started by connecting blind users with sighted volunteers via live video chat. More recently, it has integrated generative AI to provide an automated “Virtual Volunteer” option. This reflects a broader trend: AI is not necessarily replacing human help but augmenting it. It offers an instant, 24/7 option for quick tasks while keeping the human connection available for more complex or nuanced situations. This synergy between human and machine intelligence recurs throughout the AI assistant space and is proving to be a highly effective model for accessibility.
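
The augment-rather-than-replace pattern can be sketched as a confidence-based hand-off: the AI answers directly when it is confident and otherwise offers a human volunteer. The `AIAnswer` type, the 0.8 threshold, and the reply wording are assumptions made for illustration. The source does not describe how Be My Eyes decides when to suggest a volunteer.

```python
from dataclasses import dataclass


@dataclass
class AIAnswer:
    text: str
    confidence: float  # hypothetical model score between 0.0 and 1.0


def respond(answer: AIAnswer, volunteers_online: bool,
            threshold: float = 0.8) -> str:
    """Answer directly when confident; otherwise offer a human volunteer."""
    if answer.confidence >= threshold:
        return answer.text
    if volunteers_online:
        return f"Possibly: {answer.text}. Would you like to call a volunteer?"
    return f"Possibly: {answer.text}. No volunteers are available right now."
```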

Ultimately, the choice between hardware and software often comes down to personal preference, specific use cases, and cost. Many users employ a combination of tools, using a smartphone app for detailed document reading at home and wearable tech for hands-free navigation while out and about. This multi-tool approach is becoming the new standard for a tech-empowered, independent lifestyle.

Real-World Impact and the Convergence of AI Ecosystems

The theoretical capabilities of these AI tools are impressive, but their true value is measured in the daily moments of independence they create. The technology is not just a novelty; it is a functional utility that integrates into every facet of life, from education and work to social interaction and home management.

Case Studies in Independence

Consider these real-world scenarios where AI accessibility apps are game-changers:

- The Grocery Store: A user with low vision needs to find a specific brand of gluten-free pasta. Instead of asking for help, they slowly scan the shelves with their phone. The app’s barcode scanner and OCR function work in tandem: the app announces product names as they come into view and, once the user finds the right box, reads the nutritional information and cooking instructions directly from the packaging. Identifying food items in this way is also a key function of AI-enabled kitchen gadgets.

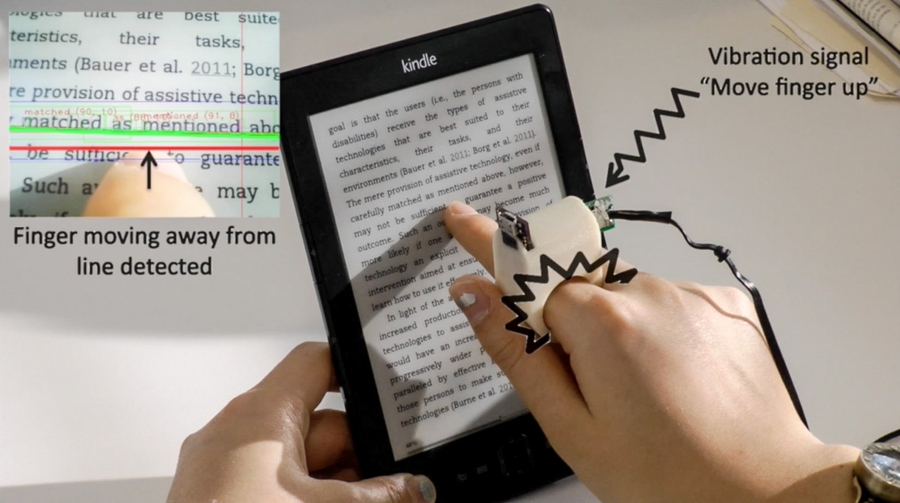

- The University Campus: A blind student receives a printed handout in class. In the past, they would have needed to wait for a digital version or have someone read it to them. Now, they can use their phone’s document scanner. The app guides them to position the camera correctly, captures the image, and reads the entire multi-page document back to them in minutes. This is one of the most powerful applications of the technology in education.

- The Social Gathering: At a noisy café, a user wants to know if their friends have arrived. They can pan their phone across the room. The app, having been trained on their friends’ faces, discreetly announces, “Sarah and Tom are sitting at a table about 10 feet in front of you,” transforming a potentially anxious experience into a confident one.
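
The camera guidance in the university scenario can be sketched as a function that turns a detected page outline into one spoken instruction. This sketch assumes a hypothetical page detector that returns an axis-aligned bounding box in pixel coordinates. The 10-pixel margin and the 50% coverage target are illustrative values, not figures from any real app.

```python
from typing import Optional, Tuple

# (left, top, right, bottom) in pixels, from a hypothetical page detector.
Box = Tuple[int, int, int, int]


def framing_hint(frame_w: int, frame_h: int, page: Optional[Box],
                 margin: int = 10, min_coverage: float = 0.5) -> str:
    """Turn a detected page outline into one spoken instruction."""
    if page is None:
        return "No page detected."
    left, top, right, bottom = page
    # An edge within `margin` pixels of the frame border is treated as cut off.
    cut_left, cut_right = left <= margin, right >= frame_w - margin
    cut_top, cut_bottom = top <= margin, bottom >= frame_h - margin
    # Cut off on opposite sides: the page is larger than the view.
    if (cut_left and cut_right) or (cut_top and cut_bottom):
        return "Too close. Move the phone away."
    # Cut off on one side: report which edge is missing.
    if cut_left:
        return "Left edge not visible."
    if cut_right:
        return "Right edge not visible."
    if cut_top:
        return "Top edge not visible."
    if cut_bottom:
        return "Bottom edge not visible."
    # Whole page visible: check that it fills enough of the frame to read.
    coverage = (right - left) * (bottom - top) / (frame_w * frame_h)
    if coverage < min_coverage:
        return "Too far. Move the phone closer."
    return "Hold steady."
```

The function reports which edge is missing rather than giving a direction such as "move up", because that direction depends on how the user is holding the phone.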

Integration with the Broader Smart Ecosystem

The power of these accessibility tools is amplified when they connect with other AI-driven systems. The future of accessibility is not isolated but integrated. For instance, a user could ask their voice assistant, “Hey, can you tell me who is at the front door?” The assistant would then activate the camera on their smart doorbell, run the image through a recognition model, and reply, “It looks like the delivery driver.” This kind of integration between voice assistants and smart home systems creates a more aware and accessible living environment.
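
The doorbell exchange is essentially a three-step pipeline: match the request, fetch a snapshot, and phrase the recognition result. In this sketch, `get_snapshot` and `recognize` are hypothetical callables that stand in for a doorbell API and a recognition model. The keyword match is a deliberately naive substitute for real intent detection.

```python
from typing import Callable, Optional

Snapshot = Callable[[], Optional[bytes]]       # hypothetical doorbell camera API
Recognizer = Callable[[bytes], Optional[str]]  # hypothetical recognition model


def who_is_at_door(get_snapshot: Snapshot, recognize: Recognizer) -> str:
    """Fetch a doorbell image and phrase what the model sees."""
    image = get_snapshot()
    if image is None:
        return "I couldn't reach the doorbell camera."
    label = recognize(image)
    if label is None:
        return "Someone is at the door, but I don't recognize them."
    return f"It looks like {label}."


def handle_request(utterance: str, get_snapshot: Snapshot,
                   recognize: Recognizer) -> str:
    # Naive keyword match standing in for real intent detection.
    if "door" in utterance.lower():
        return who_is_at_door(get_snapshot, recognize)
    return "Sorry, I can't help with that yet."
```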

This convergence extends to other areas as well. Imagine an AI-powered app describing the visual elements of a video game to make it more accessible. Or consider healthcare, where an app could help a user identify their medication by reading the tiny print on a prescription bottle. The potential applications are vast and show that accessibility is becoming a foundational component of the entire AI and IoT ecosystem.

Best Practices, Challenges, and the Road Ahead

While the progress in AI-powered visual accessibility is undeniable, the technology is not without its challenges and limitations. Achieving its full potential requires a collaborative effort between developers, users, and the wider tech community.

Tips and Considerations for Users and Developers

For users looking to adopt these tools, a few best practices can significantly improve the experience. Good lighting is crucial for OCR and object recognition accuracy. Learning to hold the phone steady and at the correct distance from a target takes practice. Exploring all the app’s features, often called “channels” or “modes,” is key to unlocking its full utility.
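
Because lighting and steadiness matter so much, an app can check both before running OCR and tell the user what to fix. The sketch below assumes a grayscale frame given as a list of rows of at least 3 by 3 pixels. It uses mean brightness as the lighting check and the variance of the Laplacian, a common blur heuristic, as the sharpness check. Both thresholds are hypothetical and would need tuning for each camera.

```python
from statistics import mean, pvariance
from typing import List

Gray = List[List[int]]  # grayscale pixels, 0 (black) to 255 (white)


def laplacian_variance(img: Gray) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels; low means blurry."""
    values = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, len(img) - 1)
        for x in range(1, len(img[0]) - 1)
    ]
    return pvariance(values)


def capture_hint(img: Gray, min_brightness: float = 60.0,
                 min_sharpness: float = 100.0) -> str:
    """Return spoken advice about lighting and focus (hypothetical thresholds)."""
    brightness = mean(v for row in img for v in row)
    if brightness < min_brightness:
        return "Too dark. Try turning on a light."
    if laplacian_variance(img) < min_sharpness:
        return "Image is blurry. Hold the phone steady."
    return "Good conditions."
```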

For developers, the mantra must be “design with, not for.” Engaging the blind and low vision community throughout the development process is non-negotiable. This ensures the features are genuinely useful and the user interface is intuitive for non-visual navigation. Furthermore, developers must prioritize:

- Offline Functionality: Core features like text and barcode reading must work flawlessly without an internet connection.

- Speed and Efficiency: The time between pointing the camera and receiving audible feedback needs to be as close to instantaneous as possible.

- Discretion: The app’s audio feedback should be manageable, perhaps integrating well with bone-conduction headphones to allow the user to hear both the app and their environment.
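
Speed and discretion pull in different directions. New information should be spoken immediately, but repeating the same label on every frame quickly becomes noise. A simple per-label cooldown, sketched below with an assumed five-second window, addresses both. Timestamps are passed in explicitly so the behavior is easy to test. In a real app, they might come from `time.monotonic()`.

```python
from typing import Dict


class Announcer:
    """Speak each label at most once per cooldown window (hypothetical policy)."""

    def __init__(self, cooldown_s: float = 5.0):
        self.cooldown_s = cooldown_s
        self._last_spoken: Dict[str, float] = {}  # label -> time last spoken

    def should_speak(self, label: str, now: float) -> bool:
        last = self._last_spoken.get(label)
        if last is not None and now - last < self.cooldown_s:
            return False  # spoken recently: stay quiet
        self._last_spoken[label] = now
        return True  # new, or not heard for a while: speak immediately
```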

Current Limitations and Future Horizons

The primary pitfall of current technology is its fallibility. AI models can still make mistakes, especially in cluttered environments, with unusual fonts, or in poor lighting. Misidentifying an object or misreading a crucial word on a warning label can have real consequences. Therefore, these tools should be considered powerful aids, not infallible replacements for traditional mobility tools and user judgment.
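
One common way to reduce misreads is to require agreement across several consecutive frames before announcing text, so that a single misread frame is outvoted. This is a minimal voting sketch. The five-frame window and three-vote threshold are assumptions, and a real app would likely combine this with the OCR engine's own confidence scores.

```python
from collections import Counter, deque
from typing import Deque, Optional


class StableReader:
    """Report OCR text only once it appears in at least `min_votes` recent frames."""

    def __init__(self, window: int = 5, min_votes: int = 3):
        self.recent: Deque[str] = deque(maxlen=window)  # oldest reads drop off
        self.min_votes = min_votes

    def update(self, ocr_text: str) -> Optional[str]:
        """Record one frame's OCR result; return the text once stable, else None."""
        self.recent.append(ocr_text.strip())
        text, votes = Counter(self.recent).most_common(1)[0]
        return text if votes >= self.min_votes else None
```

With these settings, the reads `DANGER`, `DANCER`, `DANGER` produce no announcement until a third matching `DANGER` arrives. Because `update` keeps returning the stable text on later frames, it pairs naturally with a repeat-suppression step before speech.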

Looking forward, the future is incredibly bright. We can expect more sophisticated scene understanding, in which the AI infers context and intent. For example, instead of just saying “A person and a car,” it might say, “A person is waiting to cross the street as a car approaches.” Advances in AR and VR hardware could lead to augmented reality glasses that provide real-time audio labels for objects in a user’s field of view. A longer-term vision, explored in neural interface research, might even involve feeding visual information directly to the brain, though this remains at the research and prototype stage for now. Continuous improvements in AI models and mobile hardware suggest that the capabilities of these life-changing applications will keep growing.

Conclusion: A Clearer Vision for the Future

The proliferation of AI-powered visual accessibility apps across all major mobile platforms marks a watershed moment for assistive technology. By transforming the smartphone into an intelligent “seeing” device, this technology is providing an unprecedented level of independence, information access, and social inclusion for the blind and low vision community. While not a perfect substitute for human sight, these tools are profoundly changing lives by making the visual world more navigable and understandable. They represent the very best of what technology can achieve: not just creating efficiency or entertainment, but fundamentally empowering people and dismantling barriers. As AI continues to evolve, the future of accessibility is not just bright—it’s becoming clearer every day.