The landscape of artificial intelligence is undergoing a major shift. For years, the immense computational power required for advanced AI, particularly generative models, has tethered these capabilities to the cloud. That paradigm is now being challenged by a new wave of silicon: purpose-built chips designed to run generative AI models directly on edge devices. This evolution moves intelligence from centralized data centers to the devices we interact with daily, from cameras and cars to home assistants and wearables. The implications are significant: AI that is faster, more private, more reliable, and more deeply integrated into the physical world. This is not merely an incremental upgrade; it marks the start of a new era of ubiquitous, responsive, and personal artificial intelligence.

The Generative Leap: Redefining Edge AI Capabilities

For the better part of a decade, AI at the edge has focused primarily on discriminative inference tasks: using a pre-trained model to analyze data and produce a prediction or classification. Think of a smart security camera distinguishing a person from a pet, or a smartphone unlocking with facial recognition. These are powerful but fundamentally reactive capabilities. The latest developments push beyond classification toward on-device generation, a cornerstone of modern AI.

From Inference to Creation: The Old vs. The New

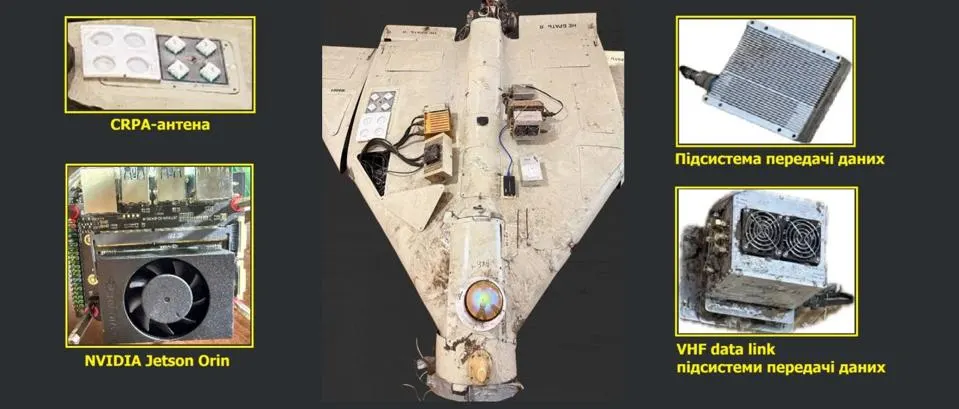

The traditional edge AI chip was optimized for running models like MobileNet for image classification or YOLO for object detection. These workloads are computationally intensive, but each input passes through the network once and produces a fixed-size answer. Generative AI, which powers applications like large language models (LLMs) and image diffusion models, is an entirely different beast. It must produce new, coherent content, and it typically does so through many repeated passes: an LLM generates text one token at a time, and a diffusion model refines an image over a series of denoising steps. This could mean a smart speaker holding a natural, multi-turn conversation without sending every query to the cloud, or a camera-equipped drone generating a real-time summary of the landscape it is surveying. This leap from reactive analysis to proactive creation is what defines the new generation of edge AI hardware.

Why Now? The Confluence of Hardware, Software, and Demand

This transition is being driven by a confluence of technological advances and market needs. First, hardware architects have developed new chip designs, often centered on powerful Neural Processing Units (NPUs), that are structured to handle the complex, iterative workloads of generative models. Second, progress in software, particularly in model quantization and pruning, allows large models like LLaMA or Stable Diffusion to be compressed into smaller, more efficient versions that can run on resource-constrained hardware. Finally, there is surging demand for applications that offer low latency, enhanced privacy, and offline functionality, three key advantages that on-device processing has over cloud-dependent solutions. This convergence is most visible in smartphones and mobile devices, but it is pushing innovation across the board.
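To make the compression argument concrete, here is the weight-storage arithmetic for a hypothetical 7-billion-parameter model at different numeric precisions. It is a minimal sketch that counts weights only; activations, the attention cache, and runtime overhead are ignored, and the helper names are illustrative.

```python
# Approximate weight storage for a model at different numeric precisions.
# Assumption: weights only; activations, KV cache and runtime overhead ignored.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,  # two 4-bit values packed per byte
}


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


params = 7e9  # hypothetical 7B-parameter model
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_footprint_gb(params, dtype):.1f} GB")
```

The same model shrinks from 28 GB at FP32 to 3.5 GB at INT4, which is the difference between "cloud only" and "fits in a high-end phone's memory".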

Key Performance Indicators: What Defines a GenAI Edge Chip?

When evaluating these new chips, the metrics have evolved. Raw performance, measured in trillions of operations per second (TOPS), still matters, but it is no longer the only factor. The critical metric is now performance per watt (TOPS/W), because most edge devices are battery-powered or have strict thermal limits. TOPS figures are usually quoted at a specific precision, often INT8, so comparisons are only meaningful at the same precision. Other key specifications include memory bandwidth, which determines how fast data can be fed to the processing cores and often limits token-generation speed more than raw compute does, and native support for low-precision data types such as INT8 and INT4, which are essential for running quantized generative models efficiently.
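The interplay between memory bandwidth and compute can be estimated with a common back-of-the-envelope model for single-stream LLM decoding: each generated token requires streaming roughly all weights from memory and costs about two operations (a multiply and an add) per parameter. This is a simplification, and the model size, bandwidth, and TOPS values below are hypothetical, not specifications of any real chip.

```python
def decode_rate_bounds(num_params, bytes_per_param, bandwidth_gb_s, npu_tops):
    """Rough upper bounds on single-stream LLM decode speed, in tokens per second.

    Simplifying assumptions (a back-of-the-envelope model, not a simulator):
    - every weight is streamed from memory once per generated token (batch size 1);
    - each parameter costs about 2 operations per token (one multiply, one add);
    - KV-cache traffic, on-chip reuse and utilization losses are ignored.
    """
    bytes_per_token = num_params * bytes_per_param
    memory_bound = bandwidth_gb_s * 1e9 / bytes_per_token
    compute_bound = npu_tops * 1e12 / (2 * num_params)
    return {
        "memory_bound": memory_bound,
        "compute_bound": compute_bound,
        "estimate": min(memory_bound, compute_bound),
    }


# Hypothetical chip and model: 3B parameters at INT4, 60 GB/s DRAM, 40 TOPS NPU.
bounds = decode_rate_bounds(3e9, 0.5, 60, 40)
for name, value in bounds.items():
    print(f"{name}: {value:,.0f} tokens/s")
```

For these inputs the memory bound (40 tokens/s) sits far below the compute bound (roughly 6,700 tokens/s), which illustrates why bandwidth, not TOPS, often sets the pace of on-device text generation.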

Under the Hood: The Architecture of Next-Gen Edge AI Accelerators

Enabling generative AI on a small, power-constrained chip requires a fundamental rethinking of processor architecture. These new accelerators are not simply more powerful versions of their predecessors; they are specialized engines built from the ground up for the unique demands of models that create, reason, and interact in sophisticated ways.

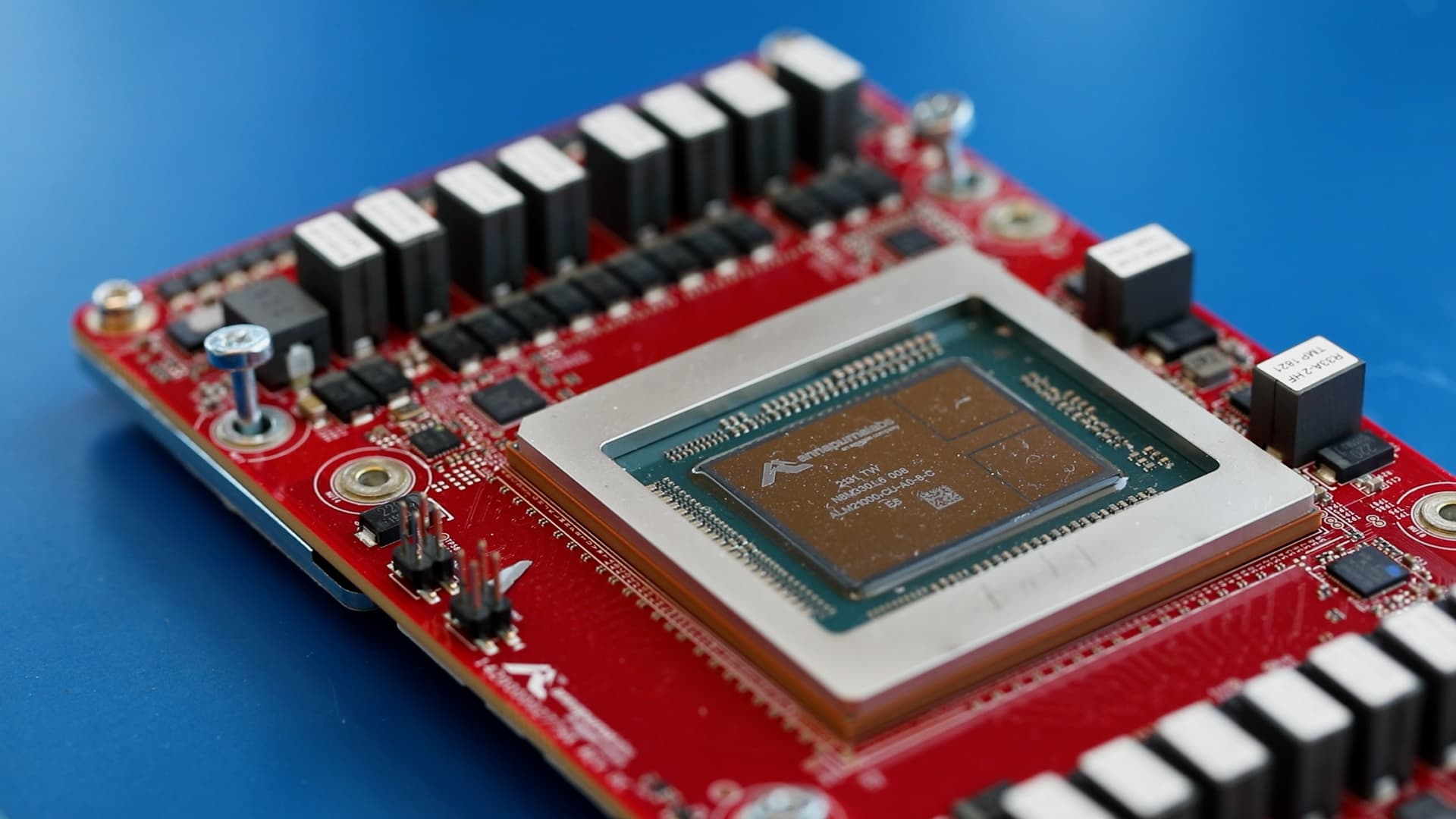

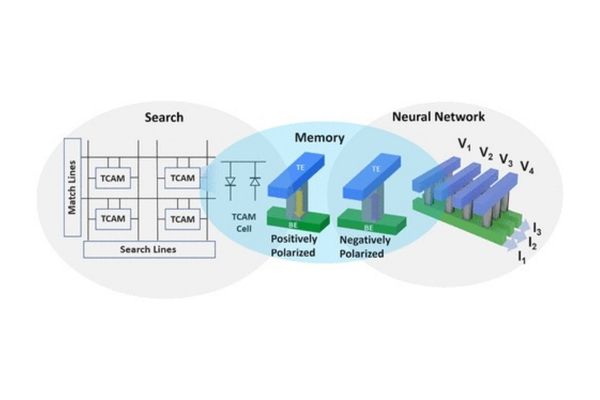

The Central Role of the Neural Processing Unit (NPU)

At the heart of every modern AI edge chip is the NPU. Unlike a general-purpose CPU or a graphics-focused GPU, an NPU is an array of processing cores designed specifically for the mathematical operations that underpin neural networks, such as matrix multiplications and convolutions. For generative AI, NPUs are increasingly tuned for transformer-based architectures, the foundation of modern LLMs and of many vision models. Generation itself is sequential: a language model produces one token at a time, and a diffusion model runs a series of denoising steps, each depending on the result of the previous one. What the NPU parallelizes is the large matrix arithmetic inside each step, and speeding up that arithmetic is what shortens the time per token or per denoising step.
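The mix of sequential and parallel work in token-by-token generation can be illustrated with a toy, single-layer, single-head attention decoder in NumPy. This is a minimal sketch under stated assumptions: the vocabulary size, dimensions, and random weights are hypothetical stand-ins for a trained model, and positional encodings and feed-forward layers are omitted. The outer loop is inherently sequential, while the matrix-vector products inside each step are the kind of work an NPU parallelizes.

```python
import numpy as np

# Hypothetical toy sizes and random weights standing in for a trained
# single-layer, single-head transformer (no positional encoding, no MLP).
VOCAB, D = 16, 8
rng = np.random.default_rng(0)
embed = rng.standard_normal((VOCAB, D))
w_q, w_k, w_v = (rng.standard_normal((D, D)) for _ in range(3))
w_out = rng.standard_normal((D, VOCAB))


def attend(q, keys, values):
    """Scaled dot-product attention of one query over all cached positions."""
    scores = keys @ q / np.sqrt(D)            # one score per cached position
    weights = np.exp(scores - scores.max())   # numerically stable softmax
    weights /= weights.sum()
    return weights @ values


def generate(prompt, max_new_tokens):
    """Greedy decoding: one token per step, each step depending on the last."""
    k_cache, v_cache = [], []                 # grows by one entry per processed token
    tokens, new_tokens, pos = list(prompt), [], 0
    while len(new_tokens) < max_new_tokens:
        x = embed[tokens[pos]]
        # These matrix-vector products are the parallel work an NPU accelerates.
        k_cache.append(x @ w_k)
        v_cache.append(x @ w_v)
        h = attend(x @ w_q, np.stack(k_cache), np.stack(v_cache))
        pos += 1
        if pos == len(tokens):                # prompt consumed: emit the next token
            next_token = int(np.argmax(h @ w_out))
            tokens.append(next_token)
            new_tokens.append(next_token)
    return new_tokens


print(generate([1, 2, 3], max_new_tokens=5))
```

Caching keys and values means each step only computes projections for the newest token, but no step can start before the previous token has been chosen.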

Memory, Bandwidth, and the Bottleneck Problem

A powerful NPU is useless if it is starved for data. Generative models have enormous appetites for memory, both for storing the model’s weights and for holding intermediate state (in transformers, for example, the key-value (KV) cache, which stores the attention keys and values of every previous token). The biggest challenge for chip designers is overcoming the “memory wall”: the bottleneck created by the speed at which data can be moved from memory to the processing cores. Next-gen edge chips address this with high-bandwidth on-chip memory (SRAM) and advanced memory management techniques. They are designed to keep the most frequently accessed data as close to the NPU as possible, minimizing the latency and power consumption associated with fetching data from slower, off-chip DRAM.
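The memory taken by a transformer's attention (key-value, or KV) cache can be estimated with simple arithmetic. The sketch below assumes the standard layout of one key and one value vector per KV head, per layer, per token position; the layer count, head configuration, and context length are hypothetical, not taken from any particular chip or model.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   bytes_per_elem, batch_size=1):
    """Memory held by a transformer's key-value cache.

    Factor 2: each layer stores one key and one value vector per KV head
    for every token position in the context.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_elem * batch_size


# Hypothetical configuration: 28 layers, 8 KV heads of dimension 128,
# a 4,096-token context and FP16 (2-byte) cache entries.
size = kv_cache_bytes(28, 8, 128, 4096, 2)
print(f"{size / 2**20:.0f} MiB")
```

With these numbers the cache occupies 448 MiB on top of the weights, and it doubles if the context grows to 8,192 tokens, which is why cache placement and compression matter so much on memory-limited devices.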

Power Efficiency: The Unsung Hero of Edge GenAI

Perhaps the most critical design constraint for edge devices is power. A drone, a pair of smart glasses, or a health-monitoring wearable cannot be tethered to a power outlet. Achieving high TOPS is one thing; achieving it within a power envelope of just a few watts is the real engineering challenge. This is where architectural innovation shines. Techniques like clock gating (stopping the clock to idle circuit blocks so they stop drawing switching power), dataflow optimization (scheduling operations to minimize data movement), and aggressive model quantization all contribute to maximizing TOPS/W. This relentless focus on efficiency is what makes it possible to run a sophisticated AI assistant on a battery-powered wearable or companion device.
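What performance per watt means in practice can be sketched as energy arithmetic. The figures here (a 40 TOPS chip drawing 4 W, 20 tokens/s, a 15 Wh phone-class battery) are hypothetical, and the battery estimate assumes the generative workload is the only load on the device.

```python
def tops_per_watt(tops, watts):
    """Efficiency figure; only comparable across chips at the same precision."""
    return tops / watts


def joules_per_token(power_w, tokens_per_s):
    """Energy per generated token when the accelerator draws `power_w` watts."""
    return power_w / tokens_per_s


def tokens_per_charge(battery_wh, power_w, tokens_per_s):
    """Tokens generated on one charge, if this workload were the only load."""
    battery_j = battery_wh * 3600  # 1 Wh = 3600 J
    return battery_j * tokens_per_s / power_w


# Hypothetical numbers: 40 TOPS at 4 W, 20 tokens/s, 15 Wh battery.
print(tops_per_watt(40, 4))
print(joules_per_token(4, 20))
print(tokens_per_charge(15, 4, 20))
```

Under these assumptions the chip delivers 10 TOPS/W, each token costs 0.2 J, and a full charge covers about 270,000 tokens; halving the power at the same speed doubles that figure.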

Real-World Impact: Unleashing GenAI Across a Spectrum of Devices

The theoretical capabilities of these new chips translate into tangible, transformative applications across nearly every industry and aspect of daily life. By decentralizing generative AI, we are unlocking a new tier of intelligent, autonomous, and personalized experiences.

Smarter Homes and Personalized Assistants

In the home, the impact could be immediate. Imagine an AI assistant that does not need an internet connection to understand complex commands, control your smart devices, or even summarize the day’s events from your connected calendars and emails, with none of that data leaving the device. Robot vacuums could soon understand verbal instructions like, “Clean around the kitchen table but avoid the area where the kids are playing.” Likewise, smart ovens could suggest recipes based on the ingredients they see through an internal camera.

The Future of Vision and Perception

For devices that see the world, the possibilities are even broader. Security cameras could go beyond detecting motion to providing a natural-language summary of events (“A delivery person in a blue shirt dropped off a package at 2:15 PM”). An agricultural drone could generate a real-time report on crop health, highlighting specific areas of concern in plain English. In autonomous vehicles, on-device generative models could support more sophisticated predictive reasoning, helping a car anticipate the actions of pedestrians and other drivers and improving safety and navigation without relying on a constant cloud connection.

Revolutionizing Personal Tech and Wellness

Personal devices will become true companions. In health and wellness, the focus is shifting toward devices that provide real-time, personalized coaching. A fitness wearable could not only track your run but also use generative AI to offer dynamic feedback on your form and pacing. Smart glasses and other AR/VR devices could provide real-time translation or contextually relevant information about your surroundings, generated on the device itself. The same capability extends to accessibility: assistive devices could describe a scene to a visually impaired user in rich, narrative detail.

The New Creative and Productive Frontier

Generative AI at the edge will also empower professionals and creators. Smart cameras could suggest better shot compositions or automatically generate edited clips. In the workplace, smart whiteboards could transcribe a brainstorming session and then generate a summary, action items, and a project plan, all locally and securely.

Navigating the New Landscape: Best Practices and Key Considerations

While the potential of on-device generative AI is immense, realizing it requires careful planning and a deep understanding of the unique challenges and opportunities this new paradigm presents. Both developers and businesses must adapt their strategies to harness its full power.

For Developers: Model Optimization is Non-Negotiable

The mantra for edge AI development is “optimize, optimize, optimize.” Developers cannot simply take a massive, cloud-based model and expect it to run on an edge device.

- Quantization: This is the process of reducing the precision of the model’s weights (e.g., from 32-bit floating-point to 8-bit or 4-bit integers). Moving from 32-bit to 8-bit cuts weight storage fourfold, and to 4-bit eightfold; it can also significantly speed up computation on NPUs with native low-precision support, usually at the cost of a small loss in accuracy.

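- Pruning: This technique identifies and removes redundant or unimportant connections within the neural network, further shrinking the model, often with minimal impact on accuracy. Scattered (unstructured) zeros only translate into speedups on hardware or kernels designed to exploit sparsity.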

- Knowledge Distillation: This involves training a smaller, “student” model to mimic the behavior of a larger, more powerful “teacher” model, effectively transferring its capabilities into a more compact form.
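Quantization can be sketched in a few lines of NumPy. This example uses symmetric per-tensor quantization, one of the simplest schemes; production toolchains commonly use per-channel or group-wise scales and calibration data, which are not shown. The function names are illustrative, not part of any library.

```python
import numpy as np


def quantize_symmetric(weights, num_bits=8):
    """Symmetric per-tensor quantization of float weights to signed integers.

    INT4 values are kept in an int8 container here; real runtimes pack two
    4-bit values per byte.
    """
    qmax = 2 ** (num_bits - 1) - 1                  # 127 for INT8, 7 for INT4
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / qmax if max_abs > 0 else 1.0  # one float scale per tensor
    q = np.clip(np.round(weights / scale), -qmax, qmax).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map integers back to approximate float weights."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.standard_normal((256, 256)).astype(np.float32)
q8, s8 = quantize_symmetric(w, num_bits=8)
print("bytes:", w.nbytes, "->", q8.nbytes)
print("max abs error:", np.max(np.abs(dequantize(q8, s8) - w)))
```

The INT8 copy is a quarter of the FP32 size, and each dequantized weight is within about half a quantization step (`scale / 2`) of the original. Calling `quantize_symmetric(w, num_bits=4)` gives a much coarser grid of 15 levels (-7 to 7), which is why INT4 deployments usually rely on finer-grained scales.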

Mastering these techniques is essential for deploying effective GenAI applications at the edge.
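Pruning and knowledge distillation can also be sketched in NumPy. The pruning function shows unstructured magnitude pruning (removing the smallest-magnitude weights), one of several pruning criteria. The distillation loss is one common formulation: the KL divergence between temperature-softened teacher and student outputs, scaled by the squared temperature; in practice it is usually combined with an ordinary loss on the true labels. Both functions are illustrative helpers, not a specific framework's API.

```python
import numpy as np


def magnitude_prune(weights, sparsity):
    """Unstructured magnitude pruning: zero the smallest-|w| fraction `sparsity`."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    # Ties at the threshold are also pruned, so sparsity can slightly exceed the target.
    return np.where(np.abs(weights) <= threshold, 0.0, weights)


def softmax(logits, temperature):
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T**2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = np.sum(p_teacher * (np.log(p_teacher) - np.log(p_student)), axis=-1)
    return float(np.mean(kl)) * temperature ** 2


rng = np.random.default_rng(0)
w = rng.standard_normal((64, 64))
pruned = magnitude_prune(w, sparsity=0.5)
print("fraction zeroed:", np.mean(pruned == 0))

teacher = rng.standard_normal((4, 10))
student = rng.standard_normal((4, 10))
print("distillation loss:", distillation_loss(student, teacher))
```

In a real training loop, `distillation_loss` would be minimized with respect to the student's parameters through an autodiff framework; NumPy is used here only to show the arithmetic.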

For Businesses: Balancing Cost, Performance, and Privacy

For organizations looking to integrate this technology, the decision is not just about technical feasibility but also about strategy.

- Hardware Selection: The market for AI edge chips is becoming increasingly competitive and fragmented. Choosing the right System-on-Chip (SoC) involves a trade-off between performance (TOPS), power consumption (Watts), and cost.

- Data Strategy: On-device processing offers a powerful privacy advantage. Businesses can market this as a key feature, building trust with consumers who are increasingly wary of their data being sent to the cloud.

- Hybrid Approach: Not everything needs to run on the edge. The most effective solutions will likely use a hybrid model, where real-time, low-latency tasks are handled on-device, while larger, less time-sensitive tasks (like model retraining) are offloaded to the cloud.
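A hybrid split like this is ultimately a routing policy. The sketch below shows one possible policy in Python; the `Task` fields, the latency estimates, and the rule of deferring sensitive work that has no local model are all hypothetical choices, not a standard design.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    latency_budget_ms: float  # how long the user can wait for a result
    local_ms: float           # estimated on-device latency (if deployed locally)
    on_device: bool           # is a model for this task deployed on the device?
    sensitive: bool           # does the input contain private data?


def route(task, online, cloud_ms):
    """Illustrative policy: returns 'device', 'cloud', or 'defer'."""
    if task.on_device:
        # Local execution is private and works offline, so prefer it when it
        # meets the budget, when data is sensitive, or when there is no network.
        if task.sensitive or not online or task.local_ms <= task.latency_budget_ms:
            return "device"
        # Local is too slow for the budget: offload only if the cloud is faster.
        return "cloud" if cloud_ms < task.local_ms else "device"
    # No local model: non-sensitive work can go to the cloud when online;
    # otherwise it waits (sensitive work waits for user consent, a hypothetical rule).
    if online and not task.sensitive:
        return "cloud"
    return "defer"


command = Task("voice command", latency_budget_ms=300, local_ms=120,
               on_device=True, sensitive=True)
retrain = Task("model retraining", latency_budget_ms=3.6e6, local_ms=0,
               on_device=False, sensitive=False)
print(route(command, online=True, cloud_ms=400))
print(route(retrain, online=True, cloud_ms=60_000))
print(route(retrain, online=False, cloud_ms=60_000))
```

Here the voice command stays on the device, while retraining goes to the cloud when online and is deferred otherwise. A production router would also weigh battery level, thermal state, and the cost of each cloud call.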

Common Pitfalls to Avoid

A common mistake is underestimating the complexity of the software stack. A powerful chip is only as good as the drivers, compilers, and development tools that support it. Another pitfall is focusing solely on model accuracy in a vacuum. For an edge device, a model that is 95% accurate and runs in 100 milliseconds is often far more valuable than a 98% accurate model that takes two seconds to run and drains the battery.
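The accuracy-versus-latency trade-off can be made explicit as a selection rule: choose the most accurate model that fits the device's latency and energy budgets. The accuracy and latency numbers below are the ones from the paragraph above; the energy figures, budgets, and helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    accuracy: float    # validation accuracy
    latency_ms: float  # measured on the target device
    energy_mj: float   # energy per inference in millijoules, measured on-device


def pick_model(candidates, max_latency_ms, max_energy_mj):
    """Return the most accurate candidate that fits both budgets, or None."""
    feasible = [c for c in candidates
                if c.latency_ms <= max_latency_ms and c.energy_mj <= max_energy_mj]
    return max(feasible, key=lambda c: c.accuracy, default=None)


candidates = [
    Candidate("compact", accuracy=0.95, latency_ms=100, energy_mj=40),
    Candidate("large", accuracy=0.98, latency_ms=2000, energy_mj=900),
]
choice = pick_model(candidates, max_latency_ms=250, max_energy_mj=100)
print(choice.name if choice else "no model fits the budget")
```

With a 250 ms budget the compact model wins despite its lower accuracy; the large model is chosen only if both budgets are relaxed far enough.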

Conclusion: A New Era of Ubiquitous Intelligence

The emergence of edge chips capable of running generative AI represents more than an evolution in processing power; it signals a fundamental shift in how we interact with technology. By moving AI from distant data centers into the devices around us, we are breaking down the barriers of latency, privacy, and connectivity. This decentralization will foster a new generation of applications that are more responsive, personal, and reliable. From personal robots that learn and adapt to our homes to security devices that provide intelligent, private monitoring, the possibilities are wide-ranging. The journey ahead involves significant technical challenges, but the destination is clear: a future where powerful, creative, and helpful AI is not just a service we connect to, but a seamless part of everyday life.