The Intelligence Within: A Paradigm Shift in AI and Sensor Technology

For decades, the path to creating smarter, more capable machines seemed straightforward: build better sensors. The prevailing wisdom held that to grant a robot human-like dexterity or an IoT device uncanny awareness, we needed to equip it with hardware that could see, hear, and feel the world with ever-increasing fidelity. This hardware-centric race has given us breathtaking technology, from high-resolution LiDAR in autonomous vehicles to bio-mimetic tactile sensors for robotic hands. Yet, a new and profound paradigm is emerging from the world of artificial intelligence, challenging this long-held assumption. A growing body of research and real-world application suggests that the sophistication of the AI model—and, crucially, the *way* it is taught—can be far more impactful than the raw quality of the sensor data it receives. This article explores the monumental shift from a hardware-first to an intelligence-first approach. We will delve into how curated AI learning experiences are enabling machines to achieve remarkable feats with simpler, more accessible hardware, unlocking a new wave of innovation across the entire spectrum of AI Sensors & IoT News, from robotics to the smart home.

Section 1: The Old Guard vs. The New Wave: Hardware Fidelity vs. Algorithmic Insight

The evolution of intelligent systems has long been a story of co-development between sensing hardware and processing software. However, the emphasis has historically been skewed towards the former, creating a distinct approach that is now being challenged by the power of modern AI.

The Hardware-Centric Approach: A Race for Higher Fidelity

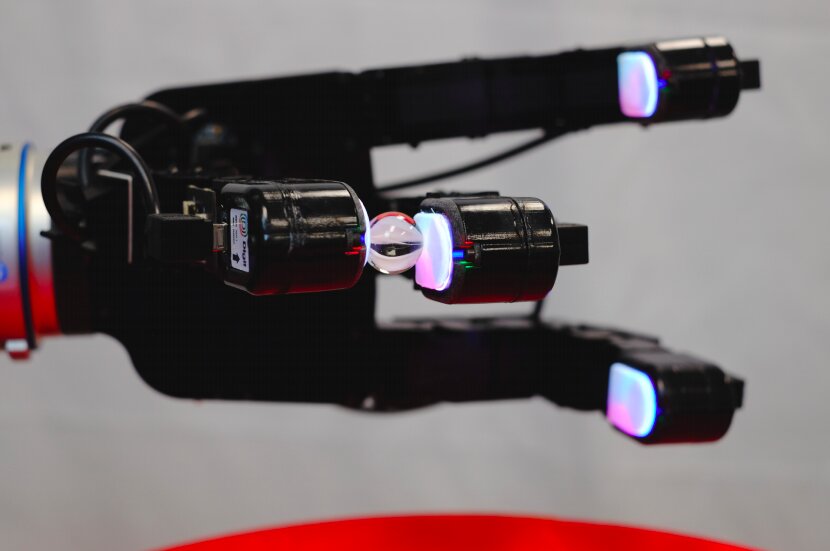

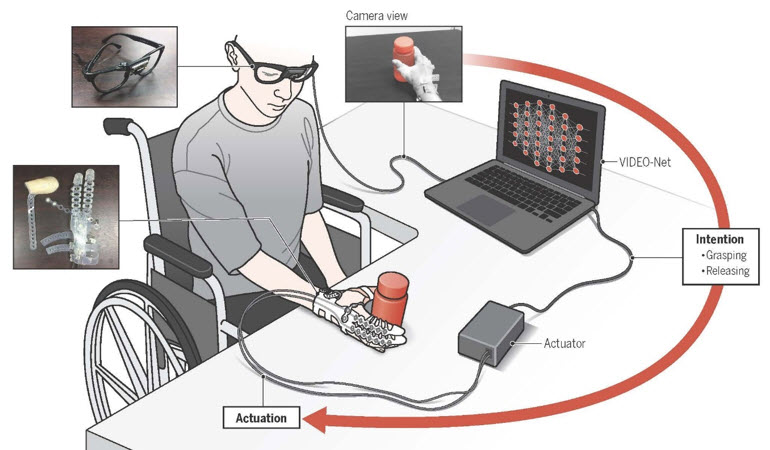

The traditional model for advancing robotics and IoT has been fundamentally driven by a quest for better physical sensors. The logic is intuitive: if a machine can perceive the world with more detail and accuracy, it can make better decisions. This philosophy has fueled incredible advancements. In the realm of AI-enabled Cameras & Vision News, it led to the push for higher resolutions, greater dynamic range, and faster frame rates. For Autonomous Vehicles News, it manifested as a race to develop LiDAR systems that could generate denser point clouds, seeing further and with more precision. In advanced robotics, engineers have poured resources into developing tactile sensors that mimic the complex network of nerves in a human fingertip, aiming to give machines a nuanced sense of touch for delicate manipulation tasks. This approach treats the sensor as the primary gateway to understanding, where the goal is to feed the AI the cleanest, most detailed data possible. While undeniably effective for well-defined tasks in controlled environments, this hardware-first strategy has inherent limitations: high cost, physical fragility, and a potential brittleness when faced with novel situations not perfectly captured by its high-fidelity senses.

The Emerging Intelligence-First Paradigm

The new paradigm flips the script. It posits that the “brain” (the AI model) is often more important than the “nerve endings” (the sensors). This approach champions the idea that an advanced AI, trained through a meticulously designed curriculum, can learn to infer complex environmental states and properties from simpler, noisier, or lower-fidelity sensor data. It’s less about capturing a perfect snapshot of reality and more about building a robust internal model of how the world works. A powerful analogy is a master chef. A chef doesn’t need a mass spectrometer to know when a steak is perfectly cooked; they rely on a combination of simpler senses—the sizzle’s sound, the color of the crust, the firmness to the touch—all interpreted through the lens of thousands of hours of experience. This “experience” is what the intelligence-first paradigm seeks to give to machines, not through better sensors, but through better training. As seen in recent Robotics News, a robot can learn to detect object slip not from a hyper-sensitive tactile sensor, but by learning the subtle visual and motor-current cues that precede a fall, an insight gained through millions of simulated trials.

Section 2: The Engine of Understanding: Curriculum Learning and Simulated Experience

The “magic” behind the intelligence-first paradigm isn’t magic at all; it’s a combination of sophisticated training methodologies and the immense scale of virtual environments. These tools allow AI models to build the deep, intuitive understanding necessary to operate effectively with imperfect data.

Building Intuition with Curriculum Learning

At the heart of this new approach is a machine learning strategy called “curriculum learning.” Inspired by how humans learn, this technique involves training an AI model on a sequence of tasks, starting with very simple examples and gradually increasing the difficulty and complexity. Instead of bombarding a model with random, complex data from the start, a curriculum guides it, allowing it to build foundational knowledge first. For a robotic arm, this might mean:

- Phase 1: Learn to simply make contact with large, stationary objects.

- Phase 2: Learn to grasp these objects securely.

- Phase 3: Progress to grasping smaller, irregularly shaped objects.

- Phase 4: Introduce challenges like slippery surfaces, varied weights, and moving targets.

By mastering each stage in turn, the AI builds a more robust and generalizable working model of contact physics, momentum, and friction. Because that model predicts what *should* happen next, the system can extract meaningful patterns from sparse or noisy sensor data: a reading only has to confirm or contradict a prediction rather than describe the scene from scratch. This is a recurring theme in AI Research / Prototypes News, where the training regimen is often as innovative as the model architecture itself.
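The four-phase progression above can be sketched as a simple training loop that only advances once the learner passes the current phase. This is a toy illustration, not a real robotics training setup: the `attempt` function, the difficulty values, the skill update rule, and the pass threshold are all assumptions made up for the example.

```python
import random

# Hypothetical curriculum mirroring the four phases in the list above.
# The numeric difficulty values are illustrative assumptions.
PHASES = [
    ("contact with large stationary objects", 0.1),
    ("secure grasp", 0.3),
    ("small, irregular objects", 0.6),
    ("slippery, heavy, or moving targets", 0.9),
]


def attempt(skill, difficulty, rng):
    """Toy stand-in for one training episode.

    Success becomes more likely as skill grows relative to difficulty.
    """
    p_success = min(1.0, max(0.05, 0.5 + (skill - difficulty)))
    return rng.random() < p_success


def run_curriculum(pass_rate=0.8, window=50, max_episodes=20000, seed=0):
    """Train phase by phase; advance when the recent success rate is high."""
    rng = random.Random(seed)
    skill = 0.0
    history = []  # (phase name, episodes spent in that phase)
    for name, difficulty in PHASES:
        recent = []
        episodes = 0
        while episodes < max_episodes:
            ok = attempt(skill, difficulty, rng)
            episodes += 1
            if ok:
                skill += 0.002  # toy learning rule: improve on each success
            recent = (recent + [ok])[-window:]
            if len(recent) == window and sum(recent) / window >= pass_rate:
                break  # phase mastered, move to the next one
        history.append((name, episodes))
    return skill, history


if __name__ == "__main__":
    final_skill, log = run_curriculum()
    for phase, n in log:
        print(f"{phase}: {n} episodes")
```

The design point is the gate between phases: skill carried over from an easier phase means the learner starts each harder phase with a usable success rate instead of near-zero feedback.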

The Power of Simulation: Gaining a Million Years of Experience

Curriculum learning is supercharged by modern physics simulators. Platforms such as NVIDIA’s Isaac Sim and Google DeepMind’s MuJoCo provide physics-based virtual worlds (Isaac Sim also offers photorealistic rendering) in which robots can train around the clock. In these digital sandboxes, an AI can attempt a task millions of times, far more than is practical on physical hardware. It can experience a vast array of scenarios, object types, and lighting conditions, and it can fail safely and learn from every mistake. This massive repository of simulated experience is what allows an AI to become the “master chef.” It learns to connect the dots between different sensory inputs (a slight shadow change from a camera, a minor spike in motor torque, a change in acoustic feedback) to form a holistic understanding of an event, such as an object beginning to slip. This reduces the dependency on a single, high-fidelity sensor to explicitly report “slip detected.” The AI can anticipate the slip before it happens, a capability that is transforming fields from AI Personal Robots News to industrial automation.
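To make the idea of combining weak cues concrete, here is a minimal sketch that merges three normalized cues into a single slip probability with a logistic function. The cue names, weights, and bias are hypothetical and set by hand for illustration; in the approach the article describes, such a mapping would be learned from simulated trials rather than written down.

```python
import math

# Illustrative, hand-set parameters (assumptions). A trained model would
# learn these, or a far richer mapping, from simulated experience.
WEIGHTS = {
    "shadow_shift": 2.0,     # visual cue, normalized to 0..1
    "torque_spike": 2.5,     # motor cue, normalized to 0..1
    "acoustic_change": 1.5,  # audio cue, normalized to 0..1
}
BIAS = -3.0


def slip_probability(cues):
    """Combine normalized cue strengths into a probability of slip.

    `cues` maps cue names to values in 0..1; missing cues count as 0.
    """
    z = BIAS + sum(w * cues.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    print(slip_probability({"torque_spike": 1.0}))
    print(slip_probability(
        {"shadow_shift": 1.0, "torque_spike": 1.0, "acoustic_change": 1.0}
    ))
```

With these example weights, no single cue pushes the probability past 0.5, but all three together do, which is the behavior the paragraph describes: no individual sensor reports the slip, yet the combination makes it evident.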

Section 3: Widespread Impact Across the AI and IoT Ecosystem

The principle of prioritizing learning over raw sensor fidelity is not confined to robotics labs. Its implications are reshaping product design and capability across the entire landscape of consumer and industrial technology, making devices smarter, cheaper, and more accessible.

Democratizing Intelligence in IoT and Smart Devices

This paradigm shift is a powerful democratizing force. By relying on smarter software, manufacturers can build highly capable devices using more affordable, off-the-shelf hardware.

- Smart Home AI News: An advanced smart thermostat doesn’t need a complex array of temperature and humidity sensors in every corner of a house. Instead, by learning a household’s daily routines, observing how long the HVAC system runs to achieve a set temperature, and correlating it with a single thermostat reading, it can build a sophisticated thermal model of the home and operate more efficiently.

- Health & BioAI Gadgets News: The modern smartwatch is a prime example. It uses relatively simple sensors such as an accelerometer and a photoplethysmography (PPG) sensor. Yet, through AI algorithms trained on large medical datasets, it can flag signs of conditions such as atrial fibrillation or sleep apnea, patterns that are not visible in the raw sensor readings without that learned interpretation. This trend is central to the latest in Wearables News and AI Fitness Devices News.

- AI Edge Devices News: The intelligence-first approach is critical for edge computing. Simpler sensors generate less data, which requires less power and computational overhead to process. This allows for powerful AI capabilities to run directly on small, battery-powered devices, from AI Security Gadgets that can recognize a threat without the cloud, to AI Pet Tech that learns an animal’s behavior locally.
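The smart thermostat example above can be sketched as a small system-identification problem. The code below assumes a first-order thermal model, dT/dt = a·(T_out − T_in) + b·hvac_on, and fits `a` (heat leakage) and `b` (heating power) by least squares from a single indoor reading plus the HVAC on/off log. The model form, the function names, and the availability of an outdoor temperature series are assumptions for illustration, not a description of any particular product.

```python
def simulate(a, b, t_start, t_out, hvac_on, dt_hours=1.0):
    """Generate indoor temperatures from the assumed model (for demos)."""
    t_in = [t_start]
    for k in range(len(t_out) - 1):
        rate = a * (t_out[k] - t_in[k]) + b * hvac_on[k]
        t_in.append(t_in[k] + rate * dt_hours)
    return t_in


def fit_thermal_model(t_in, t_out, hvac_on, dt_hours=1.0):
    """Least-squares fit of dT/dt = a*(T_out - T_in) + b*hvac_on.

    Returns (a, b). Solves the 2x2 normal equations directly so the
    sketch needs no third-party libraries.
    """
    s11 = s12 = s22 = r1 = r2 = 0.0
    for k in range(len(t_in) - 1):
        x1 = t_out[k] - t_in[k]          # indoor/outdoor difference
        x2 = float(hvac_on[k])           # 1 while heating, else 0
        y = (t_in[k + 1] - t_in[k]) / dt_hours  # observed rate of change
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        r1 += x1 * y
        r2 += x2 * y
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("log does not separate leakage from heating")
    a = (r1 * s22 - r2 * s12) / det
    b = (s11 * r2 - s12 * r1) / det
    return a, b


if __name__ == "__main__":
    outdoor = [5.0 + (k % 7) for k in range(48)]
    heating = [1 if k % 3 == 0 else 0 for k in range(48)]
    indoor = simulate(0.1, 2.0, 18.0, outdoor, heating)
    print(fit_thermal_model(indoor, outdoor, heating))
```

Once `a` and `b` are known, the same model can be run forward to estimate how long the system must run to reach a setpoint, which is the kind of inference the bullet attributes to learning rather than to extra sensors.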

Redefining the Landscape for Complex Systems

For more complex systems, this shift is not just about cost savings; it’s about achieving new levels of performance and reliability.

- Autonomous Vehicles News: The debate between vision-first and LiDAR-heavy approaches in self-driving cars is a real-world manifestation of this principle. While many rely on expensive, high-fidelity LiDAR, companies like Tesla have championed the idea that an extremely powerful AI, trained on an enormous dataset of real-world driving, can interpret complex 3D scenes primarily from 2D camera feeds. This software-centric approach aims to solve the problem with intelligence rather than just better hardware.

- Drones & AI News: Modern drones can perform complex acrobatic maneuvers and navigate cluttered environments using simple IMUs and cameras. Their stability and autonomy come from sophisticated control algorithms and AI models that predict and adapt to aerodynamic forces in real time, something the raw sensor readings could not deliver on their own. This same principle applies to emerging AR/VR AI Gadgets, which rely on clever AI to create stable, immersive experiences from the noisy data of onboard motion sensors.
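As a small illustration of getting a stable estimate from noisy motion sensors, the sketch below uses a complementary filter, a classical (non-learned) technique commonly used with IMUs. It stands in for the more sophisticated learned models the article refers to and is not claimed to be what any specific drone or headset runs. The axis and sign conventions and the `alpha` value are assumptions.

```python
import math


def complementary_filter(gyro_rates, accels, dt, alpha=0.98, pitch0=0.0):
    """Estimate pitch (radians) by blending gyro and accelerometer data.

    gyro_rates: pitch rate samples in rad/s (smooth, but drifts if biased)
    accels:     (ax, az) samples in m/s^2 (noisy, but drift-free at rest)
    alpha:      weight on the integrated gyro estimate, between 0 and 1
    """
    pitch = pitch0
    estimates = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        gyro_pitch = pitch + rate * dt       # short-term: integrate gyro
        accel_pitch = math.atan2(ax, az)     # long-term: gravity direction
        pitch = alpha * gyro_pitch + (1.0 - alpha) * accel_pitch
        estimates.append(pitch)
    return estimates


if __name__ == "__main__":
    g, tilt, n = 9.81, 0.3, 2000
    biased_gyro = [0.01] * n  # stationary device, gyro reads a small bias
    accel = [(g * math.sin(tilt), g * math.cos(tilt))] * n
    print(complementary_filter(biased_gyro, accel, dt=0.01)[-1])
```

In the demo, integrating the biased gyro alone would drift steadily, while the blended estimate settles close to the true tilt. Neither sensor is trustworthy by itself; the estimate comes from how they are combined.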

Section 4: Finding the Balance: Recommendations and Future Directions

While the intelligence-first paradigm is transformative, it is not a declaration that hardware is irrelevant. The future lies not in choosing one over the other, but in finding the optimal synergy between them and pushing the boundaries of what integrated systems can achieve.

Best Practice: Co-Design of Hardware and Software

The most successful approach is not to simply use cheaper sensors, but to engage in a holistic co-design process. This involves engineers and data scientists working together from the outset to answer critical questions:

- What is the minimum sensory information the AI needs to solve this problem effectively?

- Can we use a simpler, more robust sensor if we invest more in the training data and model architecture?

- How can the hardware be designed to provide data that is most “informative” for a learning algorithm, even if it’s not the highest fidelity?

For instance, a robot vacuum might be designed with simpler navigation sensors but with a drive motor that reports wheel slippage and current draw, giving the navigation AI a secondary data source for inferring floor textures and obstacles.

The Future: Sensor Fusion and Abstract Reasoning

The next frontier is in developing AI that excels at sensor fusion—the art of combining data from multiple, disparate, and simple sensors to create an understanding that is far greater than the sum of its parts. An AI Monitoring Device for smart infrastructure might combine acoustic data from a microphone, vibration data from an accelerometer, and temperature data from a thermistor. A sudden change in vibration correlated with a specific sound could indicate a mechanical failure that no single sensor could reliably detect on its own. The role of ubiquitous connectivity like 5G becomes critical here, enabling real-time communication between distributed sensors and the AI models—whether on the edge or in the cloud—that perform this complex fusion and reasoning. This will further enhance everything from AI Audio / Speakers News, where devices understand context, to Smart City / Infrastructure AI Gadgets that predict maintenance needs before they arise.
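The monitoring example above, where a vibration change matters only when it coincides with an unusual sound, can be sketched with per-channel z-scores and a simple agreement rule. This is a deliberately simple stand-in for learned fusion: the channel names, baseline length, and thresholds are illustrative assumptions.

```python
from statistics import mean, stdev


def zscores(values, baseline_len):
    """Score every sample against the mean/stdev of an initial baseline."""
    base = values[:baseline_len]
    mu, sigma = mean(base), stdev(base)
    sigma = sigma if sigma > 0 else 1e-9  # avoid division by zero
    return [(v - mu) / sigma for v in values]


def fused_alerts(channels, baseline_len=20, z_thresh=3.0, min_channels=2):
    """Return (index, [channel names]) where several channels deviate at once.

    channels: dict mapping a channel name to a list of equally spaced samples.
    A single deviating channel is ignored; agreement triggers the alert.
    """
    scored = {name: zscores(vals, baseline_len)
              for name, vals in channels.items()}
    n = min(len(z) for z in scored.values())
    alerts = []
    for i in range(baseline_len, n):
        hot = [name for name, z in scored.items() if abs(z[i]) >= z_thresh]
        if len(hot) >= min_channels:
            alerts.append((i, hot))
    return alerts


if __name__ == "__main__":
    readings = {
        "vibration": [1.0, 1.1] * 10 + [3.0, 1.0, 3.0],
        "acoustic": [40.0, 41.0] * 10 + [40.0, 41.0, 50.0],
        "temperature": [20.0, 20.2] * 10 + [20.0, 20.2, 20.0],
    }
    print(fused_alerts(readings))
```

In the demo data, the vibration spike at index 20 is ignored because no other channel agrees, while the spike at index 22 coincides with an acoustic spike and is reported. Requiring agreement is what lets cheap, individually unreliable sensors produce a dependable signal.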

Conclusion: The Dawn of an Intelligence-First Era

The narrative of progress in AI, robotics, and the IoT is undergoing a fundamental revision. While the pursuit of better sensor hardware will always have its place, the center of gravity is shifting towards intelligence. The true revolution lies not in building machines that can sense the world perfectly, but in building machines that can understand it deeply, even with imperfect information. By focusing on sophisticated AI models, carefully designed learning curricula, and the vast potential of simulation, we are creating a new generation of devices that are more capable, adaptable, and accessible than ever before. The future will be defined not by the acuity of a device’s senses, but by the depth of its understanding: an understanding forged in data and refined through learning.