The Unseen Obstacle: Why Weather is a Critical Hurdle for Self-Driving Technology

The vision of a future dominated by autonomous vehicles is often painted in broad, sunny strokes: sleek, electric pods gliding seamlessly through urban landscapes, orchestrated by flawless artificial intelligence. This utopian image, heavily featured in marketing and tech demonstrations, represents the ultimate goal of a multi-trillion-dollar industry. However, as these advanced systems transition from controlled test tracks to the chaotic reality of public roads, they are encountering their most formidable and unpredictable adversary: Mother Nature. Recent events involving commercial robotaxi fleets encountering severe weather have brought this challenge into sharp focus. When torrential rain floods streets or a sudden blizzard blankets lane markings, the sophisticated sensor suites and decision-making algorithms that underpin self-driving cars are pushed to their absolute limits. This isn’t just a minor inconvenience; it’s a fundamental test of the technology’s safety, reliability, and readiness for widespread adoption. Recent autonomous vehicle news makes clear that conquering adverse weather is no longer a future problem but a present-day imperative for the entire industry.

Section 1: The Sensor Suite Under Siege: A Technical Breakdown

An autonomous vehicle’s ability to “see” and interpret the world is entirely dependent on its complex array of sensors. This perception system typically relies on a principle called sensor fusion, combining data from multiple sources to create a robust, 360-degree model of its environment. However, severe weather can systematically degrade the performance of each key sensor type, creating a cascade of challenges for the vehicle’s AI.
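To make the idea of sensor fusion concrete, here is a minimal sketch of one textbook approach, inverse-variance weighting, applied to two range readings of the same object. It is not how any particular AV stack works; the `RangeEstimate` type, the `fuse_ranges` function, and all variance values are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class RangeEstimate:
    sensor: str        # label only, e.g. "lidar" or "radar"
    distance_m: float  # estimated distance to the same object
    variance: float    # assumed noise variance; larger means less trusted


def fuse_ranges(estimates: Sequence[RangeEstimate]) -> Tuple[float, float]:
    """Inverse-variance weighted mean; returns (fused distance, fused variance)."""
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Illustrative numbers only: fog inflates the LiDAR variance, so the fused
# value shifts toward the radar reading and the fused variance grows.
clear = [RangeEstimate("lidar", 50.0, 0.01), RangeEstimate("radar", 51.0, 1.0)]
foggy = [RangeEstimate("lidar", 50.0, 4.0), RangeEstimate("radar", 51.0, 1.0)]
print(fuse_ranges(clear))
print(fuse_ranges(foggy))
```

The point of the sketch is the weighting: when weather makes one sensor noisier, its influence on the fused estimate drops automatically, but the overall uncertainty still rises.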

LiDAR’s Achilles’ Heel: Attenuation and Scattering

Light Detection and Ranging (LiDAR) is often considered the workhorse of AV perception. It works by emitting millions of laser pulses per second and measuring the time it takes for them to reflect off objects, creating a precise 3D point cloud of the surroundings. In clear conditions, it measures distance and shape more precisely than cameras or radar. However, in heavy rain, fog, or snow, these laser beams can be scattered or absorbed by airborne particles (water droplets, snowflakes). The resulting signal loss, known as attenuation, reduces the sensor’s effective range and can introduce significant “noise” into the point cloud, making it difficult for the AI to distinguish between a real obstacle and a phantom reading caused by a dense patch of fog. This challenge is a major focus in both AI vision and AI sensor development, as engineers race to develop more resilient hardware and filtering algorithms.
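A rough feel for how quickly fog eats into a laser return comes from the Beer-Lambert law, under which the signal decays exponentially along the path out and back. The sketch below pairs it with the Koschmieder relation (extinction is roughly 3.912 divided by visibility), which is defined for visible light and is used here only as an approximation for LiDAR wavelengths. The function names and the sample visibilities are illustrative assumptions.

```python
import math


def extinction_from_visibility(visibility_m: float) -> float:
    """Koschmieder relation: extinction coefficient (1/m) from visibility.

    Assumption: the visible-light relation is a usable stand-in for the
    LiDAR wavelength; real sensors need wavelength-specific data.
    """
    return 3.912 / visibility_m


def two_way_transmission(range_m: float, extinction_per_m: float) -> float:
    """Beer-Lambert fraction of signal surviving the trip out and back."""
    return math.exp(-2.0 * extinction_per_m * range_m)


# Same 50 m target, very different atmospheres (illustrative values).
for label, visibility_m in (("clear air", 20_000.0), ("dense fog", 100.0)):
    sigma = extinction_from_visibility(visibility_m)
    print(label, round(two_way_transmission(50.0, sigma), 4))
```

In this simplified model a target at half the visibility distance returns only about 2% of the clear-path signal, which illustrates why effective range collapses in fog.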

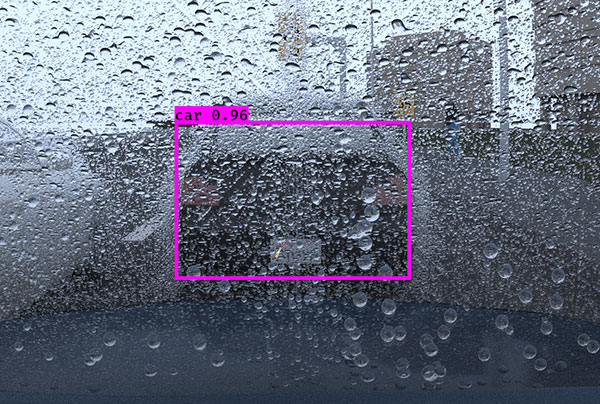

Cameras in the Crosshairs: Obscured Vision and Lost Cues

High-resolution cameras are essential for reading traffic signs, identifying traffic light colors, and interpreting the nuanced behavior of other road users. They are the primary sensor for understanding context. Yet, they are also the most susceptible to weather. Raindrops or mud on the lens can create distortions or completely block the view. Glare from a low sun reflecting off a wet road can blind the camera, while heavy snow can completely obscure vital lane markings and curbs. The AI’s machine learning models are trained on vast datasets, but if the input data from the camera is corrupted or incomplete due to weather, its ability to make safe decisions plummets. This is a critical area of research, with direct parallels in AI security cameras, where clear imaging in all conditions is paramount.

Radar’s Resilience and Its Limitations

Radar (Radio Detection and Ranging) is the veteran of the sensor suite. It sends out radio waves and is largely unaffected by rain, fog, or snow, making it exceptionally reliable for detecting the presence and velocity of other vehicles. However, its primary weakness is its low resolution. Radar can confidently tell an AV that a large object is 100 meters ahead and moving at 50 mph (about 80 km/h), but it struggles to classify *what* that object is. Is it a car, a large piece of debris, or a pedestrian in a heavy coat? This ambiguity means that while radar provides a crucial layer of safety, it cannot be relied upon alone for complex navigation and decision-making, forcing a dependency on the more weather-sensitive LiDAR and camera systems.

Section 2: Beyond Perception: The Dynamic and Algorithmic Challenges

Even if a vehicle’s sensors could magically pierce through a storm, the challenges are far from over. The AI’s driving policy—the software that makes decisions about steering, acceleration, and braking—faces an entirely new set of problems when the physical environment changes dramatically.

Modeling Unpredictable Physics: Traction and Control

One of the most difficult tasks for an AV is to accurately model vehicle dynamics on compromised surfaces. The system needs to understand and predict the risk of hydroplaning when encountering standing water or the loss of traction associated with black ice. This requires more than just perception; it requires a sophisticated internal physics model that can adapt in real-time. While a human driver can “feel” the car beginning to slip, an AV must infer this from subtle changes in wheel speed and vehicle orientation. This complex predictive capability is a frontier in AI, with parallels in fields like AI fitness devices, where sensors are used to model and predict human body movement.
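One simple signal behind that inference is the longitudinal slip ratio, which compares how fast a wheel's surface is moving with how fast the vehicle is actually travelling. The sketch below uses a common textbook definition; the function names, the `eps` guard, and the 0.2 threshold are assumptions for illustration, and a production system would combine far more signals than this.

```python
def slip_ratio(wheel_angular_speed_rad_s: float,
               wheel_radius_m: float,
               vehicle_speed_m_s: float,
               eps: float = 0.1) -> float:
    """Longitudinal slip: positive when the wheel spins, negative when it locks.

    Normalised by the larger of the two speeds so the result stays in [-1, 1];
    eps avoids dividing by zero when the vehicle is nearly stationary.
    """
    wheel_speed = wheel_angular_speed_rad_s * wheel_radius_m
    denom = max(abs(wheel_speed), abs(vehicle_speed_m_s), eps)
    return (wheel_speed - vehicle_speed_m_s) / denom


def traction_compromised(slip: float, threshold: float = 0.2) -> bool:
    """Flag slip beyond a hypothetical threshold (0.2 is illustrative only)."""
    return abs(slip) > threshold


# A wheel turning at 100 rad/s with a 0.3 m radius has a 30 m/s surface speed;
# if the car is only doing 20 m/s, the wheel is spinning on a slick surface.
spin = slip_ratio(100.0, 0.3, 20.0)
print(round(spin, 3), traction_compromised(spin))
```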

The “Minimal Risk Condition” Dilemma

Autonomous driving systems are generally designed with a fallback state known as a “Minimal Risk Condition” (MRC), often written as “Minimum Risk Condition.” When the vehicle determines it can no longer operate safely, perhaps because its sensors are too degraded or it encounters a situation beyond its programming, it is designed to bring itself to an MRC, which typically involves pulling over to the side of the road and stopping. This is a safety-critical feature. However, a severe storm presents a profound dilemma: what if the “safe” place to pull over is itself a hazard? Pulling onto a road shoulder during a flash flood could mean stopping in several inches of standing water, potentially damaging the vehicle’s electronics or stranding it. The AI must be able to assess the safety of its fallback maneuver, a complex ethical and logistical problem that developers are actively working to solve. This is a central theme in current autonomous vehicle news and in discussions around deployment safety.
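The dilemma can be framed as a small selection problem: screen candidate stopping spots for hazards first, then rank the survivors. The sketch below is a deliberately simplified illustration, not a description of any deployed system. The `StopCandidate` fields, the 5 cm water limit, and the ranking rule are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class StopCandidate:
    name: str
    distance_m: float       # how far the vehicle must travel to reach it
    water_depth_cm: float   # estimated standing water at the spot
    clear_of_traffic: bool  # True if stopping here leaves the travel lane


def choose_stop(candidates: Sequence[StopCandidate],
                max_water_depth_cm: float = 5.0) -> Optional[StopCandidate]:
    """Reject flooded spots, then prefer out-of-lane spots, then the nearest."""
    safe = [c for c in candidates if c.water_depth_cm <= max_water_depth_cm]
    if not safe:
        return None  # no acceptable spot; a real system would need to escalate
    return min(safe, key=lambda c: (not c.clear_of_traffic, c.distance_m))


options = [
    StopCandidate("road shoulder", 30.0, 12.0, True),   # closest, but flooded
    StopCandidate("parking lot", 200.0, 0.0, True),
    StopCandidate("stop in lane", 0.0, 1.0, False),
]
best = choose_stop(options)
print(best.name if best else "no safe stop found")
```

Here the nearby shoulder is rejected for its standing water, so the vehicle drives further to reach the parking lot rather than stopping in a live lane.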

Navigating Erratic Human Behavior

A final, crucial challenge is that bad weather makes human drivers less predictable. People may brake suddenly, swerve to avoid puddles, or drive with reduced visibility. The AI’s predictive models, largely trained on data from clear-weather driving, must adapt to this increased randomness. The vehicle needs to increase its following distance and operate with a higher degree of caution, anticipating the erratic behavior of the human-driven cars around it. This intersection of machine intelligence and human psychology is a fascinating area of study, relevant even in fields like AI companion devices, which also seek to understand and predict human intent.
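The case for a longer following distance follows from basic stopping-distance physics: distance covered during the reaction delay plus a braking distance that grows as road friction falls. The sketch below uses the standard constant-deceleration formula; the friction coefficients and the 0.5 s reaction time are illustrative assumptions, not measured values.

```python
G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance_m(speed_m_s: float,
                        friction_coeff: float,
                        reaction_time_s: float = 0.5) -> float:
    """Reaction distance plus braking distance v^2 / (2 * mu * g)."""
    reaction = speed_m_s * reaction_time_s
    braking = speed_m_s ** 2 / (2.0 * friction_coeff * G)
    return reaction + braking


# Illustrative friction values: 0.8 for dry asphalt, 0.4 for a wet surface.
dry = stopping_distance_m(20.0, 0.8)
wet = stopping_distance_m(20.0, 0.4)
print(round(dry, 1), round(wet, 1))
```

Halving the available friction doubles the braking portion of the distance, which is why a gap that is comfortable in the dry can be too short in the rain.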

Section 3: Engineering the Solution: A Multi-Pronged Approach

The autonomous vehicle industry is not standing still in the face of these challenges. A combination of hardware innovation, software advancements, and strategic operational changes is being deployed to weather-proof the future of transportation.

Hardware Fortification and Innovation

On the hardware front, companies are developing “all-weather” sensor suites. This includes integrated heating elements to melt ice and snow off sensors, and miniature, high-pressure washer and wiper systems to keep camera and LiDAR lenses clear. Furthermore, a new wave of sensor technology is being explored. Thermal cameras, for instance, can detect the heat signatures of pedestrians and other vehicles, making them effective in fog or darkness. Advances in radar technology are leading to higher-resolution “imaging radar” that can better classify objects, bridging the gap between traditional radar and LiDAR. These developments feature prominently in coverage of AI research and prototypes and are critical for the next generation of AVs.

Smarter Software and V2X Communication

Software is arguably the most important battleground. AI engineers are developing sophisticated algorithms that can filter out weather-related noise from sensor data, effectively teaching the AI to “see” through the snow or rain. Machine learning models are being trained specifically on vast datasets of adverse weather driving, allowing them to learn the unique patterns and risks associated with these conditions. One of the most promising long-term solutions is Vehicle-to-Everything (V2X) communication. This technology allows vehicles to communicate directly with each other and with smart infrastructure (like traffic lights and road sensors). A car that hits a patch of ice could instantly warn all the vehicles behind it, a capability that would be transformative for safety. This concept is a cornerstone of smart city and connected infrastructure planning.
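As a flavor of what weather noise filtering can look like, the sketch below implements a basic radius outlier filter: returns from solid objects tend to arrive in dense clusters, while snowflakes and raindrops tend to produce isolated points. This is a generic point-cloud technique shown in plain Python for clarity, not the method used by any specific vendor. The radius and neighbor count are assumptions, and the brute-force search is only suitable for tiny inputs.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def radius_outlier_filter(points: Sequence[Point],
                          radius_m: float = 0.5,
                          min_neighbors: int = 2) -> List[Point]:
    """Keep points with at least min_neighbors other points within radius_m.

    Brute force O(n^2); real pipelines use spatial indexes such as k-d trees.
    """
    kept = []
    for i, p in enumerate(points):
        neighbors = sum(
            1 for j, q in enumerate(points)
            if i != j and math.dist(p, q) <= radius_m
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept


cloud = [
    (10.0, 0.0, 0.0), (10.1, 0.0, 0.0), (10.0, 0.1, 0.0), (10.1, 0.1, 0.1),
    (3.0, 1.0, 2.0),  # isolated return, e.g. a single snowflake
]
print(radius_outlier_filter(cloud))
```

The trade-off is worth noting: a filter that is too aggressive can also discard sparse returns from small or distant real objects, so thresholds have to be tuned carefully.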

Operational Design Domains (ODDs)

A crucial best practice in the industry is the use of Operational Design Domains (ODDs). An ODD is a specific set of conditions under which an AV is designed to operate safely. This includes geographic boundaries, road types, lighting conditions, and, critically, weather. Rather than attempting to build a car that can drive through a hurricane from day one, companies define a limited ODD (e.g., “downtown Phoenix in dry conditions during daylight hours”). When conditions exceed the ODD—such as during a major storm—the entire fleet can be temporarily paused remotely. This is not a sign of failure but a mark of a mature, safety-first approach to deployment.
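In software terms, an ODD can be thought of as a set of limits that current conditions are checked against. The sketch below shows that idea in its simplest form. The field names, units, and thresholds are hypothetical, and real ODD definitions cover many more dimensions, such as geography and road type.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Conditions:
    rain_mm_per_h: float
    visibility_m: float
    snow_on_road: bool
    is_daylight: bool


@dataclass(frozen=True)
class OperationalDesignDomain:
    max_rain_mm_per_h: float
    min_visibility_m: float
    allows_snow: bool
    daylight_only: bool

    def violations(self, c: Conditions) -> List[str]:
        """Return every way the conditions fall outside this ODD."""
        reasons = []
        if c.rain_mm_per_h > self.max_rain_mm_per_h:
            reasons.append("rain rate above limit")
        if c.visibility_m < self.min_visibility_m:
            reasons.append("visibility below limit")
        if c.snow_on_road and not self.allows_snow:
            reasons.append("snow on road")
        if self.daylight_only and not c.is_daylight:
            reasons.append("outside daylight hours")
        return reasons


# Hypothetical limits for a dry-weather, daytime deployment.
odd = OperationalDesignDomain(2.0, 500.0, False, True)
fair = Conditions(0.0, 10_000.0, False, True)
storm = Conditions(30.0, 150.0, False, True)
for name, cond in (("fair", fair), ("storm", storm)):
    problems = odd.violations(cond)
    print(name, "OK to operate" if not problems else f"pause fleet: {problems}")
```

Returning the list of reasons, rather than a bare yes or no, makes it easier for a fleet operator to see why service was paused and when it might safely resume.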

Section 4: The Road Ahead: Recommendations and Industry Implications

The path to creating truly all-weather autonomous vehicles is long, but the current challenges offer clear insights and a roadmap for the future.

Tips and Considerations for the Industry

- Embrace Redundancy: True sensor redundancy is key. This means not just having multiple sensors of the same type, but multiple *types* of sensors (e.g., cameras, LiDAR, radar, thermal) whose strengths and weaknesses offset each other.

- Prioritize Robust MRCs: The logic for entering a Minimal Risk Condition needs to be more sophisticated, capable of evaluating the safety of the potential stopping location before committing to it.

- Invest in Simulation and Testing: Companies must continue to invest heavily in virtual simulation environments that can replicate a wide variety of adverse weather scenarios, supplementing real-world testing in a safe, controlled manner.

- Collaborate on Infrastructure: The AV industry should work closely with municipalities on smart city infrastructure, pushing for the adoption of V2X technology that benefits all road users.

For the public and regulators, it is vital to maintain realistic expectations. The cautious approach of pausing operations during severe weather should be seen as a responsible safety measure. The evolution of this technology, much like that of AI-powered phones or gaming hardware, will be iterative. Each challenge encountered and overcome in the real world provides invaluable data that makes the entire system safer and more capable for the future.

Conclusion: A Sign of Maturity, Not Failure

The struggle of autonomous vehicles in adverse weather is not an indictment of the technology’s potential but rather a clear-eyed look at the immense complexity of the task. Incidents where robotaxis pause or pull over during a storm are not failures; they are demonstrations of a safety-first system working as designed within its current limitations. The journey to full, all-conditions autonomy requires a multi-faceted solution combining resilient hardware, intelligent software, smart infrastructure, and carefully planned operational strategies. As developers continue to gather data from these edge cases, they are systematically teaching their machines to navigate not just the perfect, sunny days, but the messy, unpredictable reality of the world we all live in. Overcoming the challenge of weather will be the true test that ultimately unlocks the transformative safety and efficiency benefits of a fully autonomous future.