The Unheard Revolution: AI’s Newfound Ability to Separate Voices from Noise

Imagine trying to transcribe a crucial business meeting from a recording where three people were talking over each other. Or picture editing a family video, desperately trying to isolate your child’s first words from the blare of a nearby television. For decades, this has been the audio engineer’s nightmare, a challenge known as the “cocktail party problem.” The human brain is remarkably adept at focusing on a single voice in a sea of noise, but replicating this ability in machines has been a monumental task. That is starting to change. A new wave of artificial intelligence can take a single, mixed audio recording and separate each speaker’s voice into its own distinct track. This breakthrough in AI Audio / Speakers News is not just an academic curiosity; it’s a foundational technology poised to reshape everything from our smart home devices to professional content creation and accessibility tools.

This article delves into the sophisticated world of AI-powered speaker separation. We will explore the deep learning techniques that make it possible, break down the mechanics of how a jumbled conversation is untangled, and examine the vast array of real-world applications already taking shape. From enhancing the capabilities of our digital assistants to providing powerful new tools for creators and improving the lives of those with hearing impairments, the ability to isolate sound is setting the stage for a more intelligent and personalized auditory future.

The Science Behind the Silence: Understanding the Core Technology

For years, the primary solution to isolating audio sources involved hardware. Engineers would place multiple microphones to capture sound from different directions, an arrangement known as a microphone array, and combine the signals with techniques such as beamforming. While effective in controlled environments, this approach is impractical for most real-world scenarios, from a spontaneous smartphone recording to archived audio. The true revolution lies in solving the problem in software, specifically using AI to perform “monaural source separation”: unmixing sounds from a single channel. This leap is a significant highlight in recent AI Research / Prototypes News, moving from theoretical models to practical applications.

From Signal Processing to Deep Learning

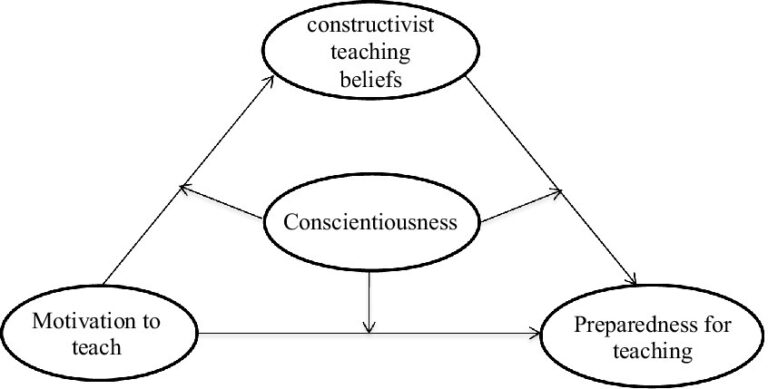

Traditional digital signal processing (DSP) techniques struggled with the complexity of overlapping human voices. Voices share similar frequency ranges, making them incredibly difficult to pull apart using simple filters. The breakthrough came with the application of deep neural networks (DNNs). These complex, multi-layered algorithms, inspired by the human brain, are trained on massive datasets. In this case, an AI model is fed countless hours of mixed audio (the “input”) along with the corresponding clean, isolated voice tracks (the “ground truth”). Through this process, the network learns the subtle, unique characteristics of human speech—the specific timbre, pitch, cadence, and harmonics that constitute a person’s unique “voiceprint.” It learns to recognize these patterns even when they are buried in a cacophony of other sounds.
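The training setup described above, mixed audio as input with the clean tracks as ground truth, can be sketched in a few lines. This is a minimal illustration, not any particular research group's pipeline: the function name `make_training_pair`, the gain range, and the use of sine tones as stand-ins for real voice recordings are all assumptions made for the example.

```python
import numpy as np

def make_training_pair(sources, rng, gain_db_range=(-5.0, 5.0)):
    """Build one (mixture, targets) example from clean single-speaker waveforms.

    Hypothetical helper: each source gets a random gain, then all sources
    are summed into a single mono mixture.
    """
    sources = [np.asarray(s, dtype=np.float64) for s in sources]
    length = min(len(s) for s in sources)          # trim to the shortest clip
    scaled = []
    for s in sources:
        gain_db = rng.uniform(*gain_db_range)      # random loudness per speaker
        scaled.append(s[:length] * 10.0 ** (gain_db / 20.0))
    targets = np.stack(scaled)                     # ground truth: (n_speakers, length)
    mixture = targets.sum(axis=0)                  # network input: (length,)
    return mixture, targets

# Sine tones stand in for two speakers in this toy example.
rng = np.random.default_rng(0)
t = np.arange(16000) / 16000.0
speaker_a = np.sin(2 * np.pi * 220 * t)
speaker_b = np.sin(2 * np.pi * 330 * t[:12000])
mixture, targets = make_training_pair([speaker_a, speaker_b], rng)
print(mixture.shape, targets.shape)
```

Because the mixture is built from known clean tracks, the correct answer for every training example is available by construction, which is what makes supervised training possible.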

How AI Models Learn to “Listen”

At the heart of this process is the spectrogram, a visual representation of sound that plots frequency against time, with color or intensity representing amplitude. A neural network can treat a spectrogram much like an image. The AI is trained to look at the “image” of a mixed conversation and identify the distinct patterns belonging to each speaker. It then learns to generate a “mask” for each voice: a filter that, when applied to the original spectrogram, preserves the parts belonging to one speaker while suppressing the rest. Treating audio as an image-like spectrogram has allowed researchers to borrow powerful architectures from computer vision, although some recent models operate directly on the raw waveform instead. As these models become more efficient, they are being deployed on smaller hardware, a key topic in AI Edge Devices News, enabling on-device processing without needing to send data to the cloud.

Breaking Down the Process: From Mixed Audio to Clean Tracks

The journey from a single, messy audio file to multiple, clean, isolated voice tracks involves a sophisticated, multi-step pipeline. While the exact architecture can vary, the fundamental process follows a logical progression of analysis, separation, and reconstruction. Understanding this workflow reveals the elegance and power of the underlying AI.

Step 1: Pre-processing and Feature Extraction

The process begins by converting the raw audio waveform into a format more suitable for the neural network. This is typically done with the Short-Time Fourier Transform (STFT), which slices the signal into short, overlapping windows and computes the frequency content of each one; the magnitudes of the result form the spectrogram mentioned earlier. This conversion is crucial because it breaks the sound into its constituent frequencies over time, making the overlapping patterns of different speakers more apparent and easier for the AI to analyze. This feature extraction step is the foundation upon which the entire separation process is built.
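To make the feature extraction step concrete, here is a minimal NumPy sketch of a magnitude spectrogram computed with an STFT. The frame length of 512 samples, the hop of 128 samples, the Hann window, and the 16 kHz sample rate are illustrative assumptions, not values taken from any specific system.

```python
import numpy as np

def magnitude_spectrogram(signal, frame_len=512, hop=128):
    """Return |STFT| with shape (frequency_bins, time_frames)."""
    signal = np.asarray(signal, dtype=np.float64)
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Row i of idx holds the sample indices of the i-th overlapping frame.
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    window = np.hanning(frame_len)                 # taper each frame to reduce leakage
    spectrum = np.fft.rfft(signal[idx] * window, axis=1)
    return np.abs(spectrum).T

sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)                # one second of a 1 kHz test tone

spec = magnitude_spectrogram(tone)
peak_bin = int(spec[:, 0].argmax())
print(spec.shape)                                  # (257, 122)
print(peak_bin * sample_rate / 512)                # frequency of the strongest bin, in Hz
```

Each column of `spec` is one short slice of time and each row is one frequency band, which is the grid of time-frequency bins the separation model works on.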

Step 2: Speaker Identification and Masking

This is where the trained deep learning model does its heavy lifting. The model ingests the spectrogram and performs its core task: identifying which time-frequency “bins” (the pixels of the spectrogram) belong to which speaker. It effectively “paints by numbers,” assigning each tiny component of the sound to a specific source. Based on this analysis, it generates an individual mask for each identified speaker. An “ideal binary mask” would be a simple on/off filter that hands each bin entirely to the loudest speaker, but more advanced models use a “soft mask” that assigns each bin a value between 0 and 1, allowing the energy in a bin to be shared when voices overlap there. This advanced capability is critical for creating natural-sounding separations and is a major focus of development in AI Assistants News, as digital assistants need to parse complex, overlapping commands.
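The difference between a binary mask and a soft mask is easiest to see on a tiny example. The sketch below computes both from known per-speaker magnitudes; in a real system a trained network would have to estimate the masks from the mixture alone, so treat these “oracle” versions as an illustration of the target. The function names are hypothetical.

```python
import numpy as np

def ideal_binary_masks(source_mags):
    """One on/off mask per source: each bin goes entirely to the loudest source."""
    mags = np.asarray(source_mags, dtype=np.float64)      # (n_sources, freq, time)
    winner = mags.argmax(axis=0)                          # index of loudest source per bin
    source_ids = np.arange(mags.shape[0])[:, None, None]
    return (source_ids == winner[None]).astype(np.float64)

def ratio_masks(source_mags, eps=1e-8):
    """One soft mask per source: each bin is shared in proportion to magnitude."""
    mags = np.asarray(source_mags, dtype=np.float64)
    return mags / (mags.sum(axis=0, keepdims=True) + eps)

# Two sources, one frequency bin, two time frames.
# Frame 0: speaker 0 dominates. Frame 1: both speakers are equally loud.
mags = np.array([[[3.0, 1.0]],
                 [[1.0, 1.0]]])

print(ideal_binary_masks(mags)[0])   # [[1. 1.]]  (ties go to the first source)
print(ratio_masks(mags)[0])          # approximately [[0.75 0.5]]
```

In the second frame the binary mask has to give the whole bin to one speaker, while the soft mask splits it evenly, which is why soft masks tend to handle overlapping speech more gracefully.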

Step 3: Reconstruction and Output

Once the individual masks are generated, each one is multiplied element-wise with the original mixed spectrogram. This operation suppresses the other sounds, leaving an estimate of a single speaker’s spectrogram. In the simplest setups the mask scales only the magnitudes, and the phase of the mixture is reused as is. The process is repeated for every speaker the model identified. Finally, these filtered spectrograms are converted back into audio waveforms using an inverse STFT. The result is a set of synchronized audio files, each containing the isolated voice of one person from the original recording. This output can then be used for transcription, editing, or analysis, forming the basis for countless applications.
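The three steps can be strung together in a small end-to-end sketch: STFT, masking, and inverse STFT with overlap-add. Several things here are assumptions made for illustration: two sine tones stand in for speakers, the masks are “oracle” ratio masks computed from the known sources rather than predicted by a network, and the frame and hop sizes are arbitrary. The masked spectrogram keeps the mixture’s phase.

```python
import numpy as np

FRAME, HOP = 512, 128
WINDOW = 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(FRAME) / FRAME)  # periodic Hann

def stft(x):
    """Complex STFT with shape (frequency_bins, time_frames)."""
    n_frames = 1 + (len(x) - FRAME) // HOP
    idx = np.arange(FRAME)[None, :] + HOP * np.arange(n_frames)[:, None]
    return np.fft.rfft(x[idx] * WINDOW, axis=1).T

def istft(spec, length):
    """Inverse STFT by windowed overlap-add, normalized by the summed window energy."""
    frames = np.fft.irfft(spec.T, n=FRAME, axis=1) * WINDOW
    out = np.zeros(length)
    norm = np.zeros(length)
    for i, frame in enumerate(frames):
        start = i * HOP
        out[start:start + FRAME] += frame
        norm[start:start + FRAME] += WINDOW ** 2
    return out / np.maximum(norm, 1e-8)

def separate(mixture, masks):
    """Apply one mask per speaker to the mixture spectrogram and resynthesize audio."""
    mix_spec = stft(mixture)
    return np.stack([istft(mask * mix_spec, len(mixture)) for mask in masks])

# Toy "speakers": two tones that are well separated in frequency.
sr = 16000
t = np.arange(sr) / sr
s1 = np.sin(2 * np.pi * 440 * t)
s2 = np.sin(2 * np.pi * 2000 * t)
mixture = s1 + s2

# Oracle ratio masks from the known sources (a model would estimate these).
mags = np.abs(np.stack([stft(s1), stft(s2)]))
masks = mags / (mags.sum(axis=0, keepdims=True) + 1e-8)

estimates = separate(mixture, masks)
inner = slice(FRAME, -FRAME)          # ignore edge samples with little window coverage
print(np.max(np.abs(estimates[0, inner] - s1[inner])))
print(np.max(np.abs(estimates[1, inner] - s2[inner])))
```

The two printed errors are small here because the tones barely overlap in frequency. Real voices overlap far more, which is exactly where the quality of the predicted masks determines how clean the separated tracks sound.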

Beyond the Lab: Where AI Speaker Separation is Making a Difference

The implications of this technology extend far beyond the research lab, promising to enhance a vast ecosystem of smart devices and professional tools. Its ability to bring clarity to chaos is a game-changer across numerous industries.

Enhancing Communication and Productivity

In the corporate world, this technology is a massive boon. Automated meeting transcription services can now not only convert speech to text but also accurately attribute every line to the correct person, transforming messy recordings into structured, actionable minutes. This is a central theme in AI Office Devices News. Similarly, call centers can analyze customer and agent interactions separately for better quality assurance. For journalists and content creators, it’s a revolutionary tool. The latest AI Tools for Creators News highlights how podcasters can now easily fix audio where a host and guest speak over each other, and documentary filmmakers can clean up field interviews recorded in noisy, unpredictable environments.

Revolutionizing Smart Devices and Accessibility

The impact on consumer technology will be profound. The latest Smart Home AI News points towards a future where devices like smart speakers can distinguish between different family members, offering personalized responses, calendars, and music playlists. An AI Companion Device could attune itself to its primary user’s voice, ignoring stray commands from a television or other people in the room. This technology is also at the forefront of AI for Accessibility Devices News. Future hearing aids could dynamically identify the voice a user is facing, amplifying it while actively suppressing other conversations and background noise—a true real-world solution to the cocktail party problem. This innovation is a recurring topic in Health & BioAI Gadgets News and Wearables News, with smart earbuds promising similar capabilities.

Emerging Frontiers and Niche Applications

The applications are incredibly diverse. In Autonomous Vehicles News, systems are being developed to distinguish commands from the driver versus conversations from passengers. The latest Robotics News discusses how AI Personal Robots can be trained to respond only to their owners. Even niche areas are seeing benefits. AI in Gaming Gadgets News reports on software that can isolate teammates’ voices from chaotic in-game sound effects for crystal-clear communication. In security, this technology can enhance voice biometrics, a key topic in AI Security Gadgets News. Looking further, one can imagine applications in:

- AI Phone & Mobile Devices News: Superior noise cancellation during calls by isolating the user’s voice.

- AI Toys & Entertainment Gadgets News: Toys that bond with and respond only to a specific child’s voice.

- AI in Sports Gadgets News: Isolating a coach’s instructions from stadium noise for in-ear player devices.

- AI for Travel Gadgets News: Translation devices that can focus on a single speaker in a crowded train station.

This capability, often paired with computer vision as seen in AI-enabled Cameras & Vision News, creates a more context-aware and responsive technological ecosystem, driven by advancements across AI Sensors & IoT News.

Navigating the Challenges and Looking Ahead

Despite its rapid progress, AI speaker separation is not a solved problem. Several technical and ethical hurdles remain on the path to ubiquitous, flawless performance. Addressing these challenges will define the next generation of audio intelligence.

Current Limitations and Hurdles

The primary challenge is computational cost. The deep learning models required are resource-intensive, making real-time, on-device processing difficult for low-power hardware like wearables or basic smart speakers. Furthermore, while performance has improved dramatically, the models can still struggle in extremely noisy environments or when many people are speaking simultaneously. Another hurdle is handling an unknown number of speakers; while newer models are getting better at estimating the speaker count on their own, many still perform best when the number of sources is fixed in advance. Overcoming these limitations is a key goal for those following AI Edge Devices News.

Ethical Considerations and Privacy

With great power comes great responsibility. The ability to isolate an individual’s voice from a public or private recording raises significant privacy questions. Could this technology be used to eavesdrop on specific people in public spaces? Who owns the “voiceprint” data used to train these models, and how is it secured? As the technology becomes more widespread, clear regulations and ethical guidelines will be necessary to prevent misuse and ensure user trust. These conversations are critical as the technology integrates into devices like Smart Glasses and future AR/VR AI Gadgets.

The Future is Clear (and Separated)

The trajectory for this technology is clear: models will become faster, more accurate, and more efficient, enabling real-time separation on a wide range of devices. The next frontier, as hinted at in Neural Interfaces News, is the seamless integration with other AI systems. Imagine a tool that not only separates who said what but also analyzes the sentiment of each speaker, summarizes their key points, and translates their speech in real-time. This holistic understanding of conversations will be the true legacy of solving the cocktail party problem, making our digital world more perceptive and helpful than ever before.

Conclusion: A New Era of Auditory Intelligence

AI-powered speaker separation represents a major leap forward in our ability to process and understand the complex auditory world around us. By making real headway on the “cocktail party problem” in software, researchers have unlocked a foundational technology with far-reaching implications. From creating more intelligent and personalized AI Assistants and revolutionizing media production to building transformative accessibility tools, the applications are as vast as they are impactful. Challenges related to computational efficiency, crowded or noisy recordings, and ethical use remain, but the pace of innovation is rapid. This technology is no longer a distant concept from a research paper; it is actively being integrated into the products and services we use every day, promising a future where our devices don’t just hear sound but listen with understanding, clarity, and intelligence.