From Passive Observers to Active Participants: The New Era of AI-Powered Vision

For years, the smart home camera has been a digital sentinel: a passive observer perched on a wall or doorbell, dutifully recording motion and sending alerts. Its intelligence was rudimentary, evolving from simple pixel-change detection to basic object recognition that could tell a person from a package or a car from a cat. That was a significant leap forward, but it was roughly the limit of what single-purpose detection models could deliver. Today we are on the cusp of a much larger shift, driven by the integration of multimodal Large Language Models (LLMs) into smart home ecosystems. This isn’t an incremental update; it’s a fundamental reimagining of the role these devices play in our lives. Cameras are no longer just for security: they are becoming context-aware, conversational partners that can understand, summarize, and act on what they see in ways previously confined to science fiction. This article explores that shift, dissecting the technology, its implications, and the future it points to for everything from home security to personal robotics.

From Pixel Changes to Proactive Intelligence: The AI Camera Evolution

The journey of the smart camera is a story of escalating intelligence. Understanding this evolution is key to appreciating the leap we are currently witnessing. The latest advances in AI cameras aren’t just about better resolution; they’re about a deeper cognitive ability that changes the user experience entirely.

The Old Guard: Motion Detection and Basic Object Recognition

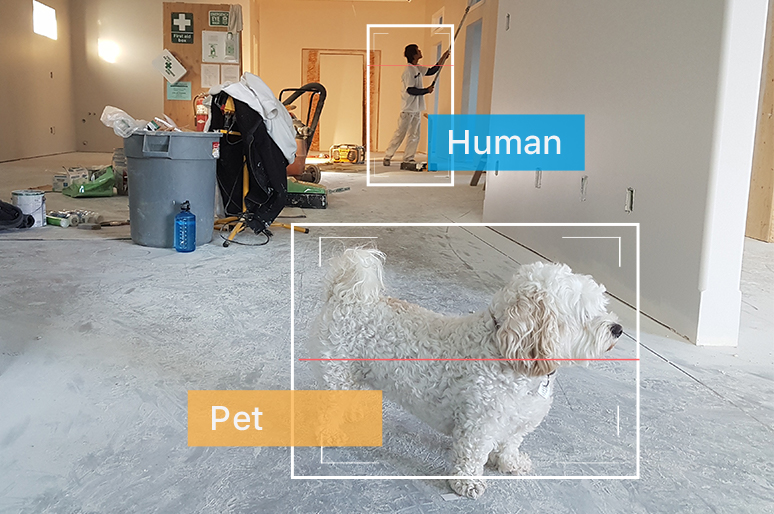

Early smart cameras operated on a simple principle: if enough pixels in the frame change, trigger an alert. This led to the infamous “notification fatigue,” where every swaying tree branch, passing shadow, or scurrying squirrel resulted in a phone buzz. The first wave of AI integration sought to solve this. Using machine learning models, cameras learned to identify specific objects. This was a welcome improvement, filtering out noise and providing more relevant alerts like “Person detected” or “Package delivered.” This generation of devices dominated AI security gadgets for years, establishing a baseline for what consumers expect from home monitoring. However, these systems lacked true understanding. They could tell you what they saw, but not the context, the sequence of events, or the relationship between objects.
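
To make the limitation concrete, here is a minimal sketch of classic pixel-change motion detection in Python. It assumes grayscale frames represented as lists of rows of 0-255 integers; the names `changed_fraction` and `motion_detected` and the default thresholds are illustrative, not taken from any product. Nothing in it knows what changed, which is exactly why a swaying branch and a person at the door trigger the same alert.

```python
def changed_fraction(prev, curr, pixel_delta=25):
    """Fraction of pixels whose grayscale value changed by more than pixel_delta."""
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_delta:
                changed += 1
    return changed / total if total else 0.0


def motion_detected(prev, curr, pixel_delta=25, min_changed_fraction=0.01):
    """Classic trigger: alert whenever enough of the frame changed, whatever the cause."""
    return changed_fraction(prev, curr, pixel_delta) >= min_changed_fraction


# A static 10x10 scene, then the same scene with a branch moving across five pixels.
still = [[100] * 10 for _ in range(10)]
branch = [row[:] for row in still]
for x in range(5):
    branch[0][x] = 180

print(motion_detected(still, still))   # no change, no alert
print(motion_detected(still, branch))  # the branch alone fires an alert
```

With these defaults, five changed pixels in a 10x10 frame (5% of the image) are enough to fire an alert, regardless of what caused them.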

The New Era: Multimodal AI and Conversational Interfaces

The current revolution is driven by the integration of sophisticated LLMs, the same technology powering advanced AI assistants. This marks a transition from simple recognition to genuine comprehension. The key difference lies in two core concepts: multimodality and conversational interaction.

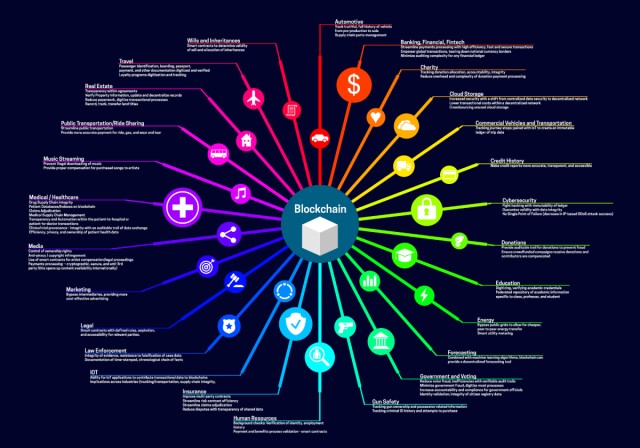

Multimodality means the AI can process and synthesize information from multiple sources simultaneously: video streams, audio feeds, and data from other connected sensors and IoT devices. It doesn’t just see a person; it hears them speak, recognizes their voice, and understands their interaction with the environment. This holistic understanding is what elevates the device from a simple sensor to an intelligent agent, and coverage of AI-enabled cameras increasingly frames it as one of the most significant developments in consumer tech this decade. It also changes what an AI assistant is: the intelligence moves from a disembodied voice to an entity with eyes and ears in your home.
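
As a rough illustration of combining sources, the sketch below groups timestamped observations from different modalities into a single event when they occur close together in time. This is a deliberate simplification under stated assumptions: multimodal LLMs fuse information inside the model rather than through a rule like this, and `Observation`, `FusedEvent`, `fuse`, and the 5-second window are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    t: float        # seconds since midnight (assumed shared clock across devices)
    modality: str   # e.g. "video", "audio", "sensor"
    detail: str     # e.g. "person at front door"


@dataclass
class FusedEvent:
    start: float
    observations: list = field(default_factory=list)

    def modalities(self):
        """Which kinds of input contributed to this event."""
        return sorted({o.modality for o in self.observations})


def fuse(observations, window=5.0):
    """Group observations that arrive within `window` seconds of the previous one."""
    events = []
    for obs in sorted(observations, key=lambda o: o.t):
        if events and obs.t - events[-1].observations[-1].t <= window:
            events[-1].observations.append(obs)
        else:
            events.append(FusedEvent(start=obs.t, observations=[obs]))
    return events


obs = [
    Observation(100.0, "video", "person at front door"),
    Observation(101.5, "audio", "doorbell chime"),
    Observation(103.0, "sensor", "front door opened"),
    Observation(400.0, "video", "cat on porch"),
]
for event in fuse(obs):
    print(event.start, event.modalities())
```

The first three observations become one “visitor arrived” event seen, heard, and sensed together; the cat minutes later is a separate event.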

The Technical Architecture of a Next-Generation Smart Home

This newfound intelligence isn’t magic; it’s the result of a sophisticated technical architecture that balances on-device processing with the immense power of the cloud. This hybrid approach is crucial for delivering a responsive, private, and deeply capable experience.

The Role of Edge vs. Cloud Computing

A central theme in recent edge AI developments is the growing importance of local processing. For a smart camera, this means that time-sensitive and privacy-critical tasks happen directly on the device. Initial motion detection, person identification, and familiar face recognition can be handled by a dedicated neural processing unit (NPU) on the camera itself. This keeps alert latency to a minimum and means that raw video of everyday moments doesn’t need to be constantly streamed to the cloud.

However, the true power of an LLM requires computational resources far beyond what a small home device can offer. This is where the cloud comes in. When a user asks a complex question or requests a summary, the relevant, pre-processed data is securely sent to the cloud for analysis by the full AI model. This hybrid model offers the best of both worlds: the speed and privacy of the edge with the profound intelligence of the cloud.
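
The edge/cloud split can be sketched as a small router. It assumes a hypothetical set of on-device task names in `LOCAL_TASKS` and a hypothetical `send_to_cloud` callable standing in for a vendor’s API. Time-sensitive tasks are answered on the camera; everything else sends only compact, pre-processed event metadata to the cloud, never raw frames.

```python
from dataclasses import dataclass, asdict


@dataclass
class EventMetadata:
    """Compact description produced on the camera's NPU (assumed format)."""
    timestamp: str
    label: str
    zone: str


# Hypothetical task names that the camera can handle without the cloud.
LOCAL_TASKS = {"motion_alert", "person_alert", "familiar_face"}


def route_request(task, events, send_to_cloud):
    """Answer time-sensitive tasks locally; otherwise ship metadata, not video."""
    if task in LOCAL_TASKS:
        # Nothing leaves the home network for these tasks.
        return {"where": "edge", "count": len(events)}
    payload = [asdict(e) for e in events]
    return {"where": "cloud", "answer": send_to_cloud(task, payload)}


def fake_cloud(task, payload):
    """Stand-in for a remote LLM call; a real one would be a network request."""
    return f"{task}: {len(payload)} event(s)"


events = [EventMetadata("22:15", "vehicle", "driveway")]
print(route_request("person_alert", events, fake_cloud))
print(route_request("summarize", events, fake_cloud))
```

Passing the cloud call in as a parameter keeps the routing policy testable and makes it obvious exactly what data crosses the network boundary.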

Semantic Search and Event Summarization

The most transformative feature enabled by this architecture is semantic search. Gone are the days of endlessly scrubbing through a timeline to find a specific moment. Users can now query their video history using natural, conversational language.

- Real-World Scenario 1 (Security): Instead of looking for a package delivery notification, you can ask, “Show me when the delivery driver in the blue shirt dropped off a large box yesterday.” The AI understands the concepts of “delivery driver,” “blue shirt,” and “large box” and can pinpoint the exact clip.

- Real-World Scenario 2 (Daily Life): You can ask, “Where did the kids leave the red soccer ball in the backyard?” The AI can scan recent footage to find the last time the object was seen and in what location.
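
Here is a minimal sketch of the ranking step behind semantic search, assuming each clip already has a text caption produced by a vision model. Real systems use learned embeddings so that “parcel” and “box” score as similar; here a bag-of-words vector stands in for the embedding model, which keeps the example self-contained but means it only matches shared words. The cosine-similarity ranking is the same idea either way, and the clip IDs and captions are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in embedding: word counts. A real system would call a learned model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query, clips, top_k=1):
    """Rank clips (id -> caption) by similarity between caption and query."""
    q = embed(query)
    ranked = sorted(clips.items(), key=lambda kv: cosine(q, embed(kv[1])), reverse=True)
    return [clip_id for clip_id, _ in ranked[:top_k]]


clips = {
    "clip_0912": "delivery driver in blue shirt drops large box at front door",
    "clip_1430": "red soccer ball left near backyard fence",
    "clip_1805": "car passes on street",
}
print(search("delivery driver in the blue shirt dropped off a large box", clips))
print(search("where is the red soccer ball", clips))
```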

Event summarization takes this a step further. You can request, “Give me a summary of all activity in the driveway last night.” The AI will analyze hours of footage, ignore irrelevant events like rustling leaves or a passing car, and present a concise summary: “A vehicle arrived at 10:15 PM. Two people exited and entered the house. A raccoon was seen near the trash cans at 2:30 AM.” For home monitoring, this turns hours of raw footage into actionable information.
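
The filtering half of summarization can be sketched as follows, assuming events arrive as dictionaries with a timestamp, a label, and a short description; the label names in `IGNORED_LABELS` are hypothetical. A production system would hand the kept events to an LLM to write the prose, whereas this sketch uses a fixed template. Sorting by full timestamps rather than clock time keeps 2:30 AM after 10:15 PM the previous evening.

```python
from datetime import datetime

# Hypothetical labels that an upstream detector marks as low-value.
IGNORED_LABELS = {"foliage_motion", "passing_vehicle"}


def fmt_time(ts):
    """Format like '10:15 PM' or '2:30 AM' (no leading zero)."""
    return ts.strftime("%I:%M %p").lstrip("0")


def summarize(events, ignored=IGNORED_LABELS):
    """Drop ignored events and join the rest into a chronological summary."""
    kept = sorted((e for e in events if e["label"] not in ignored), key=lambda e: e["time"])
    if not kept:
        return "No notable activity."
    return " ".join(f"{e['text']} at {fmt_time(e['time'])}." for e in kept)


night = [
    {"time": datetime(2024, 5, 2, 2, 30), "label": "animal",
     "text": "A raccoon was seen near the trash cans"},
    {"time": datetime(2024, 5, 1, 23, 40), "label": "foliage_motion", "text": "Leaves moved"},
    {"time": datetime(2024, 5, 1, 22, 15), "label": "vehicle_arrival", "text": "A vehicle arrived"},
    {"time": datetime(2024, 5, 1, 22, 16), "label": "passing_vehicle", "text": "A car passed"},
]
print(summarize(night))
```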

Proactive Automation and Ecosystem Integration

With contextual understanding, the AI camera becomes the central hub for true home automation. It can trigger complex routines based on nuanced events, not just simple motion. For example, the camera recognizing your specific car pulling into the driveway can initiate a “Welcome Home” scene: unlocking the door, turning on specific lights, adjusting the thermostat, and playing a welcome message through your smart speakers. This level of integration, where the visual context provided by the camera informs the entire smart home, is the ultimate promise of a connected ecosystem.
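
A “Welcome Home” rule of this kind might look like the sketch below. The `Detection` fields, the device names and commands in `WELCOME_HOME`, and the assumption that an on-device matcher supplies a vehicle identity are all hypothetical; the point is that the trigger is a recognized car in a specific zone, not mere motion.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str      # e.g. "vehicle"
    identity: str   # e.g. "household_car_1", from an assumed on-device matcher
    zone: str       # e.g. "driveway"


# Hypothetical (device, command) pairs making up the scene.
WELCOME_HOME = [
    ("front_door_lock", "unlock"),
    ("entry_lights", "on"),
    ("thermostat", "set_21C"),
    ("living_room_speaker", "play_welcome"),
]


def actions_for(detection, known_vehicles):
    """Only a recognized household car in the driveway triggers the scene."""
    if (detection.label == "vehicle"
            and detection.zone == "driveway"
            and detection.identity in known_vehicles):
        return list(WELCOME_HOME)
    return []


known = {"household_car_1"}
print(actions_for(Detection("vehicle", "household_car_1", "driveway"), known))
print(actions_for(Detection("vehicle", "unknown", "driveway"), known))
```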

More Than a Watchful Eye: New Frontiers for AI-Powered Vision

While security remains a primary function, the integration of advanced AI unlocks a vast array of applications that extend far beyond intrusion detection. The camera evolves into an all-purpose home assistant and caregiver.

The AI Companion and Home Organizer

The camera’s ability to track objects and understand routines makes it an invaluable organizational tool. It can help you find misplaced items, check if you left a window open, or even monitor your pantry. The overlap with pet tech is particularly exciting. You can now ask, “Did the dog walker come today?” or set up an intelligent alert: “Let me know if the cat jumps on the kitchen counter.” This transforms the camera from a security device into a helpful part of the family’s daily life, and it can even coordinate with a robot vacuum, helping it understand that a pet has made a mess that needs immediate attention.
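
Assuming the assistant has already turned “Let me know if the cat jumps on the kitchen counter” into an object label plus a counter region drawn by the user, the remaining check is a bounding-box overlap test. The `(x1, y1, x2, y2)` pixel box format, the `COUNTER` coordinates, and the function names are hypothetical.

```python
def overlaps(a, b):
    """True if axis-aligned boxes (x1, y1, x2, y2) intersect."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def should_alert(detections, label, zone_box):
    """Alert if any detection with the requested label overlaps the zone."""
    return any(d["label"] == label and overlaps(d["box"], zone_box) for d in detections)


COUNTER = (200, 300, 600, 360)  # hypothetical user-drawn counter region

frame = [
    {"label": "cat", "box": (350, 250, 450, 330)},  # cat partly on the counter
    {"label": "dog", "box": (50, 400, 150, 480)},   # dog on the floor
]
print(should_alert(frame, "cat", COUNTER))
```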

Accessibility and Assisted Living

Perhaps the most impactful application lies in assisted living and accessibility. For families caring for elderly relatives, these cameras offer peace of mind without being overly intrusive, which makes this a critical area of development for health-focused AI gadgets. Instead of constant check-ins, you can set up intelligent, non-invasive alerts like, “Notify me if Dad hasn’t gotten up from his chair by 10 AM,” or, more critically, “Alert me immediately if you detect a fall.” This kind of accessibility technology can provide independence for seniors and assurance for their loved ones, all without requiring the user to wear a device.
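
The “hasn’t gotten up by 10 AM” alert reduces to checking for the absence of an event before a deadline. This sketch assumes a hypothetical upstream model that emits `stood_up` events tagged with a person; fall detection itself is a trained model and is not shown.

```python
from datetime import datetime, time


def inactivity_alert(events, person, deadline, now):
    """True once `now` passes `deadline` with no 'stood_up' event for `person` today."""
    if now.time() < deadline:
        return False  # too early to conclude anything
    today = now.date()
    return not any(
        e["person"] == person
        and e["action"] == "stood_up"
        and e["time"].date() == today
        and e["time"].time() <= deadline
        for e in events
    )


deadline = time(10, 0)
events = [{"person": "dad", "action": "stood_up", "time": datetime(2024, 5, 1, 9, 12)}]
print(inactivity_alert(events, "dad", deadline, datetime(2024, 5, 1, 10, 5)))  # got up at 9:12
print(inactivity_alert([], "dad", deadline, datetime(2024, 5, 1, 10, 5)))      # no activity seen
```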

The Future of Robotics and Automation

Looking ahead, these intelligent vision systems will be the eyes for the next generation of home robotics. A robot vacuum will no longer just bump its way around; it will use the home’s network of cameras to understand the real-time layout of a room, identifying a newly dropped backpack or a spilled drink and navigating accordingly. Similarly, a future personal home robot could be tasked with, “Please bring me the book I left on the coffee table.” The robot would query the home’s AI, which uses the cameras to locate the book and guide the robot to it. This synergy between ambient vision and mobile robotics will define the truly automated home of the future.
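
The robot scenario, like the soccer-ball query earlier, depends on a memory of where each object was last seen. Here is a minimal sketch, assuming cameras report hypothetical `Sighting` records with a timestamp. It keeps only the newest sighting per object so that a delayed, older report cannot overwrite it.

```python
from dataclasses import dataclass


@dataclass
class Sighting:
    obj: str    # e.g. "book"
    room: str   # e.g. "living_room"
    spot: str   # e.g. "coffee_table"
    t: float    # seconds since epoch


class ObjectMemory:
    """Most recent camera sighting of each object."""

    def __init__(self):
        self._last = {}

    def record(self, s):
        prev = self._last.get(s.obj)
        if prev is None or s.t >= prev.t:  # ignore out-of-order older reports
            self._last[s.obj] = s

    def locate(self, obj):
        """Return the latest Sighting, or None if the object was never seen."""
        return self._last.get(obj)


memory = ObjectMemory()
memory.record(Sighting("book", "bedroom", "nightstand", 100.0))
memory.record(Sighting("book", "living_room", "coffee_table", 200.0))
memory.record(Sighting("book", "bedroom", "nightstand", 150.0))  # arrives late, older
goal = memory.locate("book")
print(goal.room, goal.spot)
```

A robot asked for the book would call `memory.locate("book")` and plan a path to the returned room and spot.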

Adopting Advanced AI: Practical Tips and Potential Pitfalls

As with any powerful technology, the adoption of advanced AI in the home comes with critical considerations. Navigating this new landscape requires a proactive approach to privacy, cost, and the inherent limitations of the technology.

Privacy and Data Security: The Highest Priority

The idea of an always-on, AI-powered camera in your home rightfully raises privacy concerns. Before investing in any ecosystem, it is crucial to scrutinize the manufacturer’s privacy policies. Best practices include:

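- End-to-End Encryption: Favor services that offer end-to-end encryption, where only your own devices hold the decryption keys. At a minimum, confirm that video is encrypted both in transit and at rest on the company’s servers.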

- Two-Factor Authentication (2FA): Always enable 2FA on your account to prevent unauthorized access.

- Understanding Data Usage: Find out how your data is used for AI model training and whether you can opt out.

- Prioritizing On-Device Processing: Favor systems that do as much processing as possible locally on the device, minimizing the amount of sensitive data sent to the cloud.

The Cost of Intelligence: Subscriptions and Hardware

Unsurprisingly, these advanced cognitive features often come at a price. Because running large AI models is computationally expensive, the most powerful capabilities, like semantic search and detailed event summaries, are likely to sit behind a premium subscription tier. Consumers should factor this recurring cost into their purchasing decisions. Furthermore, the on-device processing requirements may necessitate newer, more expensive hardware, making this an upgrade that involves both camera and service.

Over-reliance and Algorithmic Bias

Finally, it’s important to maintain a healthy perspective. While incredibly powerful, these AI systems are not infallible. They can make mistakes, misinterpret events, or be affected by algorithmic biases. It’s crucial to use them as a tool to enhance awareness, not as a complete replacement for human judgment. Users should be aware of their limitations and provide feedback to manufacturers to help improve the systems over time.

Conclusion: A New Definition of “Smart”

The integration of advanced, multimodal AI into smart home cameras represents a pivotal moment in consumer technology. We are moving beyond the era of simple alerts and into a future of active intelligence, where our devices don’t just see, but understand. What might be called the “Gemini effect,” after Google’s Gemini family of multimodal models, is the infusion of powerful LLMs into everyday gadgets, and it redefines what a smart device can be. The benefits are substantial, promising not only a new level of security but also unprecedented convenience, powerful automation, and meaningful applications in areas like elder care and personal organization. While navigating the critical challenges of privacy and cost is paramount, the trajectory is clear. The smart camera is no longer just a security gadget; it is becoming the perceptive heart of a truly intelligent home, setting a new standard for how we interact with the technology that surrounds us and laying the groundwork for future innovations in everything from personal robots to smart city infrastructure.