For years, AI assistants like Siri, Alexa, and Google Assistant have been a staple of modern technology, adept at setting timers, playing music, and answering basic factual questions. While undeniably useful, their capabilities have often felt rigid and transactional, confined to a predefined set of commands. They understand requests, but they don’t truly comprehend context or engage in nuanced conversation. Now, a seismic shift is underway, driven by the same technology that powers generative AI platforms like ChatGPT. Major technology companies are racing to rebuild their digital assistants from the ground up around Large Language Models (LLMs). This evolution promises to transform them from simple task executors into truly intelligent conversational partners, a development poised to redefine personal productivity, business operations, and our entire interaction with the digital world. It is arguably the most significant development for AI assistants in a decade, with implications that will ripple across the entire tech landscape.

The Architectural Evolution: From Command-and-Control to Conversational Intelligence

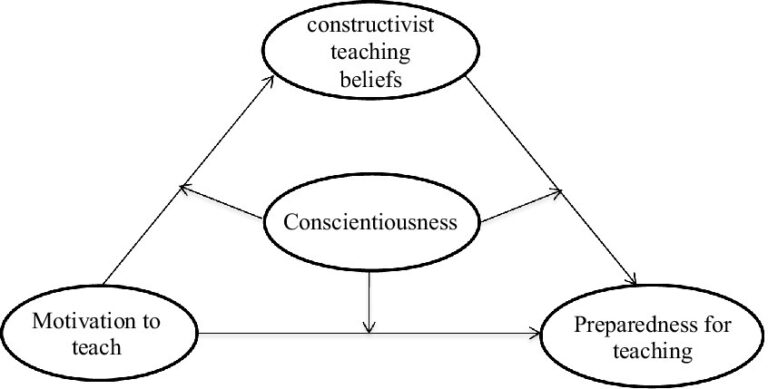

The impending revolution in AI assistants is not merely an incremental update; it’s a complete architectural overhaul. To appreciate the magnitude of this change, it’s essential to understand the fundamental differences between the old guard of digital assistants and the new, LLM-powered paradigm.

Understanding the Old Guard: Intent-Based Assistants

Traditional AI assistants operate on a model centered around Natural Language Understanding (NLU). When you speak a command, the system’s primary goal is to decipher two things: your intent and the associated entities. For example, in the phrase, “Hey Siri, what’s the weather like in London?” the NLU model identifies:

- Intent: `get_weather`

- Entity: `location: London`

This intent-entity pair then triggers a specific, pre-programmed API call or function to fetch the weather data for London and read it back to you. While effective for straightforward tasks, this model has significant limitations. It struggles with ambiguity, lacks memory of previous interactions, and cannot handle complex, multi-step requests that haven’t been explicitly coded. It’s a system of rigid commands and responses, not a fluid conversation.
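To make the intent-entity model concrete, here is a minimal sketch of a rule-based parser and dispatcher. The pattern table, the handler names (`get_weather`, `set_timer`), and the fallback message are all hypothetical illustrations, not the internals of any real assistant; production NLU uses trained classifiers rather than regular expressions, but the shape (one intent, its entities, one pre-programmed handler) is the same.

```python
import re
from typing import Callable, Optional

# Hypothetical handlers: each intent maps to exactly one pre-programmed function.
def get_weather(location: str) -> str:
    # Placeholder; a real assistant would call a weather API here.
    return f"Fetching the weather for {location}."

def set_timer(minutes: str) -> str:
    return f"Timer set for {minutes} minutes."

HANDLERS: dict[str, Callable[[str], str]] = {
    "get_weather": get_weather,
    "set_timer": set_timer,
}

# One hand-written pattern per intent; the named group captures the entity.
PATTERNS: dict[str, re.Pattern[str]] = {
    "get_weather": re.compile(r"weather (?:like )?in (?P<entity>[a-z ]+?)\??$", re.IGNORECASE),
    "set_timer": re.compile(r"timer for (?P<entity>\d+) minutes?", re.IGNORECASE),
}

def parse(utterance: str) -> Optional[tuple[str, str]]:
    """Return (intent, entity) for the first matching pattern, or None."""
    for intent, pattern in PATTERNS.items():
        match = pattern.search(utterance)
        if match:
            return intent, match.group("entity")
    return None

def dispatch(utterance: str) -> str:
    parsed = parse(utterance)
    if parsed is None:
        # Anything outside the predefined patterns simply fails.
        return "Sorry, I can't help with that."
    intent, entity = parsed
    return HANDLERS[intent](entity)

print(parse("Hey Siri, what's the weather like in London?"))  # ('get_weather', 'London')
print(dispatch("What should I pack for London?"))  # Sorry, I can't help with that.
```

The second call illustrates the limitation described above: a request that no pattern anticipates falls straight through to the fallback.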

The New Paradigm: LLM-Powered Generative Assistants

LLMs operate on a completely different principle. Instead of matching an utterance to a single intent, they model context, nuance, and the relationships between concepts learned from vast training datasets. An LLM-powered assistant doesn’t just map a command to a function; it reasons about the request and generates a comprehensive, human-like response or action plan. This unlocks a new dimension of capability. A user could say, “I’m planning a trip to London next week, what should I pack and what are some indoor activities I can do if it rains?” An intent-based assistant would likely fail, because no single predefined intent covers this multi-part request. An LLM-powered assistant could:

- Check the weather forecast for London for the following week.

- Based on the predicted temperature and precipitation, generate a suggested packing list.

- Cross-reference the location with a database of attractions to suggest museums, galleries, and indoor markets.

- Present all of this information in a natural, conversational format.

This leap from task execution to task composition is the core of the revolution, and recent reporting on phones and mobile devices suggests that this technology will soon be a standard feature on flagship devices.
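One way to picture task composition is as a plan expressed as data: a list of tool calls in which later steps consume earlier outputs. The sketch below hard-codes such a plan for the London example; in an LLM-powered assistant the model would generate it from the user’s request. The tools (`weather_forecast`, `packing_list`, `indoor_attractions`) and their return values are hypothetical stubs, not real services or real forecast data.

```python
from typing import Any, Callable

# Hypothetical tool stubs; a real assistant would call live services here.
def weather_forecast(city: str) -> dict[str, Any]:
    return {"high_c": 14, "rain_days": 4}  # placeholder values, not real data

def packing_list(forecast: dict[str, Any]) -> list[str]:
    items = ["layers", "comfortable walking shoes"]
    if forecast["high_c"] < 15:
        items.append("warm jacket")
    if forecast["rain_days"] > 0:
        items.append("umbrella")
    return items

def indoor_attractions(city: str) -> list[str]:
    catalog = {"London": ["museums", "galleries", "indoor markets"]}
    return catalog.get(city, [])

# A plan as data: (output name, tool, names of the context values it reads).
# An LLM would emit this plan from the request; here it is hard-coded.
Step = tuple[str, Callable[..., Any], list[str]]
PLAN: list[Step] = [
    ("forecast", weather_forecast, ["city"]),
    ("packing", packing_list, ["forecast"]),
    ("activities", indoor_attractions, ["city"]),
]

def execute(plan: list[Step], context: dict[str, Any]) -> dict[str, Any]:
    """Run each step in order, feeding earlier outputs into later tools."""
    for output, tool, inputs in plan:
        context[output] = tool(*(context[name] for name in inputs))
    return context

def compose(ctx: dict[str, Any]) -> str:
    """Turn the collected results into one conversational answer."""
    f = ctx["forecast"]
    return (
        f"Next week in {ctx['city']}: highs around {f['high_c']}°C, rain on {f['rain_days']} days. "
        f"Pack {', '.join(ctx['packing'])}. "
        f"For rainy days, consider {', '.join(ctx['activities'])}."
    )

print(compose(execute(PLAN, {"city": "London"})))
```

Keeping the plan as data rather than code is a deliberate choice in this sketch: it lets the assistant inspect or validate a model-generated plan before any tool runs.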

Hybrid Models: The Bridge to the Future

In the near term, the most likely approach is a hybrid model. This architecture uses the LLM as a central “reasoning engine” or conversational router. For creative or complex queries, the LLM handles the response directly. For deterministic tasks where accuracy is paramount (e.g., “Call Mom” or “Set an alarm for 7 AM”), the LLM would understand the request and then delegate the execution to the older, highly reliable intent-based APIs. This hybrid approach mitigates the risk of LLM “hallucinations” (generating incorrect information) for critical functions while still providing the immense benefits of generative conversational AI.
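A minimal sketch of the hybrid router follows, assuming the model is asked to reply in JSON with either a tool call or a direct answer. `fake_llm` is a hypothetical stand-in for a real model call, and the tool names are illustrative. The key design point is the allowlist: the LLM only chooses, while execution stays with deterministic handlers, and any tool name outside the list is refused.

```python
import json
from typing import Callable

def call_contact(name: str) -> str:
    return f"Calling {name}..."

def set_alarm(time: str) -> str:
    return f"Alarm set for {time}."

# Allowlist of deterministic, well-tested handlers the LLM may delegate to.
DETERMINISTIC_TOOLS: dict[str, Callable[..., str]] = {
    "call_contact": call_contact,
    "set_alarm": set_alarm,
}

def fake_llm(utterance: str) -> str:
    """Hypothetical stand-in for a model that replies with JSON: a tool call or an answer."""
    text = utterance.lower()
    if text.startswith("call "):
        return json.dumps({"tool": "call_contact", "args": {"name": utterance[5:]}})
    if "alarm" in text:
        return json.dumps({"tool": "set_alarm", "args": {"time": utterance.rsplit("for ", 1)[-1]}})
    return json.dumps({"answer": f"(generated reply to: {utterance})"})

def route(utterance: str) -> str:
    decision = json.loads(fake_llm(utterance))
    tool_name = decision.get("tool")
    if tool_name is None:
        return decision["answer"]  # open-ended request: use the generated text
    handler = DETERMINISTIC_TOOLS.get(tool_name)
    if handler is None:
        return "Sorry, I can't do that."  # never execute a tool outside the allowlist
    return handler(**decision["args"])

print(route("Call Mom"))               # Calling Mom...
print(route("Set an alarm for 7 AM"))  # Alarm set for 7 AM.
```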

Beyond the Smartphone: A New Era for Connected Devices

While the smartphone will likely be the initial proving ground for these next-generation assistants, the true impact will be felt as this intelligence permeates the entire ecosystem of connected devices. The assistant will become an ambient computing layer, unifying our disparate gadgets into a cohesive, intelligent network.

The Smart Home Gets Smarter

The current smart home is a collection of devices we command individually. Recent developments in smart home AI point toward a future where the home operates holistically. Instead of saying, “Turn on the kitchen lights to 50%,” you could say, “I’m about to start cooking dinner.” The AI, knowing the time of day and your habits, could adjust the connected lighting, preheat a smart oven, and play your favorite cooking playlist on a smart speaker. This contextual awareness transforms the home from a set of remote-controlled switches into a responsive environment.
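The cooking example can be sketched as mapping one inferred activity to a scene of device actions, parameterized by time of day and learned habits. Everything here is hypothetical: the device names, the 18:00 lighting cutoff, the habit profile, and `infer_activity`, which is a keyword check standing in for the LLM that would actually interpret free-form speech.

```python
from dataclasses import dataclass
from datetime import time
from typing import Any, Optional

@dataclass
class Action:
    device: str
    command: str
    value: Any

# Hypothetical habit profile learned from past behavior.
HABITS = {"oven_temp_c": 200, "cooking_playlist": "Kitchen Jazz"}

def infer_activity(utterance: str) -> Optional[str]:
    # Stand-in for the LLM: a real assistant would infer the activity from free text.
    return "cooking" if "cook" in utterance.lower() else None

def cooking_scene(now: time, habits: dict[str, Any]) -> list[Action]:
    # Assumed rule: full task lighting after 18:00, softer light earlier in the day.
    brightness = 100 if now >= time(18, 0) else 60
    return [
        Action("kitchen_lights", "set_brightness", brightness),
        Action("oven", "preheat_c", habits["oven_temp_c"]),
        Action("kitchen_speaker", "play", habits["cooking_playlist"]),
    ]

def handle(utterance: str, now: time, habits: dict[str, Any]) -> list[Action]:
    """Translate one high-level statement into a list of device commands."""
    if infer_activity(utterance) == "cooking":
        return cooking_scene(now, habits)
    return []  # unrecognized context: do nothing rather than guess

for action in handle("I'm about to start cooking dinner", time(19, 30), HABITS):
    print(action)
```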

Wearables and Personal Companions

The implications for personal devices are profound. Recent developments in wearables suggest that devices like smartwatches and augmented reality glasses are set to become powerful AI interfaces. Imagine a runner asking their watch, “Based on my sleep data from last night and my current heart rate, how should I pace myself to beat my 5k record?” The assistant could analyze biometric data in real time and provide live coaching, making health and wellness technology deeply personalized. Similarly, an LLM assistant running on smart glasses could provide real-time translations or identify objects in your field of view, turning the glasses into a true AI companion device. The same capability has massive potential for accessibility devices, where an assistant could describe a user’s surroundings in rich detail.
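Part of the runner’s question is plain arithmetic that a well-designed assistant would likely compute deterministically rather than leave to the model: the pace needed over the remaining distance to match the record. The sketch below does that and packages the result with biometric readings as structured context for the LLM, which would handle the actual coaching advice. The function names and context keys are hypothetical; no real fitness API is implied.

```python
from typing import Any, Optional

DISTANCE_KM = 5.0

def format_pace(seconds_per_km: float) -> str:
    minutes, seconds = divmod(round(seconds_per_km), 60)
    return f"{minutes}:{seconds:02d}/km"

def pace_needed(record_s: float, elapsed_s: float, covered_km: float) -> Optional[float]:
    """Average pace (s/km) over the remaining distance that exactly ties the record.

    Running any faster than this beats it. Returns None if the run is over
    or the record is already out of reach.
    """
    remaining_km = DISTANCE_KM - covered_km
    remaining_s = record_s - elapsed_s
    if remaining_km <= 0 or remaining_s <= 0:
        return None
    return remaining_s / remaining_km

def coaching_context(record_s: float, elapsed_s: float, covered_km: float,
                     sleep_hours: float, heart_rate_bpm: int) -> dict[str, Any]:
    """Structured facts an assistant could hand to the model with the runner's question."""
    needed = pace_needed(record_s, elapsed_s, covered_km)
    return {
        "record_pace": format_pace(record_s / DISTANCE_KM),
        "pace_needed": format_pace(needed) if needed is not None else "out of reach",
        "sleep_hours": sleep_hours,
        "heart_rate_bpm": heart_rate_bpm,
    }

print(coaching_context(1500, 620, 2.0, 6.5, 158))
```

For example, with a 25:00 record (1,500 s) and 2 km covered in 10:20 (620 s), 880 s remain for 3 km, so the runner must average faster than about 4:53/km.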

The Future of Mobility and Robotics

This conversational intelligence will fundamentally change how we interact with vehicles and robots. In an autonomous vehicle, a driver could hold a natural dialogue with the car, asking it to find routes that avoid traffic while accommodating multiple stops for errands. At home, advances in robotics suggest that a personal robot, powered by an LLM and AI-enabled cameras and vision systems, could understand a command like, “The dog knocked over the plant in the living room, can you clean it up?” It would then navigate to the room, identify the mess, and use the appropriate tools to clean it, a significant upgrade from the simple mapping of today’s robot vacuums.
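Once the assistant has turned “a few errands on the way” into a list of stops, ordering them is a classic routing problem. The sketch below uses a swapped-in technique, brute-force search over every ordering, which is exact but only practical for a handful of stops because the number of orderings grows factorially. The stop names and travel times are hypothetical; a real system would refresh them from live traffic data and would likely use a heuristic solver for longer trips.

```python
from itertools import permutations

# Hypothetical travel times in minutes; a real system would refresh these from live traffic.
TRAVEL_MIN = {
    ("home", "pharmacy"): 10,
    ("home", "grocery"): 15,
    ("home", "post_office"): 20,
    ("pharmacy", "grocery"): 8,
    ("pharmacy", "post_office"): 12,
    ("grocery", "post_office"): 5,
}

def travel(a: str, b: str) -> int:
    # Assumes symmetric travel times; look the pair up in either order.
    return TRAVEL_MIN[(a, b)] if (a, b) in TRAVEL_MIN else TRAVEL_MIN[(b, a)]

def route_minutes(start: str, order: tuple[str, ...]) -> int:
    path = (start, *order, start)  # round trip back to the start
    return sum(travel(a, b) for a, b in zip(path, path[1:]))

def best_errand_order(start: str, errands: list[str]) -> tuple[tuple[str, ...], int]:
    """Try every ordering (n! of them) and keep the fastest round trip."""
    best = min(permutations(errands), key=lambda order: route_minutes(start, order))
    return best, route_minutes(start, best)

print(best_errand_order("home", ["pharmacy", "grocery", "post_office"]))
```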

The AI Assistant as a True Workplace Partner

Perhaps the most significant economic impact of LLM-powered assistants will be in the enterprise. By integrating with workplace software and internal data, these assistants can evolve into indispensable productivity partners, automating complex workflows and democratizing data analysis.

Automating Complex Workflows

A key theme in workplace AI is the concept of the AI-powered agent. An employee could give a high-level directive such as: “Review the sales data from the Q3 report, summarize the key trends in a three-paragraph brief, create a presentation with charts illustrating these trends, and draft an email to the sales team with the summary and a link to the presentation.” The AI assistant would then orchestrate this entire workflow, interacting with a BI tool, a word processor, presentation software, and an email client. This capability moves beyond simple automation to genuine task delegation.
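The Q3 directive is a chain in which each step depends on the one before it. The sketch below shows that dependency structure with hypothetical stubs for the BI tool, the summarizer, the slide generator, and the email client; the sales figures and the `example.com` link are placeholders, not real data or a real service. An agent would additionally decide this sequence itself and record each step, which the `log` list loosely imitates.

```python
# Hypothetical tool stubs standing in for a BI tool, an LLM, slide software, and email.
def fetch_sales_trends(report: str) -> dict[str, float]:
    # Placeholder quarter-over-quarter changes, not real data.
    return {"EMEA": 0.12, "APAC": 0.08, "Americas": -0.03}

def write_brief(trends: dict[str, float], paragraphs: int = 3) -> str:
    # Stand-in for LLM summarization: one short paragraph per region, strongest first.
    ranked = sorted(trends.items(), key=lambda kv: kv[1], reverse=True)
    parts = [f"{region}: {change:+.0%} quarter over quarter." for region, change in ranked]
    return "\n\n".join(parts[:paragraphs])

def create_deck(trends: dict[str, float]) -> str:
    # A real tool would build charts; this stub just returns a placeholder link.
    return "https://example.com/decks/q3-sales"

def draft_email(brief: str, link: str) -> str:
    return f"To: sales-team\nSubject: Q3 sales trends\n\n{brief}\n\nSlides: {link}"

def run_q3_workflow(report: str) -> tuple[str, list[str]]:
    """Chain the steps so each one consumes the previous step's output."""
    log: list[str] = []
    trends = fetch_sales_trends(report)
    log.append("bi_query")
    brief = write_brief(trends)
    log.append("brief")
    deck_url = create_deck(trends)
    log.append("deck")
    email = draft_email(brief, deck_url)
    log.append("email")
    return email, log

email, steps = run_q3_workflow("Q3")
print(email)
```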

Data Analysis and Insights on Demand

LLMs excel at understanding and summarizing unstructured data. When connected to a company’s internal knowledge base, an LLM-powered assistant can act as an instant analyst. A project manager could ask, “What were the main customer complaints about Project Titan, and what were the engineering team’s proposed solutions?” The assistant could parse through meeting transcripts, support tickets, and internal documents to provide a concise, accurate summary in seconds, a task that would have previously taken hours of manual research.
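Assistants that answer from internal documents usually retrieve a few relevant sources first and pass only those to the model, often called retrieval-augmented generation. The sketch below uses a deliberately simple keyword-overlap retriever as a stand-in for the embedding-based search a production system would more likely use. The documents, stopword list, and prompt wording are hypothetical.

```python
import re
from dataclasses import dataclass

@dataclass
class Doc:
    source: str
    text: str

# Tiny illustrative stopword list; real systems use fuller lists or embeddings.
STOPWORDS = {"a", "about", "and", "are", "for", "is", "of", "s", "the", "to", "was", "were", "what"}

def tokens(text: str) -> set[str]:
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}

def retrieve(question: str, docs: list[Doc], k: int = 2) -> list[Doc]:
    """Rank documents by how many question words they share; drop non-matches."""
    q = tokens(question)
    scored = [(len(q & tokens(d.text)), d) for d in docs]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]

def build_prompt(question: str, docs: list[Doc]) -> str:
    context = "\n".join(f"[{d.source}] {d.text}" for d in docs)
    return f"Answer using only these sources and cite them.\n{context}\n\nQuestion: {question}"

docs = [
    Doc("ticket-101", "Customer complaint: Project Titan export is slow and times out."),
    Doc("meeting-0412", "Engineering proposed solutions for Project Titan: add caching and paginate exports."),
    Doc("memo-hr", "Reminder: submit holiday requests by Friday."),
]
question = ("What were the main customer complaints about Project Titan, "
            "and what were the engineering team's proposed solutions?")
print(build_prompt(question, retrieve(question, docs)))
```

Restricting the model to retrieved sources and asking it to cite them does not guarantee accuracy, but it makes each claim in the summary traceable to a specific ticket or transcript.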

Case Study: A Day with an Enterprise AI Assistant

Consider a marketing manager, Sarah. Her day begins by asking her assistant, “Summarize my unread emails and highlight anything urgent from the product team.” The AI provides a verbal brief. Later, she’s in a brainstorming session and asks the assistant, connected to a smart display, to “Generate some creative slogans for our new eco-friendly product line, focusing on sustainability and modern design.” In the afternoon, she asks it to “Analyze the sentiment of our latest social media campaign and compare its engagement metrics to the previous one.” The assistant generates a report with charts and key takeaways. This level of integration transforms the assistant from a novelty into a core component of the business workflow.

Navigating the Transition: Opportunities and Obstacles

The path to this LLM-powered future is filled with immense opportunity, but it is not without significant technical and ethical challenges. Both developers and consumers need to navigate this transition thoughtfully.

For Developers and Businesses:

- Best Practice – Embrace Hybrid Architectures: Don’t throw out the old systems entirely. Use reliable, deterministic APIs for critical functions while leveraging LLMs for creativity and complex reasoning. This balances innovation with the need for accuracy.

- Best Practice – Prioritize Security and Privacy: Recent security coverage emphasizes the risk of sending sensitive personal or corporate data to third-party cloud services. Businesses should explore solutions that run on-device or in a private cloud. This is a central theme in edge AI, where processing happens locally to improve speed and security.

- Common Pitfall – Underestimating Data Governance: An assistant connected to internal company data is powerful but risky. Strict access controls and data governance policies are essential to prevent leaks or unauthorized access. Advances in AI monitoring tools and secure sensor and IoT infrastructure will be critical here.
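The security and governance practices above can be combined in a small gate that runs before anything reaches the model: drop documents the user is not permitted to see, and redact obvious personal data when the request will be sent to a third-party cloud rather than processed on-device. This is a minimal sketch; the group names, document IDs, and regular expressions are illustrative assumptions, and real PII detection needs far broader coverage than two patterns.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    doc_id: str
    allowed_groups: frozenset[str]
    text: str

# Illustrative patterns only. EMAIL runs first so digits inside addresses
# are not mistaken for phone numbers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def redact(text: str) -> str:
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

def build_context(user_groups: set[str], docs: list[Document],
                  send_to_cloud: bool) -> tuple[list[str], list[str]]:
    """Return (texts the assistant may use, IDs withheld for the audit log)."""
    visible: list[str] = []
    denied: list[str] = []
    for doc in docs:
        if doc.allowed_groups & user_groups:
            # Redact only when data leaves the device or private cloud.
            visible.append(redact(doc.text) if send_to_cloud else doc.text)
        else:
            denied.append(doc.doc_id)
    return visible, denied

docs = [
    Document("crm-1", frozenset({"sales"}), "Contact jane.doe@example.com or +44 20 7946 0958."),
    Document("hr-7", frozenset({"hr"}), "Salary review notes."),
]
print(build_context({"sales"}, docs, send_to_cloud=True))
```

Filtering happens before retrieval or prompting, so a document the user cannot open never enters the model’s context at all; logging the withheld IDs gives governance teams an audit trail.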

For Consumers:

- Tip – Understand Your Data Footprint: Be conscious of the permissions you grant these new assistants. Understand what data is being collected, how it is used, and whether it is used to train the underlying models.

- Consideration – The Art of Prompting: Interacting with a generative assistant is a new skill. Learning how to phrase questions and commands to get the best results (prompt engineering) will be key to unlocking its full potential.

The Broader Technological Horizon

This is just the beginning. Ongoing AI research and prototyping continue to push the boundaries of what’s possible. In the future, we may see assistants that proactively anticipate our needs based on our behavior and environment. Convergence with other fields, such as neural interfaces, could one day allow for seamless, thought-based interaction with our digital world, further personalizing everything from gaming gadgets to advanced health and bio-AI devices.

Conclusion: The Dawn of the Conversational Age

We are standing at the inflection point of a new technological era. The integration of Large Language Models into AI assistants marks the end of the command-and-control era and the dawn of a truly conversational age. This shift will dissolve the boundaries between applications and create a unified, intelligent layer that orchestrates our digital lives. From making our homes and cars more responsive to revolutionizing workplace productivity, the impact will be profound and far-reaching. The race to perfect this technology is not just about building a better voice assistant; it’s about defining the next major paradigm in human-computer interaction. The companies that succeed will not only lead the market but will fundamentally change our relationship with technology itself.