The relentless pace of innovation in artificial intelligence has crossed another frontier, this time targeting the very core of the digital world: software development. For years, developers have leveraged AI-powered tools for simple code completion and basic suggestions. However, the landscape is undergoing a seismic shift. A new generation of highly sophisticated AI coding assistants is emerging, moving beyond mere syntax helpers to become true collaborative partners in the complex art of software engineering. These advanced models, appearing in competitive arenas and private betas, demonstrate a deep understanding of code, architecture, and developer intent, heralding a new era of productivity and creativity. This evolution isn’t just an incremental update; it represents a fundamental change in how we build, debug, and maintain software, with far-reaching implications across every technological sector.

The Dawn of the AI Co-Architect: Beyond Simple Code Completion

The evolution from first-generation AI assistants to the current wave is akin to the difference between a spellchecker and a seasoned editor. Early tools like the initial versions of GitHub Copilot were revolutionary, offering line-by-line suggestions and completing boilerplate code. While incredibly useful, their capabilities were often limited to the immediate context of a single file. The latest models operate on a different level, exhibiting capabilities that were purely theoretical only a short time ago. The shift is set to affect everything from mobile app development to the complex systems that power smart cities and public infrastructure.

Key Capabilities of Next-Generation Models

What truly sets these new assistants apart is their holistic understanding of a project. They are not just code generators; they are becoming system architects, debuggers, and refactoring experts. Here are some of the defining characteristics:

- Full-Stack Project Awareness: Unlike their predecessors, these models can ingest and reason across an entire codebase. Given access to a multi-file repository, they can understand the intricate relationships between the frontend, backend, database schemas, and deployment configurations. This allows them to generate code that is not only syntactically correct but also contextually appropriate for the entire application.

- Complex Reasoning and Debugging: These assistants can trace logic flows across multiple function calls and files to identify the root cause of complex bugs. A developer can present a stack trace or a vague description of an issue, and the model can analyze the relevant code sections to propose a precise fix, complete with an explanation of its reasoning. This is a game-changer for maintaining legacy systems and onboarding new engineers.

- Architectural Refactoring: One of the most impressive new skills is the ability to perform large-scale refactoring. A developer could issue a high-level command like, “Convert this monolithic Express.js API into a microservices architecture with separate services for users, products, and orders,” and the model can generate the new directory structure, boilerplate for each service, and the necessary API gateway configuration. This capability could accelerate modernization efforts across the industry, from firmware for AI edge devices to control systems for drones.

- Multi-Modal Understanding: The integration of vision capabilities is a significant leap. Developers can now provide a UI mockup from a tool like Figma, or even a hand-drawn sketch, and the AI can generate the corresponding front-end code, such as HTML/CSS with JavaScript or React, or SwiftUI views. This drastically narrows the gap between design and implementation, a crucial advantage in fast-paced fields like AR/VR and interfaces for AI companion devices.

This leap in capability is driven by three advances. The first is improved model architecture. The second is much larger context windows, now hundreds of thousands of tokens, enough to hold a substantial portion of a codebase at once. The third is specialized training datasets that include not just open-source code but also technical documentation, programming textbooks, and architectural whitepapers. All three remain active areas of AI research as the industry pushes the boundaries of what’s possible.

A Technical Deep Dive: What’s Powering the Revolution?

The remarkable performance of these next-generation coding assistants isn’t magic; it’s the result of converging breakthroughs in several key areas of AI research. Understanding these underlying technologies helps us appreciate their power and anticipate future developments. The advancements are particularly relevant for specialized fields like health and biotech devices, where code reliability is paramount, and autonomous vehicles, which rely on extremely complex software stacks.

Architectural Innovations and Training Data

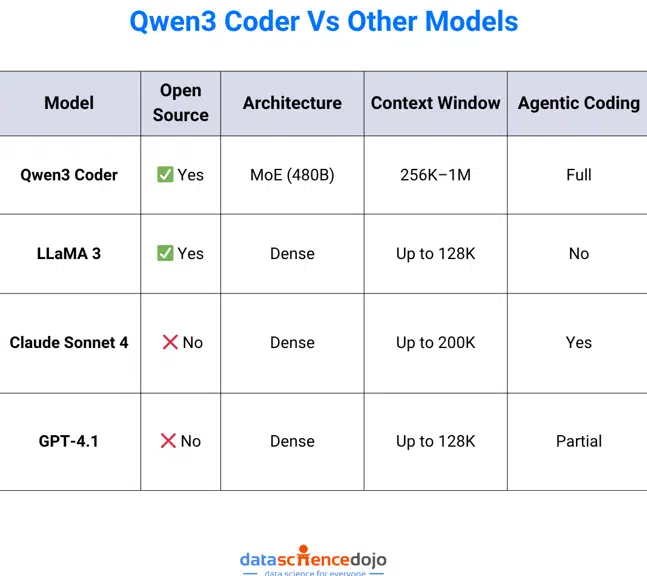

At the heart of these models are more sophisticated neural network architectures. While still based on the Transformer, some incorporate techniques like Mixture of Experts (MoE). In an MoE layer, a learned router activates only a small subset of “expert” sub-networks for each token. This keeps the computation per token low while increasing the model’s total capacity for specialized knowledge. For coding, experts may come to specialize in patterns common to particular languages or domains, such as Python, Rust, SQL, or system design. That specialization is learned during training rather than assigned by hand, but it can contribute to higher-quality output.

Furthermore, the training data has become far more curated. Instead of just scraping public repositories, AI labs are creating datasets that pair code with high-quality explanations, link code to its corresponding documentation, and map bug reports to the commits that fixed them. This “meta-knowledge” allows the models to learn not just the “what” of coding (syntax) but the “why” (design patterns, best practices, and intent). This deeper understanding is crucial for software in sensitive applications, such as security devices and systems that monitor critical energy and utility infrastructure.

Real-World Scenario: Building a Smart Home Automation Service

Let’s consider a practical example. A developer is tasked with creating a new service for a smart home platform. The goal is a backend that receives data from various IoT sensors (like temperature sensors and smart locks) and triggers actions on other devices (like smart lights).

- Initial Prompt: The developer provides a high-level prompt to the AI assistant: “Scaffold a new microservice in Go for a smart home automation system. It should use gRPC for communication. Create protobuf definitions for a `SensorReading` message (with device_id, timestamp, and a `oneof` for data types like temperature, humidity, and door_status) and a `ControlAction` message. Set up a basic server that logs incoming sensor readings.”

- AI-Generated Output: The assistant generates a complete project structure:

  - A `/proto` directory with a well-formatted `smarthome.proto` file.
  - A `/cmd/server` directory with a `main.go` file containing the gRPC server setup.
  - A `go.mod` file with the necessary dependencies (gRPC, protobuf).
  - A Makefile or shell script to compile the protobuf definitions.

- Iterative Refinement: The developer then asks for more features. “Add a new gRPC endpoint called `SetAutomationRule`. It should take a rule that triggers a `ControlAction` on a specific light when a temperature sensor reads above 25 degrees Celsius. Store these rules in-memory for now.”

- Complex Logic Generation: The AI modifies the protobuf definitions, adds the new RPC to the server, and implements the in-memory logic to store and evaluate the rules. It understands the relationship between the sensor data and the control actions, creating the core business logic of the service. The same process can be applied to robotics software, such as control loops for personal robots, or even to toys and entertainment gadgets.
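The rule logic from the last two steps is small enough to sketch. The Go code below is a hand-written approximation, not protoc-generated code. It models `SensorReading` and its `oneof` as an interface with one type per case, and the generated Go code for oneof fields follows a similar pattern. It then implements an in-memory `SetAutomationRule` and an `Evaluate` function. The `RuleStore` type and field names such as `ThresholdC` and `TargetID` are hypothetical. The gRPC wiring is omitted so the example runs on its own.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// SensorReading is a hand-written stand-in for the protobuf message in the
// prompt. The oneof is modeled as an interface with one type per case.
type SensorReading struct {
	DeviceID  string
	Timestamp time.Time
	Data      readingData // exactly one of Temperature, Humidity, DoorStatus
}

type readingData interface{ isReadingData() }

type Temperature struct{ Celsius float64 }
type Humidity struct{ Percent float64 }
type DoorStatus struct{ Open bool }

func (Temperature) isReadingData() {}
func (Humidity) isReadingData()    {}
func (DoorStatus) isReadingData()  {}

// AutomationRule: when SensorID reports a temperature strictly above
// ThresholdC, send Action to TargetID. Field names are hypothetical.
type AutomationRule struct {
	SensorID   string
	ThresholdC float64
	TargetID   string
	Action     string
}

// ControlAction is the command the service would send to a device.
type ControlAction struct {
	DeviceID string
	Action   string
}

// RuleStore holds rules in memory. The mutex matters because a gRPC server
// handles requests on concurrent goroutines.
type RuleStore struct {
	mu    sync.RWMutex
	rules []AutomationRule
}

// SetAutomationRule mirrors the RPC from the prompt: it only stores the rule.
func (s *RuleStore) SetAutomationRule(r AutomationRule) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.rules = append(s.rules, r)
}

// Evaluate returns the actions triggered by one reading. Only temperature
// readings can trigger these rules; other oneof cases are ignored.
func (s *RuleStore) Evaluate(r SensorReading) []ControlAction {
	t, ok := r.Data.(Temperature)
	if !ok {
		return nil
	}
	s.mu.RLock()
	defer s.mu.RUnlock()
	var actions []ControlAction
	for _, rule := range s.rules {
		if rule.SensorID == r.DeviceID && t.Celsius > rule.ThresholdC {
			actions = append(actions, ControlAction{DeviceID: rule.TargetID, Action: rule.Action})
		}
	}
	return actions
}

func main() {
	var store RuleStore
	store.SetAutomationRule(AutomationRule{
		SensorID: "thermo-1", ThresholdC: 25, TargetID: "light-living-room", Action: "turn_on",
	})
	now := time.Now()
	fmt.Println(store.Evaluate(SensorReading{DeviceID: "thermo-1", Timestamp: now, Data: Temperature{Celsius: 26.5}}))
	fmt.Println(store.Evaluate(SensorReading{DeviceID: "thermo-1", Timestamp: now, Data: Temperature{Celsius: 25}}))
	fmt.Println(store.Evaluate(SensorReading{DeviceID: "lock-front", Timestamp: now, Data: DoorStatus{Open: true}}))
}
```

The strict `>` comparison follows the prompt’s “above 25 degrees Celsius”, so a reading of exactly 25 does not turn on the light.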

This example showcases a collaborative workflow where the developer guides the high-level architecture while the AI handles the meticulous and time-consuming implementation details. By making sophisticated software development more accessible, this synergy is also reaching niche areas like pet tech and gardening or farming gadgets.

Implications for the Tech Industry and Developer Roles

The widespread adoption of these powerful AI assistants will have profound and lasting effects on the technology industry. It’s not just about writing code faster; it’s about changing what we build, how we build it, and what skills are most valuable for a software engineer. The impact will be felt everywhere, from new user experiences in audio devices and smart speakers to life-changing accessibility technologies.

Shifting Skillsets and a Focus on Architecture

As AI handles more of the low-level coding, the human developer’s role will move toward higher-level work. The emphasis will shift from writing perfect syntax to high-level problem-solving, system design, and product vision. Developers will spend less time on boilerplate and more time on:

- Architectural Design: Defining the boundaries of microservices, choosing the right database technology, and designing resilient, scalable systems.

- Prompt Engineering: The ability to articulate complex requirements to an AI in a clear, unambiguous way will become a critical skill.

- Critical Code Review and Validation: AI-generated code is not infallible. It can introduce subtle bugs, security flaws, or performance bottlenecks. The developer’s role as a critical reviewer and quality gatekeeper becomes more important than ever, especially for mission-critical systems such as monitoring devices.

- Product and Business Logic: Understanding the user’s needs and translating them into technical requirements remains a uniquely human skill. Developers will be expected to contribute more to product strategy.

This evolution will also affect education. Curricula will need to adapt, focusing more on computer science fundamentals, design patterns, and critical thinking than on rote memorization of language syntax. The impact extends to creative fields, with a surge of interest in AI tools for creators, and even to fashion and wearable tech.

Accelerating Innovation Across All Sectors

By lowering the barrier to creating complex software, these AI assistants will act as a catalyst for innovation. Startups and small teams can now build sophisticated products that would have previously required a large engineering department. We can expect to see an explosion of new applications in areas like:

- Personalized Health and Wellness: Development of apps for fitness devices and sleep and wellness gadgets will accelerate, offering more sophisticated, data-driven insights.

- Smarter Consumer Gadgets: From kitchen gadgets and smart appliances to robot vacuums, the intelligence embedded in our everyday devices will grow rapidly.

- Enhanced Entertainment: Gaming and virtual reality will become more immersive and complex, powered by AI-assisted content creation.

- Specialized B2B Tools: Industries like sports and travel will see a new wave of custom software tailored to their unique needs.

Physical devices are becoming smarter too. The software controlling AI-enabled cameras and computer vision systems is becoming more advanced, thanks to the ability to rapidly prototype and deploy complex algorithms. The same applies to emerging fields like neural interfaces, where software is the critical link between biology and technology.

Best Practices and Navigating the Future

To harness the full potential of these next-generation AI coding assistants, developers and organizations must adopt a strategic approach. Simply dropping the tool into an existing workflow without changing processes will yield suboptimal results. It requires a new mindset—one of collaboration, verification, and continuous learning.

Recommendations for Developers

- Treat it as a Pair Programmer: The AI is your co-pilot, not your autopilot. Engage it in a dialogue. Ask it to explain its code, suggest alternatives, and debate the trade-offs of different approaches. Don’t blindly accept its first suggestion.

- Master the Art of the Prompt: The quality of the output depends heavily on the quality of your input. Be specific. Provide context, examples, and constraints. Break down large problems into smaller, manageable requests.

- Always Verify and Test: Never commit AI-generated code without thoroughly reviewing, understanding, and testing it. The responsibility for the code’s quality, security, and performance ultimately lies with you, the human developer.

- Focus on High-Level Skills: Double down on learning system design, software architecture, and domain-specific knowledge. These are the areas where your human expertise provides the most value.

Organizational Considerations

For businesses and team leads, integrating these tools requires careful planning. It’s essential to establish clear code review standards for AI-generated code. Security teams must be involved to assess the risks of using third-party models, which may have been trained on insecure code and may reproduce its flaws. Investing in training developers to use these tools effectively is also crucial.

The rise of these assistants is a watershed moment. It promises to amplify developer productivity to an unprecedented degree, but it also demands a new level of diligence and a shift in focus. The most successful engineers will be those who embrace these tools as powerful collaborators, using them to offload tedious work and free up their cognitive bandwidth for the creative, architectural challenges that define great software.

Conclusion

We are standing at the threshold of a new era in software development. The emergence of AI assistants with a deep, architectural understanding of code is not just an incremental improvement—it is a paradigm shift. These tools are transforming the developer’s role from a line-by-line coder to a high-level architect, problem-solver, and system orchestrator. By automating boilerplate, accelerating debugging, and even scaffolding entire applications, they are poised to unlock a new wave of innovation across every industry imaginable. However, this powerful new capability comes with a responsibility. The future of software engineering will be defined by a symbiotic partnership between human creativity and artificial intelligence, where the most valuable skill is not just the ability to write code, but the wisdom to guide, validate, and shape the powerful outputs of our new AI collaborators.