The world of artificial intelligence is undergoing a profound transformation. For years, the immense computational power required for AI was confined to the cloud, with data being sent to massive server farms for processing. Today, a paradigm shift is well underway, moving intelligence from the centralized cloud to the decentralized “edge”—the myriad of devices we interact with daily. This migration is not just a trend; it’s a revolution fueled by the emergence of powerful, compact, and energy-efficient hardware. At the forefront of this movement are all-in-one AI edge development kits, platforms that are democratizing access to sophisticated AI capabilities and dramatically accelerating the pace of innovation across countless industries. These integrated kits are no longer just for researchers; they are becoming the go-to tools for engineers, startups, and creators looking to build the next wave of intelligent products, from smart home hubs to industrial monitoring systems.

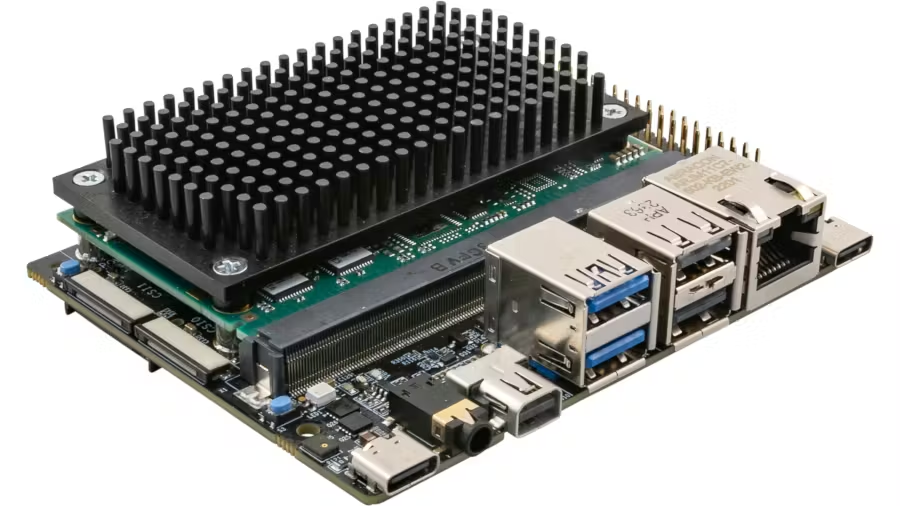

The Anatomy of a Modern Edge AI Platform

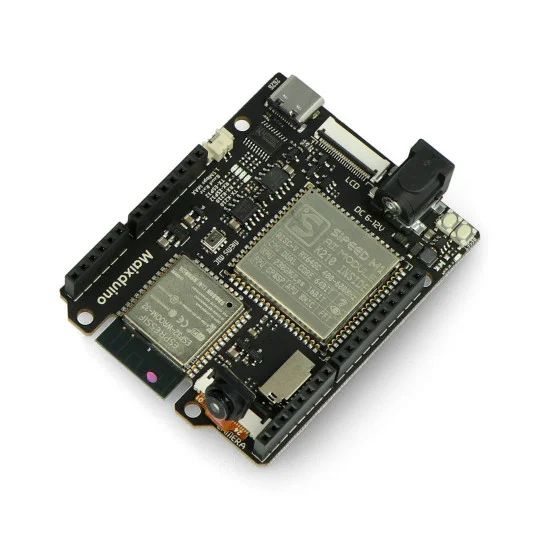

The latest advances in AI edge devices center on integration. Instead of forcing developers to piece together a microcontroller, a camera module, a microphone, and connectivity boards, new all-in-one platforms provide a cohesive, ready-to-use solution. This not only simplifies prototyping but also ensures that the hardware components are optimized to work together. Understanding the core elements of these kits is key to appreciating their transformative potential.

The Brains: MCU with an Integrated Neural Processing Unit (NPU)

At the heart of these new devices is a System-on-Chip (SoC) that combines a traditional microcontroller unit (MCU) with a dedicated Neural Processing Unit (NPU). The MCU handles general-purpose tasks, real-time control, and system management, while the NPU is a hardware accelerator designed specifically for running machine learning models. Unlike a general-purpose CPU or even a GPU, an NPU’s architecture is optimized for the mathematical operations at the core of AI, such as matrix multiplications and convolutions. The result is a large speedup on AI workloads at a fraction of the power. Performance is usually quoted in GOPS (giga operations per second) or TOPS (tera operations per second), and modern edge MCUs can now deliver hundreds of GOPS, a figure once reserved for much larger processors. The same MCU-plus-NPU fusion is also driving the latest AI-enabled phones and mobile devices and the next generation of AI companion devices.
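
To make GOPS figures concrete, the sketch below estimates how much throughput a model needs at a given frame rate and how long one inference might take on an NPU. It assumes the common convention that one multiply-accumulate (MAC) counts as two operations, and it applies a hypothetical `utilization` factor because NPUs rarely sustain their peak rating. The 300-million-MAC model and the 200-GOPS NPU are illustrative numbers, not the specs of any particular kit.

```python
def required_gops(macs_per_inference: float, frames_per_second: float) -> float:
    """Throughput (GOPS) needed to run a model at a target frame rate.

    Assumes the common convention that one multiply-accumulate (MAC) = 2 ops.
    """
    ops_per_inference = 2 * macs_per_inference
    return ops_per_inference * frames_per_second / 1e9


def inference_latency_ms(macs_per_inference: float, npu_gops: float,
                         utilization: float = 0.5) -> float:
    """Estimated latency on an NPU with a peak rating of `npu_gops`.

    `utilization` is a hypothetical fraction of peak actually sustained;
    real values depend on the model's layers and the vendor toolchain.
    """
    effective_ops_per_s = npu_gops * 1e9 * utilization
    return 2 * macs_per_inference / effective_ops_per_s * 1e3


# Illustrative model: 300 million MACs per frame.
macs = 300e6
print(f"needed at 30 fps: {required_gops(macs, 30):.0f} GOPS")              # 18 GOPS
print(f"latency on a 200-GOPS NPU: {inference_latency_ms(macs, 200):.1f} ms")  # 6.0 ms
```

The gap between "18 GOPS needed" and "200 GOPS peak" is deliberate headroom: it absorbs low utilization on layers the NPU handles poorly and leaves time for pre- and post-processing on the MCU.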

The Senses: Advanced Vision and Audio Sensors

An AI is only as good as the data it receives. Recognizing this, modern development kits integrate high-fidelity sensors directly onto the board. A key highlight in recent AI camera and vision hardware is the inclusion of global shutter cameras. Unlike the rolling shutter cameras common in smartphones, which can cause distortion and “jello” effects when capturing fast-moving objects, a global shutter captures the entire image frame at once. This is essential for precision-critical applications like industrial robotics, high-speed barcode scanning, and real-time object tracking on drones. On the audio front, high-quality microphone arrays are becoming standard, enabling voice command recognition, sound source localization, and noise cancellation, and driving innovation in AI audio devices, smart speakers, and AI assistants.
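
Sound source localization with a microphone array is commonly built on time difference of arrival (TDOA). The sketch below assumes a two-microphone array with known spacing and a far-field source: it finds the lag that maximizes the cross-correlation of the two channels, then converts that lag to an arrival angle with sin(θ) = c·Δt / d. The sample rate, 10 cm spacing, and synthetic noise signal are illustrative assumptions; production systems typically use more robust variants such as GCC-PHAT and more than two microphones.

```python
import math
import random
from typing import Sequence


def best_lag(ref: Sequence[float], other: Sequence[float], max_lag: int) -> int:
    """Lag (in samples) by which `other` trails `ref`, found by brute-force
    cross-correlation over lags in [-max_lag, max_lag]. Assumes equal lengths."""
    n = len(ref)
    best, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        # Sum ref[i] * other[i + lag] over the overlapping region only.
        score = sum(ref[i] * other[i + lag]
                    for i in range(max(0, -lag), min(n, n - lag)))
        if score > best_score:
            best, best_score = lag, score
    return best


def arrival_angle_deg(lag_samples: int, sample_rate: float, mic_spacing_m: float,
                      speed_of_sound: float = 343.0) -> float:
    """Far-field arrival angle from broadside, using sin(theta) = c * dt / d.
    Positive angles mean the sound reached the reference mic first."""
    dt = lag_samples / sample_rate
    s = max(-1.0, min(1.0, speed_of_sound * dt / mic_spacing_m))  # clamp for noise
    return math.degrees(math.asin(s))


# Synthetic test: broadband noise that reaches mic B 3 samples after mic A.
random.seed(0)
fs, spacing, delay = 16_000, 0.10, 3
source = [random.uniform(-1.0, 1.0) for _ in range(1000)]
mic_a = source[delay:]    # mic_a[i] = source[i + delay]
mic_b = source[:-delay]   # mic_b[i + delay] = mic_a[i], so B trails A
max_lag = int(spacing / 343.0 * fs)  # largest physically possible lag: 4 samples
lag = best_lag(mic_a, mic_b, max_lag)
print(lag, f"{arrival_angle_deg(lag, fs, spacing):.1f} deg")  # 3 40.0 deg
```

Limiting the search to physically possible lags (spacing divided by the speed of sound) keeps the search cheap enough for an MCU and rules out spurious peaks.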

The Reflexes: Ultra-Low-Power Co-Processing

One of the biggest challenges in battery-powered edge devices is enabling “always-on” sensing without draining the battery. The solution lies in intelligent co-processing. A groundbreaking technology appearing in new sensors is the In-Sensor Processing Unit (ISPU): a tiny, programmable processor embedded directly within the sensor, for example an Inertial Measurement Unit (IMU). It can run highly optimized machine learning algorithms, such as gesture recognition or activity detection, using minuscule amounts of power. The main MCU can remain in deep sleep and wake up only when the ISPU detects a significant event. This architecture is a game-changer for wearables, AI fitness devices, and health and bio-sensing gadgets, enabling devices that continuously monitor for gestures or falls while lasting weeks on a single charge.
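
The wake-on-event pattern can be sketched as a toy simulation: a cheap detector sees every sample (standing in for code on the ISPU), and an expensive handler (standing in for the main MCU) runs only when the detector fires. The `GatedPipeline` class, the 2.5 g threshold, and the sample stream are hypothetical; on real hardware the ISPU runs vendor-compiled code and signals the MCU through an interrupt pin rather than a Python callback.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class GatedPipeline:
    """Toy model of in-sensor gating (hypothetical, for illustration only)."""
    detector: Callable[[float], bool]   # runs "in-sensor" on every sample
    handler: Callable[[float], str]     # runs on the "main MCU" after a wake-up
    wakeups: int = 0

    def run(self, samples: Iterable[float]) -> List[str]:
        results = []
        for s in samples:
            if self.detector(s):        # decision made while the MCU sleeps
                self.wakeups += 1       # interrupt would wake the MCU here
                results.append(self.handler(s))
        return results


# Illustrative stream of acceleration magnitudes in g: mostly at rest (~1 g).
stream = [1.0] * 995 + [2.8, 3.1, 1.0, 1.0, 2.6]
pipeline = GatedPipeline(detector=lambda g: g > 2.5,
                         handler=lambda g: f"event at {g:.1f} g")
events = pipeline.run(stream)
print(pipeline.wakeups, "wake-ups for", len(stream), "samples")  # 3 wake-ups for 1000 samples
```

The MCU does work for 3 samples out of 1,000; everything else is filtered where the data originates, which is where the power savings come from.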

A Deeper Dive: Unpacking the Core Technologies

To truly grasp the impact of these all-in-one kits, it’s essential to look beyond the spec sheet and understand the underlying technology. The synergy between the NPU, advanced sensors, and low-power co-processors creates a platform that is far greater than the sum of its parts, paving the way for applications previously thought impossible on a compact, low-cost device.

Demystifying the NPU: Efficient AI Acceleration

What makes an NPU so effective for AI? It’s all about specialized design. While a CPU is a jack-of-all-trades, an NPU is a master of one: parallel computation for neural networks. It contains a large array of multiply-accumulate (MAC) units that can perform thousands of calculations simultaneously. NPUs are also designed to work efficiently with quantized models. Quantization converts a model’s parameters (weights), and often its activations, from 32-bit floating-point numbers to lower-precision integers, typically 8-bit. Because each weight shrinks from four bytes to one, the model’s size and memory footprint drop by roughly 4x, making it feasible to run on a resource-constrained MCU. The software toolchains provided with these kits often automate this optimization, making it accessible even to developers who aren’t experts in model compression. This efficiency is what allows complex vision models to run locally, fueling the latest AI security gadgets and smarter personal robots.
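
A minimal sketch of the idea, assuming symmetric per-tensor INT8 quantization (one scale per tensor, no zero point). Production toolchains often use per-channel scales and nonzero zero points, so treat this as an illustration of the principle rather than any toolchain’s exact scheme. The `int8_dot` function mirrors what a MAC array does: multiply integers, accumulate into a wide integer, and rescale once at the end.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # guard against an all-zero tensor
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [scale * v for v in q]


def int8_dot(qa: List[int], qb: List[int], scale_a: float, scale_b: float) -> float:
    """Dot product the way a MAC array computes it: integer multiply-accumulate
    into a wide accumulator, then a single floating-point rescale at the end."""
    acc = 0
    for a, b in zip(qa, qb):
        acc += a * b  # one multiply-accumulate (MAC)
    return acc * scale_a * scale_b


weights = [0.50, -1.27, 0.02, 0.90]
inputs = [1.0, 0.5, -2.0, 0.25]
qw, sw = quantize_int8(weights)
qx, sx = quantize_int8(inputs)
max_err = max(abs(w - d) for w, d in zip(weights, dequantize(qw, sw)))
print(f"{4 * len(weights)} bytes as FP32 -> {len(qw)} bytes as INT8")
print(f"max weight error {max_err:.4f} (bound: scale/2 = {sw / 2:.4f})")
print(f"float dot {sum(w * x for w, x in zip(weights, inputs)):.4f}, "
      f"int8 dot {int8_dot(qw, qx, sw, sx):.4f}")
```

Rounding to the nearest integer step bounds each weight’s error by half the scale, which is why models with a narrow weight range usually quantize with little accuracy loss.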

Global Shutter vs. Rolling Shutter: A Critical Choice for AI Vision

The choice of image sensor can make or break a computer vision application. A rolling shutter sensor scans an image line by line, from top to bottom. If an object moves quickly during this scan, the resulting image appears skewed or warped. Imagine trying to read a barcode on a fast-moving conveyor belt or track a drone in flight; a rolling shutter can produce unusable data. A global shutter, however, exposes all pixels simultaneously, capturing a snapshot free of rolling-shutter skew. This fidelity is crucial for any application requiring metric accuracy or the analysis of dynamic scenes. It is a major topic in robotics, because it allows robots to perceive and interact with their environment more reliably. It also matters for AI sports gadgets, where analyzing the form of a fast-moving athlete requires clean, unwarped frames.
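
The size of rolling-shutter skew follows from simple arithmetic: rows that are N lines apart are exposed N line-times apart, so a horizontally moving object shifts by its speed multiplied by that delay. The sketch below assumes constant horizontal motion; the 2,000 px/s speed, 20 µs line time, and 400-row object height are illustrative values, not the figures of any specific sensor.

```python
def rolling_shutter_skew_px(speed_px_per_s: float, line_time_us: float,
                            rows_spanned: int) -> float:
    """Horizontal shift between the top and bottom rows of a moving object.

    A rolling shutter starts each row `line_time_us` microseconds after the
    previous one, so rows `rows_spanned` apart sample the scene at different
    instants. A global shutter exposes every row at once, so its skew is zero.
    """
    delay_s = rows_spanned * line_time_us * 1e-6
    return speed_px_per_s * delay_s


# Illustrative values: an object moving 2,000 px/s across the frame,
# spanning 400 sensor rows, with a 20 µs line time.
skew = rolling_shutter_skew_px(2000.0, 20.0, 400)
print(f"skew: {skew:.1f} px")  # skew: 16.0 px
```

A 16-pixel shear turns rectangles into parallelograms and shifts the bottom of the object relative to its top, which corrupts exactly the geometric measurements that robotics and inspection systems depend on.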

The Power of In-Sensor Processing for an Always-On World

The concept of processing data at the source is a cornerstone of efficient edge computing, and the ISPU in an IMU is a prime example. Consider an accessibility application such as a wearable that detects when an elderly person has fallen. A traditional approach would require the main MCU to constantly poll the accelerometer, consuming significant power. With an ISPU, a highly optimized fall-detection algorithm runs directly on the sensor itself. The sensor sips microwatts of power, and the main processor activates only when a fall is detected. The same principle applies to gesture controls for AR/VR gadgets, keyword spotting for voice assistants, and even predictive maintenance in industrial monitoring devices, where a sensor can listen for the acoustic signature of a failing bearing.
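
The kind of lightweight logic that fits on a sensor can be illustrated with a classic two-stage fall heuristic: a brief near-weightless phase (free fall) followed shortly by a sharp impact spike. This Python version only illustrates the logic; real ISPU code is typically written in C with the vendor’s tools, and the thresholds and window below are hypothetical values that would need tuning on real data. Production detectors often add trained models or checks such as post-impact inactivity.

```python
import math
from typing import Iterable, Optional, Tuple

FREE_FALL_G = 0.4      # hypothetical: near-weightless during a fall
IMPACT_G = 2.5         # hypothetical: sharp spike on hitting the ground
MAX_GAP_SAMPLES = 50   # hypothetical: impact must follow free fall this quickly


def magnitude(sample: Tuple[float, float, float]) -> float:
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def detect_fall(samples: Iterable[Tuple[float, float, float]]) -> int:
    """Index of the impact sample of the first detected fall, or -1 if none."""
    last_free_fall: Optional[int] = None
    for i, s in enumerate(samples):
        g = magnitude(s)
        if g < FREE_FALL_G:
            last_free_fall = i  # remember the most recent free-fall sample
        elif (g > IMPACT_G and last_free_fall is not None
              and i - last_free_fall <= MAX_GAP_SAMPLES):
            return i            # impact soon after free fall: report a fall
    return -1


rest = [(0.0, 0.0, 1.0)] * 100                 # at rest, gravity only
fall = rest + [(0.0, 0.0, 0.1)] * 10 + [(0.5, 0.3, 3.0)] + rest
bump = rest + [(0.0, 0.0, 3.0)] + rest         # a knock with no free fall
print(detect_fall(fall), detect_fall(bump))    # 110 -1
```

Requiring both stages is what keeps false alarms down: a knock on a table produces an impact spike but no preceding free fall.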

Revolutionizing Industries: Real-World Applications and Impact

The accessibility and power of these integrated development platforms are catalyzing innovation across a vast spectrum of industries. They lower the barrier to entry for creating sophisticated AI-powered products, enabling rapid prototyping and deployment of intelligent solutions.

The Intelligent Home, Office, and City

In our living and working spaces, these kits are the engines behind the latest smart home AI products. They can power a new generation of smart appliances, like a refrigerator with a camera that identifies groceries and suggests recipes. In the office, they enable smart conferencing systems that automatically track who is speaking. On a larger scale, these platforms are vital for smart city and infrastructure applications, enabling traffic lights that adapt to real-time conditions or waste bins that signal when they are full. From AI kitchen gadgets to AI lighting, the potential for smarter, more responsive environments is immense.

Personal Technology and Wellness

The personal device market is being reshaped by on-device AI. In pet tech, smart feeders use vision to dispense food only to a specific pet. In wellness, sleep-tracking gadgets use low-power sensors to monitor sleep stages without a bulky, power-hungry main processor. The trend extends to fashion and wearable tech, with concepts for clothing that can analyze posture, and even to AI toys, which can recognize and react to a child’s facial expressions.

Industrial, Agricultural, and Scientific Frontiers

In more demanding environments, these rugged, all-in-one solutions are proving invaluable. They are central to advances in AI-assisted gardening and farming, where drones equipped with vision systems can identify pests or assess crop health. For researchers, these kits are accelerating AI research and prototyping, including the development of new neural interfaces. In the industrial sector, they enable on-the-fly quality control: AI camera systems can spot microscopic defects on a production line, a task that previously required expensive, specialized equipment.

Developer’s Guide: Best Practices and Recommendations

While these platforms make edge AI more accessible, success is not guaranteed. Developers and organizations must approach projects with a clear strategy, keeping in mind both the opportunities and the challenges.

Choosing the Right Platform

Selecting the right development kit is the first critical step. Consider the entire ecosystem, not just the hardware specs. How good is the documentation? Is there an active community? What software libraries and pre-trained models are provided? The NPU’s processing power (GOPS/TOPS) should match your application’s needs: a simple keyword-spotting model requires far less compute than a real-time object detection model. Finally, evaluate the integrated sensors and peripherals. Does the kit have the specific camera, microphone, and connectivity options your project demands?
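
The checklist above can be made explicit as a small filter over candidate kits. Everything here is hypothetical: the kit names, their GOPS ratings and sensor lists, the per-application GOPS figures, and the 2x headroom factor (a margin for the gap between peak and sustained throughput) are placeholders to replace with real datasheet values and your own measurements. Ecosystem factors such as documentation and community still need human judgment.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Kit:
    name: str
    npu_gops: float                       # peak rating from the datasheet
    sensors: Set[str] = field(default_factory=set)


@dataclass
class Requirements:
    needed_gops: float
    sensors: Set[str]
    headroom: float = 2.0                 # assumed margin: peak vs. sustained


def suitable_kits(kits: List[Kit], req: Requirements) -> List[str]:
    """Names of kits with enough compute headroom and every required sensor."""
    return [k.name for k in kits
            if k.npu_gops >= req.needed_gops * req.headroom
            and req.sensors <= k.sensors]  # subset check: all sensors present


# Hypothetical catalog and requirements, for illustration only.
kits = [
    Kit("kit-a", 50, {"mic"}),
    Kit("kit-b", 250, {"global_shutter_camera", "mic", "imu"}),
    Kit("kit-c", 600, {"rolling_shutter_camera", "mic"}),
]
keyword_spotting = Requirements(needed_gops=0.1, sensors={"mic"})
object_detection = Requirements(needed_gops=18, sensors={"global_shutter_camera"})
print(suitable_kits(kits, keyword_spotting))   # ['kit-a', 'kit-b', 'kit-c']
print(suitable_kits(kits, object_detection))   # ['kit-b']
```

Note that the most powerful kit is rejected for object detection: raw TOPS cannot compensate for the wrong sensor.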

The Software Challenge: Model Optimization is Key

Getting a trained AI model to run efficiently on an MCU is a significant engineering challenge. New AI tools for creators are constantly emerging to simplify this process, but developers must still become familiar with techniques like quantization (reducing model precision from FP32 to INT8) and pruning (removing unnecessary connections in the neural network). Using a framework such as TensorFlow Lite for Microcontrollers or a vendor-specific inference engine is essential. Always start with a simple, proven model from the provider’s model zoo before attempting to deploy a complex, custom-built one.
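
Pruning can be illustrated with unstructured magnitude pruning, one common technique: zero out the fraction of weights with the smallest absolute values. This is a minimal sketch on a flat list of weights. Real frameworks usually prune gradually during fine-tuning so the network can recover accuracy, and they operate on whole tensors rather than Python lists.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by absolute value; the first n_prune are removed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


w = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.4, -0.9]
print(magnitude_prune(w, 0.5))  # [0.8, 0.0, 0.0, 0.0, -0.6, 0.0, 0.4, -0.9]
```

Zeros save memory or time only if the storage format or inference engine exploits sparsity. On MCUs and NPUs without sparse support, structured pruning (removing whole channels or filters) is often the more practical choice, because it shrinks the dense tensors themselves.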

Common Pitfalls to Avoid

A common mistake is underestimating the total system power consumption. While the MCU/NPU may be efficient, peripherals like a large touchscreen or a constantly active Wi-Fi radio can quickly drain a battery. Another pitfall is ignoring the data pipeline. The fastest NPU in the world is useless if the camera can’t feed it frames quickly enough. Finally, don’t overlook the learning curve. While these kits are “all-in-one,” they are still complex systems that require time to master. Budget for learning and experimentation.
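
A back-of-the-envelope power budget makes the peripheral problem visible. The sketch weights each component’s active current by the fraction of time it is active and divides battery capacity by the total. All currents, duty cycles, and the 500 mAh capacity are hypothetical, and the model deliberately ignores sleep currents, regulator losses, and battery derating, all of which shorten real battery life.

```python
from typing import Dict, Tuple

# Each load: (current while active in mA, fraction of time active).
Load = Tuple[float, float]


def average_current_ma(loads: Dict[str, Load]) -> float:
    """Duty-cycle-weighted average current draw of the whole system."""
    return sum(current_ma * duty for current_ma, duty in loads.values())


def battery_life_hours(capacity_mah: float, avg_current_ma: float) -> float:
    return capacity_mah / avg_current_ma


# Hypothetical budgets for a 500 mAh wearable (illustrative numbers only).
bursty_radio = {
    "mcu_npu": (30.0, 0.02),   # awake 2% of the time, gated by the sensor
    "imu_ispu": (0.05, 1.0),   # always-on in-sensor processing
    "wifi": (80.0, 0.01),      # short uplink bursts
}
always_on_radio = dict(bursty_radio, wifi=(80.0, 1.0))  # Wi-Fi never sleeps

for name, loads in [("bursty radio", bursty_radio),
                    ("always-on radio", always_on_radio)]:
    hours = battery_life_hours(500.0, average_current_ma(loads))
    print(f"{name}: {hours:.1f} h")
# bursty radio: 344.8 h
# always-on radio: 6.2 h
```

With identical MCU and sensor settings, leaving the radio on cuts runtime from about two weeks to a few hours, which is why the whole system, not just the NPU, has to be budgeted.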

Conclusion: A New Era of Ubiquitous Intelligence

The emergence of powerful, integrated, all-in-one AI edge development kits marks a pivotal moment in the evolution of artificial intelligence. By combining high-performance NPUs, advanced sensors, and ultra-low-power co-processors into a single, accessible package, these platforms are breaking down the barriers to innovation. They are empowering a new generation of developers to build smarter, more responsive, and more efficient products that can see, hear, and understand the world around them, all without a constant connection to the cloud. From the smart home to the factory floor, and from wearable wellness devices to autonomous robots, the impact of this technological convergence is just beginning to be felt. This is more than just an incremental update; it is the democratization of edge AI, heralding a future where intelligence is truly all around us.