For decades, technology has helped level the playing field, offering tools that bridge gaps and open doors for individuals with disabilities. From the simplicity of a screen reader to the complexity of a motorized wheelchair, these innovations have been crucial. We are now on the cusp of a more profound shift, one driven by Artificial Intelligence. AI is moving assistive technology from passive assistance to proactive empowerment, so that technology doesn’t just accommodate a disability but actively enhances a person’s ability to interact with the world on their own terms. This evolution is not merely an incremental improvement; it represents a fundamental rethinking of what accessibility means. The latest AI for Accessibility Devices News is filled with breakthroughs that are making technology a true partner in daily life, capable of seeing, hearing, and interpreting the world in ways that offer new levels of independence and inclusion.

The Convergence of AI and Assistive Technology

The journey of assistive technology has been one of steady progress, but the integration of AI marks a significant inflection point. Traditional aids, while invaluable, are often static. A magnifying glass enlarges text, but it cannot read it aloud or summarize its meaning. A hearing aid amplifies sound, but it may struggle to isolate a single voice in a crowded room. AI changes this dynamic by introducing intelligence, context-awareness, and adaptability into the equation. These are not just tools anymore; they are becoming dynamic partners.

From Static Tools to Dynamic Partners

The core difference lies in the ability of AI-powered devices to interpret data and make decisions in real time. An AI-enabled camera doesn’t just capture an image; it interprets the scene, identifies objects, recognizes faces, and translates text. This cognitive layer transforms a simple sensor into a powerful sensory extension for the user. This shift is evident across all categories of assistive tech, creating a more seamless and intuitive user experience. The latest Wearables News, for instance, is increasingly focused on smart devices that not only track health but also provide real-time environmental cues and safety alerts for users with sensory or cognitive disabilities.

Key Areas of AI Impact

The application of AI in accessibility is broad and deep, touching nearly every aspect of daily living. Several key areas are experiencing rapid innovation:

- Sensory Augmentation: For individuals with visual or hearing impairments, AI is a game-changer. As seen in AI-enabled Cameras & Vision News, computer vision algorithms can now describe a user’s surroundings, read documents, and even guide them in taking a perfectly framed photograph. Similarly, the latest AI Audio / Speakers News highlights smart hearing aids and devices that use machine learning to filter out background noise and enhance speech clarity with remarkable precision.

- Enhanced Mobility & Navigation: Independence is often synonymous with the freedom to move. The worlds of Robotics News and Autonomous Vehicles News are converging to create intelligent mobility aids. This includes smart canes that use LiDAR and AI to detect obstacles above ground level, AI-powered navigation apps that provide detailed audio instructions for pedestrians with visual impairments, and advanced wheelchairs that can autonomously navigate complex indoor environments.

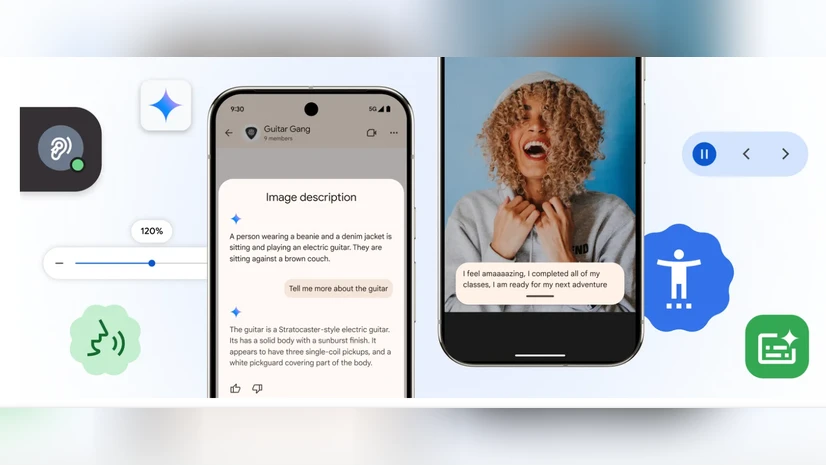

- Communication and Social Interaction: AI is breaking down communication barriers. Real-time speech-to-text transcription helps individuals who are deaf or hard of hearing participate in conversations. Text-to-speech has become incredibly natural and expressive, giving a voice to those who cannot speak. Furthermore, AI Assistants News showcases how voice-activated assistants can help users with dexterity challenges compose emails, make calls, and manage their schedules effortlessly.

- Independent Living: The rise of the intelligent home environment is a major boon for accessibility. According to Smart Home AI News, AI allows for the seamless integration of various devices. A person with limited mobility can use voice commands to control lighting, climate, and security systems. Smart Appliances News reports on ovens and microwaves that can be operated via a smartphone app, providing greater independence in the kitchen.

The Technological Pillars of AI-Powered Accessibility

The remarkable capabilities of modern accessibility devices are built upon a foundation of several core AI technologies. Understanding these pillars is key to appreciating both their current power and future potential. These systems work in concert, creating a sophisticated network of perception, processing, and action that allows a device to intelligently assist its user.

Computer Vision: The Eyes of the Machine

Computer vision is arguably one of the most impactful AI fields for accessibility, particularly for individuals with visual impairments. It grants devices the ability to “see” and interpret the visual world. This involves several complex tasks:

- Object and Scene Recognition: AI models can identify everyday objects, from a set of keys on a table to a specific brand of cereal on a grocery store shelf. More advanced systems can provide a full “scene description,” narrating the environment to the user (e.g., “A park with two people sitting on a bench near a fountain”). The latest AI Cameras News is dominated by these advancements.

- Optical Character Recognition (OCR): Modern OCR goes beyond simple text scanning. It can read text from challenging surfaces like crumpled paper, product labels, or restaurant menus and present it in an accessible format, often reading it aloud.

- Facial Recognition: Devices can be trained to recognize the faces of friends and family, announcing who has just entered the room. This adds a crucial social dimension to interactions.

A prime example of this technology in action can be found in the latest AI Phone & Mobile Devices News. New camera features on smartphones use AI to provide real-time audio and haptic feedback that helps a user with low vision position their phone to capture a selfie or a photo of a document, ensuring the subject is centered and in focus. Similarly, Smart Glasses News frequently covers wearables that use a tiny camera to read signs, menus, and mail to the wearer in real time.

Natural Language Processing (NLP): The Voice and Ears

If computer vision provides the eyes, Natural Language Processing (NLP) provides the voice and ears, enabling fluid communication between the user and the device. This technology encompasses both understanding human language and generating it.

- Speech Recognition: This allows devices to accurately transcribe spoken words into text. Its application ranges from voice commands for controlling a smart home to real-time captioning of conversations, lectures, and media.

- Text-to-Speech (TTS): Modern TTS engines use deep learning to generate speech that is remarkably human-like, with natural intonation and emotion. This is critical for screen readers and communication devices.

- Natural Language Understanding (NLU): This is the “intelligence” part of an AI assistant. NLU allows a device to understand the intent behind a command, even if it’s phrased conversationally. For example, it can infer that “I’m cold” is a request to turn up the thermostat. The progress in AI Companion Devices News is largely driven by improvements in NLU.
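The “I’m cold” example can be sketched as a lookup from conversational phrases to device actions. This is only an illustration: production NLU relies on trained language models, not keyword matching, and the intent names, trigger phrases, and action strings below are all hypothetical.

```python
import re
from typing import Optional

# Hypothetical intent table: trigger phrases mapped to a device action.
INTENT_RULES = {
    "raise_temperature": (["i'm cold", "i am cold", "it's freezing"], "thermostat.increase"),
    "lower_temperature": (["i'm hot", "i am hot", "too warm"], "thermostat.decrease"),
    "lights_on": (["it's dark", "can't see", "turn on the light"], "lights.on"),
}


def normalize(utterance: str) -> str:
    """Lowercase, convert curly apostrophes to straight ones, collapse whitespace."""
    text = utterance.lower().replace("\u2019", "'")
    return re.sub(r"\s+", " ", text).strip()


def resolve_intent(utterance: str) -> Optional[str]:
    """Return the action of the first intent whose phrase appears in the utterance."""
    text = normalize(utterance)
    for phrases, action in INTENT_RULES.values():
        if any(phrase in text for phrase in phrases):
            return action
    return None  # no intent recognized; a real assistant might ask a follow-up question
```

The point of the sketch is the indirection: the user states a condition, and the system maps it to an action instead of requiring an exact command such as “set thermostat to 22 degrees.”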

Machine Learning and Edge Computing: The Brains

Machine learning (ML) is the engine that powers these systems, allowing them to learn from vast amounts of data without being explicitly programmed. An ML model for fall detection, for example, is trained on data from thousands of hours of movement to recognize the specific patterns associated with a fall. The latest AI Sensors & IoT News highlights how these ML models are being embedded into smaller and more efficient sensors.
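To make the fall-detection idea concrete, here is a minimal sketch that uses a hand-written threshold heuristic in place of a trained model: it looks for a brief near-free-fall reading followed shortly by an impact spike in accelerometer data. The threshold values and the `detect_fall` function are assumptions chosen for illustration; a learned model would replace these fixed rules with patterns derived from recorded movement data.

```python
import math
from typing import Optional, Sequence, Tuple

G = 9.81  # standard gravity in m/s^2

Sample = Tuple[float, float, float]  # accelerometer reading (x, y, z) in m/s^2


def magnitude(sample: Sample) -> float:
    """Euclidean norm of a three-axis accelerometer reading."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def detect_fall(samples: Sequence[Sample],
                free_fall_g: float = 0.4,   # assumed: below this, the body is near free fall
                impact_g: float = 2.5,      # assumed: above this, treat as an impact
                max_gap: int = 25) -> bool:
    """Return True if a free-fall reading is followed by an impact within max_gap samples."""
    last_free_fall: Optional[int] = None
    for i, sample in enumerate(samples):
        g_force = magnitude(sample) / G
        if g_force < free_fall_g:
            last_free_fall = i
        elif g_force > impact_g and last_free_fall is not None:
            if i - last_free_fall <= max_gap:
                return True
    return False
```

An impact with no preceding free-fall phase (setting a phone down hard, for example) is ignored, which is the kind of distinction a trained model learns from data instead of from fixed thresholds.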

Crucially, the trend is moving towards on-device processing, also known as edge computing. As covered in AI Edge Devices News, performing AI calculations directly on the device rather than sending data to the cloud has two major benefits for accessibility:

- Privacy: Sensitive data, such as images of a user’s home or private conversations, never leaves the device.

- Speed: Real-time applications, like obstacle avoidance for a smart cane, cannot tolerate the latency of a round-trip to the cloud. Edge AI provides the instantaneous response needed for safety and usability.
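The speed argument is easy to quantify with back-of-the-envelope arithmetic: the distance a person covers before an alert arrives is walking speed multiplied by latency. The walking speed and the two latency figures below are assumed round numbers, not measurements from any specific device or network.

```python
def distance_during_latency(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance covered (in metres) while waiting latency_ms for a response."""
    return speed_m_per_s * (latency_ms / 1000.0)


WALKING_SPEED = 1.4  # m/s, assumed typical walking pace

# Assumed, illustrative latencies for each processing location.
for label, latency_ms in [("on-device", 20.0), ("cloud round-trip", 300.0)]:
    travelled = distance_during_latency(WALKING_SPEED, latency_ms)
    print(f"{label}: {travelled:.2f} m travelled before an alert")
```

Under these assumptions the user moves about 3 cm before an on-device alert and about 42 cm before a cloud-based one, a difference that can decide whether a warning arrives before or after contact with an obstacle.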

From Concept to Reality: AI Accessibility in Action

The theoretical promise of AI is being realized in a growing number of practical, life-changing products and services. These tools are no longer confined to research labs; they are in the hands of users, fostering independence and enriching lives every day. The breadth of innovation is staggering, with dedicated solutions emerging for a wide spectrum of needs.

For Visual Impairments

Beyond the built-in features on smartphones, dedicated devices offer powerful, specialized support. Wearable cameras like the OrCam MyEye can clip onto a pair of glasses and, with a simple gesture, read text from any surface, recognize faces, and identify products. Mobile apps like Microsoft’s Seeing AI turn a standard smartphone into an all-in-one “talking camera” that can narrate the world. The future points towards even more immersive solutions, with AR/VR AI Gadgets News detailing prototypes of smart glasses that can highlight obstacles or magnify text directly in a user’s field of view.

For Hearing Impairments

Real-time captioning has become a standard feature on many platforms, transcribing everything from phone calls to video conferences. More advanced applications can identify non-speech sounds, providing visual alerts on a phone or wearable for a doorbell, a crying baby, or a smoke alarm. The latest AI Audio / Speakers News also covers hearing aids that use AI to create personalized hearing profiles, adapting in real time to different acoustic environments, such as a quiet library versus a noisy restaurant, to provide optimal clarity.
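As a rough illustration of environment-adaptive behavior, the sketch below measures the loudness of a short audio frame and picks a processing profile from it. Real hearing aids use far richer signals and learned classifiers; the profile names, level boundaries, and noise-reduction strengths here are hypothetical.

```python
import math
from typing import Sequence, Tuple

# Hypothetical profiles: (upper level bound in dBFS, name, noise-reduction strength 0..1).
PROFILES = [
    (-40.0, "quiet", 0.1),
    (-20.0, "conversation", 0.4),
    (0.0, "noisy", 0.8),
]


def rms_dbfs(frame: Sequence[float]) -> float:
    """RMS level of samples in [-1, 1], in decibels relative to full scale."""
    if not frame:
        return float("-inf")
    mean_square = sum(s * s for s in frame) / len(frame)
    if mean_square == 0.0:
        return float("-inf")
    return 10.0 * math.log10(mean_square)


def select_profile(frame: Sequence[float]) -> Tuple[str, float]:
    """Pick the first profile whose upper bound is at or above the frame's level."""
    level = rms_dbfs(frame)
    for upper_bound, name, strength in PROFILES:
        if level <= upper_bound:
            return name, strength
    return PROFILES[-1][1], PROFILES[-1][2]  # louder than full scale: use the strongest profile
```

Calling `select_profile` on each incoming frame would let a device shift between the “quiet library” and “noisy restaurant” cases described above, though a production system would smooth the decision over time to avoid rapid switching.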

For Mobility and Dexterity Challenges

The smart home is a powerful accessibility ecosystem. A user with quadriplegia can control their entire environment, from the smart bulbs covered in AI Lighting Gadgets News to the smart locks covered in AI Security Gadgets News, using only their voice or a sip-and-puff switch. In the realm of personal mobility, AI Personal Robots are evolving from simple cleaning devices (as seen in Robotics Vacuum News) to sophisticated assistants that can fetch items or provide physical support. Health & BioAI Gadgets News also points to AI-powered exoskeletons that can help individuals with paralysis to stand and walk.

For Cognitive and Learning Disabilities

AI’s ability to process information and present it in simplified, structured ways is a powerful tool for individuals with cognitive or learning disabilities and conditions such as autism, dyslexia, or dementia. AI Education Gadgets News highlights tools that can summarize complex articles into easy-to-read bullet points or adapt learning materials to an individual’s comprehension level. AI assistants can provide verbal reminders for medication or daily tasks, reducing cognitive load and anxiety. AI Toys & Entertainment Gadgets News even features social robots that use AI to help children with autism practice social cues and conversational skills in a safe, non-judgmental environment.

Navigating the Future: Opportunities and Obstacles

While the potential of AI in accessibility is immense, the path forward is not without its challenges. Realizing this potential requires a concerted effort from developers, policymakers, and users to navigate the ethical and practical hurdles. Thoughtful design and a commitment to inclusivity are paramount to ensuring these powerful technologies benefit everyone.

Common Pitfalls and Ethical Considerations

- Bias in AI: AI models are only as good as the data they are trained on. If a facial recognition system is trained primarily on data from one demographic, it may perform poorly for others, leading to exclusion and frustration.

- Privacy Concerns: Many accessibility devices, by their nature, must collect sensitive personal data. It is crucial to address how this data is stored, used, and protected. The push towards on-device processing covered in AI Edge Devices News is a direct response to these concerns, as it minimizes data exposure.

- The Digital Divide: The most advanced technologies often come with a high price tag, putting them out of reach for many who need them most. Furthermore, a reliable internet connection is often required, creating another barrier in underserved areas.

- Over-reliance and Autonomy: There is a fine line between empowering a user and creating dependency. Devices should be designed to augment a user’s skills and judgment, not replace them entirely.

Best Practices for Development

To avoid these pitfalls, developers in the accessibility space should adhere to a set of core principles:

- Inclusive Design (“Nothing About Us Without Us”): The most important principle is to involve people with disabilities throughout the entire development process, from initial concept to final testing. Their lived experiences provide invaluable insights that cannot be replicated in a lab.

- Focus on Data Diversity: Actively seek out and use training data that is representative of all potential users, across different races, genders, ages, and types of disability.

- Prioritize User Control and Transparency: Users must have clear, simple controls over their data and a transparent understanding of how the device works and what information it collects.
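One practical way to act on the data-diversity principle is to report model accuracy per user group instead of as a single overall number, since an aggregate score can hide poor performance for an under-represented group. The sketch below assumes evaluation results are available as (group label, was the prediction correct) pairs; the function names and that data shape are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_group(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute accuracy separately for each group label.

    records: (group_label, prediction_was_correct) pairs from an evaluation run.
    """
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, was_correct in records:
        totals[group] += 1
        if was_correct:
            correct[group] += 1
    return {group: correct[group] / totals[group] for group in totals}


def accuracy_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())
```

A large gap is a signal to collect more representative training data or to re-test with users from the affected group before release.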

The Next Wave of Innovation

Looking ahead, the fusion of AI with other emerging technologies promises even more profound advancements. The latest AI Research / Prototypes News offers a glimpse into this future. We can anticipate breakthroughs in areas like Neural Interfaces News, where brain-computer interfaces could allow users to control devices with their thoughts. We will see more sophisticated AI Personal Robots that can provide complex physical and social assistance. The insights from AI Monitoring Devices News will lead to predictive health alerts, while developments in AI in Sports Gadgets News will create more adaptive and inclusive recreational opportunities. The ultimate goal is to create a world where technology is so seamlessly integrated and personalized that the concept of a “disability” becomes increasingly irrelevant.

Conclusion

Artificial Intelligence is fundamentally reshaping the landscape of accessibility, catalyzing a move from static aids to intelligent, proactive partners. By leveraging the power of computer vision, natural language processing, and machine learning, developers are creating tools that offer unprecedented levels of independence, connection, and opportunity. From smart glasses that read the world aloud to AI assistants that manage a connected home, these innovations are breaking down long-standing barriers. However, to fully realize this promise, the industry must proceed with a deep commitment to ethical principles, prioritizing inclusive design, user privacy, and equitable access. The future is not just about creating smarter devices; it’s about using that intelligence to build a more accessible and equitable world for everyone.