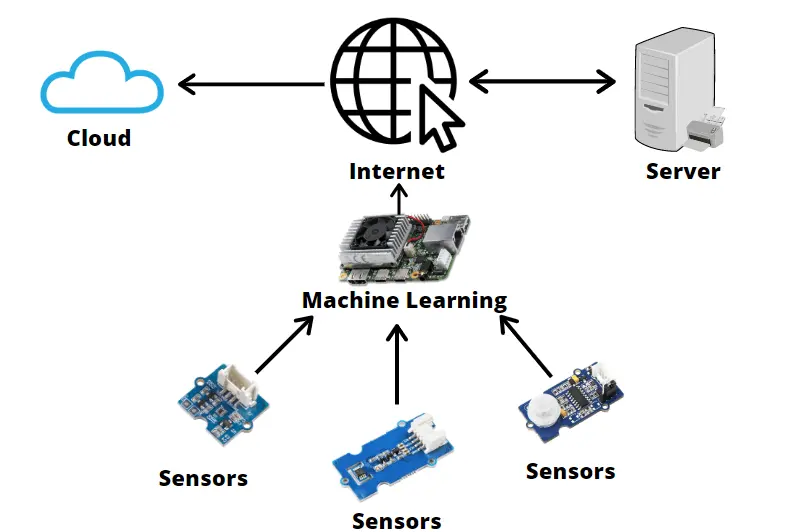

The relentless march of artificial intelligence from the cloud to the edge is one of the most significant technological shifts of our time. From the smartphone in your pocket to the autonomous vehicle on the horizon, devices are becoming increasingly intelligent, processing vast amounts of data locally to make decisions in real-time. This explosion in on-device processing has created a new and critical bottleneck: memory. As AI models become more complex, the demand for high-bandwidth, low-power memory solutions has skyrocketed, pushing traditional architectures to their limits. The latest AI Edge Devices News reveals a paradigm shift is underway, moving beyond conventional memory to embrace innovative, customized 3D-stacked solutions that promise to redefine the performance and capabilities of the next generation of smart devices.

For years, the conversation around high-performance memory has been dominated by High Bandwidth Memory (HBM), the powerhouse behind data center GPUs and AI accelerators. However, HBM’s high cost, power consumption, and thermal complexity make it unsuitable for the vast majority of resource-constrained edge applications. This has created a critical gap in the market—a need for a solution that offers a significant leap in performance over standard mobile DRAM (like LPDDR5) without the prohibitive overhead of HBM. Now, leading memory manufacturers are answering the call with a new class of customized memory that directly integrates with the processor, heralding a new era for everything from AI-enabled Cameras & Vision News to advanced Robotics News.

The Memory Conundrum: Why Edge AI Demands a New Approach

At the heart of every edge AI device is a System-on-Chip (SoC) that must perform complex calculations with extreme efficiency. The challenge lies in feeding the processing cores with data fast enough to keep them from starving. This is often referred to as the “memory wall”—a scenario where the processor is capable of performing calculations much faster than the memory system can supply the necessary data. In the context of edge AI, this problem is exacerbated by three key constraints: power, space, and cost.
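
The memory wall can be made concrete with a roofline-style estimate, in which throughput is capped either by compute or by how fast memory can deliver data. The sketch below is illustrative only: the function names and the hardware figures (a 10 TOPS accelerator fed by 50 GB/s of memory bandwidth) are assumptions chosen for round numbers, not values for any specific SoC.

```python
def attainable_ops_per_s(peak_ops_per_s, bandwidth_bytes_per_s, ops_per_byte):
    """Roofline bound: throughput is capped by compute or by memory traffic."""
    return min(peak_ops_per_s, bandwidth_bytes_per_s * ops_per_byte)


def ridge_point(peak_ops_per_s, bandwidth_bytes_per_s):
    """Ops per byte a workload needs before compute, not memory, is the limit."""
    return peak_ops_per_s / bandwidth_bytes_per_s


# Hypothetical hardware figures, for illustration only.
PEAK_OPS = 10e12   # 10 TOPS of compute
BANDWIDTH = 50e9   # 50 GB/s of memory bandwidth

if __name__ == "__main__":
    print(f"ridge point: {ridge_point(PEAK_OPS, BANDWIDTH):.0f} ops/byte")
    for intensity in (10, 200, 1000):  # ops performed per byte fetched
        bound = attainable_ops_per_s(PEAK_OPS, BANDWIDTH, intensity)
        limit = "memory" if bound < PEAK_OPS else "compute"
        print(f"{intensity:>5} ops/byte -> {bound / 1e12:.2f} TOPS ({limit}-bound)")
```

Under these assumed figures, a workload that performs 10 operations per byte fetched reaches only 0.5 TOPS, or 5% of peak. Raising memory bandwidth lifts that ceiling directly, which is the case for the architectures discussed below.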

Limitations of Current Memory Technologies

To understand the significance of the new memory architectures, it’s essential to look at the trade-offs of existing solutions:

- LPDDR (Low-Power Double Data Rate): The current standard for most mobile and edge devices, LPDDR offers a good balance of performance, power, and cost. However, as AI workloads intensify, even the latest LPDDR5/5X standards can struggle to provide the sheer bandwidth required for real-time processing of high-resolution video streams or complex sensor fusion data. The physical distance between the SoC and separate DRAM chips on the circuit board introduces latency and increases power consumption for data transfer.

- SRAM (Static RAM): Integrated directly onto the processor die, SRAM is incredibly fast and offers very low latency. It’s used for CPU caches and other critical on-chip memory. The downside is low storage density: a typical SRAM cell uses six transistors per bit, whereas a DRAM cell uses one transistor and one capacitor, so SRAM is far more expensive and area-intensive per bit. It’s simply not feasible to build the gigabytes of memory required for modern AI applications using only SRAM.

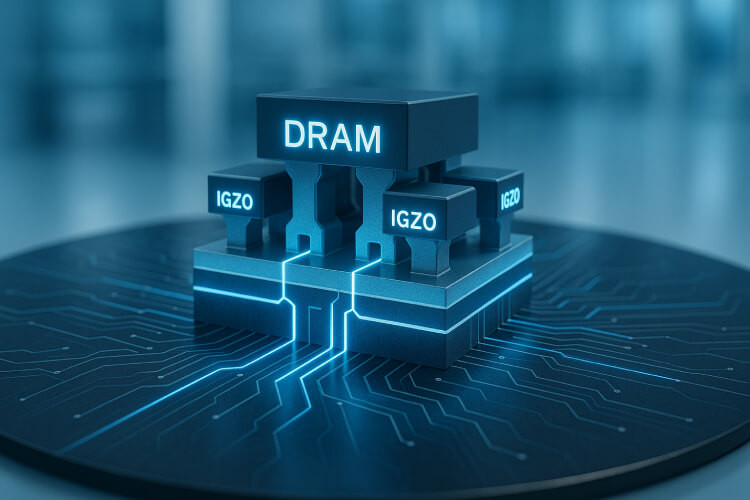

- HBM (High Bandwidth Memory): HBM solves the bandwidth problem by stacking multiple DRAM dies vertically and connecting them to the processor through a wide interface on a silicon interposer. This provides enormous bandwidth but comes at a significant cost in terms of manufacturing complexity, power draw, and thermal management, making it overkill and impractical for most edge devices.

This leaves a massive gap for applications that need more bandwidth than LPDDR can offer but cannot justify the cost and power of HBM. This is precisely the gap that new, customized 3D-stacked DRAM solutions are designed to fill, impacting everything from AI Phone & Mobile Devices News to the latest in Autonomous Vehicles News.

A New Architecture Emerges: Customized 3D-Stacked DRAM

In response to the market’s needs, a new category of memory is gaining traction, often referred to as “customized ultra-bandwidth memory” or simply “near-memory” solutions. These architectures borrow principles from HBM—namely, stacking and tight integration—but are purpose-built and scaled for the unique constraints of the edge. Instead of a one-size-fits-all approach, these solutions offer a tailored balance of performance, power, and form factor.

Core Principles of the New Architecture

These innovative memory solutions are built on the concept of shortening the distance data must travel between the memory and the processor. By stacking DRAM dies directly on top of or adjacent to the logic die (the SoC) within a single package, they achieve several key advantages:

- Extreme Bandwidth: By replacing long traces on a printed circuit board with microscopic vertical connections (like Through-Silicon Vias or TSVs), the data interface can be made much wider. This allows for a massive increase in parallel data transfer, resulting in bandwidth that can be several times higher than the best LPDDR solutions, often reaching into the hundreds of gigabytes per second.

- Unprecedented Power Efficiency: The energy required to drive a signal grows with the capacitance of the wire carrying it, which scales roughly with the wire’s length. By reducing the data path from centimeters on a board to micrometers within a package, the energy spent on each bit transferred drops substantially. This is a game-changer for battery-powered devices, a key topic in Wearables News and AI Companion Devices News.

- Compact Form Factor: Stacking components vertically saves precious board space. This integration is critical for creating smaller, more powerful devices, from the next-generation eyewear covered in Smart Glasses News to the discreet devices featured in AI Security Gadgets News.

- Tailored Customization: Unlike standardized memory modules, these solutions are often co-designed with the SoC. This allows device manufacturers to specify the exact memory capacity, bandwidth, and latency characteristics required for their specific application, optimizing for performance and cost.
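
The bandwidth point above follows from simple arithmetic: peak interface bandwidth is the bus width multiplied by the transfer rate. The sketch below compares a 64-bit LPDDR5X interface at 8533 MT/s (the 64-bit width is an assumed configuration) with a purely hypothetical 1024-bit in-package interface running at a much lower 2000 MT/s. Neither figure describes a specific product.

```python
def peak_bandwidth_gb_s(bus_width_bits, transfers_per_s):
    """Peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * transfers_per_s / 1e9


if __name__ == "__main__":
    # Assumed configuration: 64-bit LPDDR5X bus at 8533 MT/s.
    lpddr = peak_bandwidth_gb_s(64, 8533e6)
    # Hypothetical wide in-package interface: 1024 bits at 2000 MT/s.
    stacked = peak_bandwidth_gb_s(1024, 2000e6)
    print(f"narrow, fast bus: {lpddr:.1f} GB/s")
    print(f"wide, slow bus:   {stacked:.1f} GB/s")
    print(f"ratio:            {stacked / lpddr:.1f}x")
```

The design choice this illustrates is that a wide interface can run each pin more slowly and still deliver several times the bandwidth, which is only practical when the connections are short and dense enough to route thousands of signals.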

This approach effectively creates a powerful, efficient, and compact “system-in-package” (SiP) that is highly optimized for AI workloads. It represents a fundamental shift from buying off-the-shelf components to co-designing integrated solutions, a trend highlighted in recent AI Research / Prototypes News.
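
To see why shorter data paths matter for power, interface power can be estimated as energy per bit multiplied by bits moved per second. The per-bit energy values in this sketch are placeholders picked to show the calculation, not measured figures for any memory technology.

```python
def io_power_watts(bandwidth_gb_s, energy_pj_per_bit):
    """Power spent moving data: bits per second times joules per bit."""
    bits_per_s = bandwidth_gb_s * 1e9 * 8
    return bits_per_s * energy_pj_per_bit * 1e-12


if __name__ == "__main__":
    BANDWIDTH_GB_S = 100          # sustained traffic, assumed
    OFF_PACKAGE_PJ_PER_BIT = 4.0  # placeholder value
    IN_PACKAGE_PJ_PER_BIT = 1.0   # placeholder value

    for label, pj in (("off-package", OFF_PACKAGE_PJ_PER_BIT),
                      ("in-package", IN_PACKAGE_PJ_PER_BIT)):
        watts = io_power_watts(BANDWIDTH_GB_S, pj)
        print(f"{label}: {watts:.1f} W at {BANDWIDTH_GB_S} GB/s")
```

Because power scales linearly with energy per bit at a fixed bandwidth, any reduction in per-bit energy carries straight through to the battery budget.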

Real-World Impact: Powering the Next Wave of Intelligent Devices

The transition to these advanced memory architectures is not just an incremental improvement; it’s an enabling technology that will unlock new capabilities across a vast array of edge AI applications. The ability to process more data faster and with less power will have a profound impact on numerous sectors.

Smart Vision and Autonomous Systems

High-resolution cameras and LiDAR sensors generate enormous streams of data that must be processed in real-time. In applications like advanced driver-assistance systems (ADAS), drones, and robotics, low latency is a matter of safety and functionality.

- Case Study: AI-Powered Security Cameras: A modern security camera needs to do more than just record video. With high-bandwidth in-package memory, it can run multiple complex AI models simultaneously, performing object detection, facial recognition, license plate reading, and anomaly detection directly on the device. This reduces reliance on the cloud, lowers latency for alerts, and enhances privacy. This is a recurring theme in AI Monitoring Devices News.

- Application: Robotics and Drones: For a warehouse robot or an agricultural drone, high-bandwidth memory is crucial for sensor fusion: combining data from cameras, LiDAR, and IMUs to build a coherent model of the world and navigate it safely. This is central to the latest Drones & AI News and equally relevant to the specialized devices covered in Robotics Vacuum News.
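
A rough sense of the data volumes involved in the vision workloads above comes from multiplying out resolution, pixel size, and frame rate. The sketch below assumes four 1080p cameras at 30 fps with 1.5 bytes per pixel (as in a YUV 4:2:0 layout) and assumes each frame crosses memory six times on its way through the pipeline. Both the camera count and the pass count are illustrative assumptions.

```python
def raw_stream_bytes_per_s(width, height, bytes_per_pixel, fps):
    """Uncompressed data rate of one video stream."""
    return width * height * bytes_per_pixel * fps


def memory_traffic_gb_s(stream_bytes_per_s, num_streams, passes):
    """Each pipeline stage that reads or writes a frame counts as one pass."""
    return stream_bytes_per_s * num_streams * passes / 1e9


if __name__ == "__main__":
    per_camera = raw_stream_bytes_per_s(1920, 1080, 1.5, 30)
    traffic = memory_traffic_gb_s(per_camera, num_streams=4, passes=6)
    print(f"one camera:   {per_camera / 1e6:.1f} MB/s raw")
    print(f"four cameras: {traffic:.2f} GB/s of memory traffic")
```

This counts only the movement of raw frames. Neural network weights and intermediate activations add to the total, and those are typically where the larger share of bandwidth goes.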

Personal Computing and Wearable Technology

For devices we wear or carry, power efficiency and form factor are paramount. This new memory technology allows for unprecedented intelligence in compact packages.

- Scenario: Next-Generation Smart Glasses: Future AR/VR devices will need to perform real-time environment mapping, object recognition, and gesture tracking to create immersive experiences. High-bandwidth, low-power memory is essential to perform these tasks on-device without draining the battery in minutes or requiring a bulky design, a hot topic in AR/VR AI Gadgets News.

- Application: Health and Wellness Gadgets: As covered in Health & BioAI Gadgets News, wearables are evolving from simple trackers to sophisticated health monitors. A device with advanced in-package memory could continuously analyze ECG and PPG signals using a complex neural network to detect arrhythmias or other health issues in real-time, providing immediate feedback. This also applies to the latest in AI Fitness Devices News and AI Sleep / Wellness Gadgets News.

Smart Home, Office, and Entertainment

The intelligence in our living and working spaces is becoming more distributed. From smart speakers to kitchen gadgets, on-device processing enhances responsiveness and privacy.

- Example: Advanced AI Assistants: The latest AI Assistants News points towards more natural and complex on-device conversations. High-bandwidth memory allows a smart speaker or display to run a much larger language model locally, enabling faster response times and the ability to function without a constant internet connection. This is also relevant for AI Audio / Speakers News.

- Innovation in Entertainment: For gaming and creative tools, this technology can enable new possibilities. Imagine AI Toys & Entertainment Gadgets News featuring toys that can see, understand, and react to a child’s play in real-time, or AI Tools for Creators News discussing portable devices that can perform complex video rendering on the fly. This also extends to AI in Gaming Gadgets News, where on-device AI can create more dynamic and responsive non-player characters (NPCs).
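
For the on-device language model case above, a common back-of-the-envelope bound treats text generation as bandwidth-limited: producing each token requires reading every model weight once, so the token rate cannot exceed memory bandwidth divided by model size. The sketch below applies that bound to a hypothetical 3-billion-parameter model stored at 4 bits per weight. It ignores the key-value cache, activations, and compute time, so real throughput would be lower.

```python
def model_bytes(num_params, bits_per_weight):
    """Size of the weights in bytes."""
    return num_params * bits_per_weight / 8


def max_tokens_per_s(bandwidth_gb_s, num_params, bits_per_weight):
    """Upper bound on decode rate when every weight is read once per token."""
    return bandwidth_gb_s * 1e9 / model_bytes(num_params, bits_per_weight)


if __name__ == "__main__":
    PARAMS = 3e9  # hypothetical 3B-parameter model
    BITS = 4      # assumed 4-bit quantized weights
    for bandwidth in (60, 240):  # GB/s, illustrative values
        rate = max_tokens_per_s(bandwidth, PARAMS, BITS)
        print(f"{bandwidth:>4} GB/s -> at most {rate:.0f} tokens/s")
```

Under this simplified model the token rate scales linearly with bandwidth, which is why memory, more than raw compute, tends to set the ceiling for local assistants.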

Navigating the New Memory Landscape: Pros, Cons, and Recommendations

While the potential of customized 3D-stacked DRAM is immense, adopting this new technology requires careful consideration. It represents a significant departure from traditional design methodologies, and engineers must weigh the benefits against the challenges.

Advantages vs. Disadvantages

Pros:

- Massive Performance Uplift: The leap in bandwidth and reduction in latency directly translate to higher AI model performance and the ability to run more complex algorithms.

- Superior Power Efficiency: The energy savings are substantial, enabling longer battery life or more powerful processing within the same thermal envelope.

- Reduced Form Factor: Integrating memory and logic into a single package saves significant space, allowing for smaller and sleeker device designs.

Cons:

- Higher Initial Cost: The advanced packaging and co-design process make these solutions more expensive than off-the-shelf LPDDR components, at least initially.

- Thermal Management Challenges: Stacking active silicon dies on top of each other concentrates heat. This necessitates more sophisticated thermal design and heat dissipation strategies.

- Design and Supply Chain Complexity: This is not a drop-in replacement. It requires deep collaboration between SoC designers, memory manufacturers, and packaging houses, potentially leading to vendor lock-in.

Tips and Best Practices for Implementation

For product designers and engineers looking to leverage this technology, a strategic approach is key:

- Identify the Bottleneck: Before committing to a costly new architecture, thoroughly analyze your application. Is memory bandwidth truly the limiting factor? For many simpler IoT devices covered in AI Sensors & IoT News, a high-end LPDDR solution may still be sufficient.

- Engage in Early-Stage Co-Design: The choice of memory is no longer a late-stage decision. It must be a foundational part of the SoC architecture. Engage with memory partners early in the design cycle to define requirements and explore custom solutions.

- Prioritize Thermal Modeling: From day one, invest in advanced thermal simulation and modeling. The performance gains from 3D stacking can be completely negated if the chip is forced to throttle due to excessive heat.

- Consider the Entire System Cost: While the component cost may be higher, evaluate the total cost of ownership. A smaller PCB, a simpler power delivery network, or the ability to eliminate other components might offset the initial investment.
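
Before investing in detailed simulation, the thermal tip above can be sanity-checked with the standard first-order steady-state estimate: junction temperature equals ambient temperature plus power multiplied by the junction-to-ambient thermal resistance. The resistance, temperature limit, and power values in this sketch are hypothetical and would come from the package vendor and the enclosure design in practice.

```python
def junction_temp_c(ambient_c, power_w, theta_ja_c_per_w):
    """Steady-state junction temperature for a given dissipated power."""
    return ambient_c + power_w * theta_ja_c_per_w


def max_sustained_power_w(ambient_c, t_junction_max_c, theta_ja_c_per_w):
    """Highest power that keeps the junction at or below its limit."""
    return (t_junction_max_c - ambient_c) / theta_ja_c_per_w


if __name__ == "__main__":
    AMBIENT_C = 35.0   # assumed air temperature inside the enclosure
    T_MAX_C = 85.0     # assumed junction limit for the stacked package
    THETA_JA = 20.0    # hypothetical thermal resistance, degrees C per watt

    budget = max_sustained_power_w(AMBIENT_C, T_MAX_C, THETA_JA)
    print(f"sustainable power: {budget:.1f} W")
    for power in (2.0, 3.0):
        temp = junction_temp_c(AMBIENT_C, power, THETA_JA)
        status = "ok" if temp <= T_MAX_C else "throttles"
        print(f"{power:.1f} W -> {temp:.0f} C ({status})")
```

A single thermal resistance cannot capture hot spots inside a die stack, so this only indicates whether a design is clearly inside or outside its budget. It does not replace the simulation work the tip recommends.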

Conclusion: A New Foundation for Edge Intelligence

The emergence of customized 3D-stacked DRAM solutions marks a pivotal moment in the evolution of edge AI. It signals a move away from standardized, general-purpose components toward highly integrated, application-specific systems. This memory revolution is breaking down the “memory wall,” directly addressing the critical bottlenecks of bandwidth, power, and size that have constrained the development of truly intelligent devices. By providing a “just right” solution between LPDDR and HBM, this technology will serve as the foundation for the next generation of AI-powered products.

From smarter cameras and more autonomous robots to truly personal AI companions and wearables, the impact will be felt across every industry. As this technology matures and becomes more accessible, it will accelerate the distribution of intelligence, making our devices more capable, responsive, and seamlessly integrated into our lives. The key takeaway for developers, engineers, and tech enthusiasts is clear: the future of AI at the edge is not just about faster processors; it’s about smarter, more integrated memory.