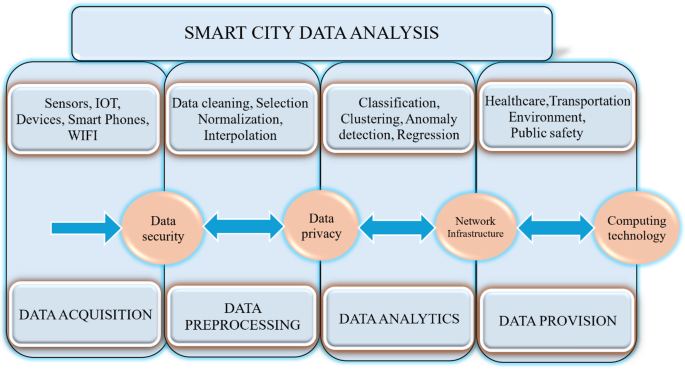

I have a love-hate relationship with the concept of “smart cities.” On paper, it’s brilliant. We cover everything in sensors—LiDAR on light poles, cameras at intersections, environmental sniffers on park benches—and suddenly we have a digital twin of the urban environment. Traffic flows better, energy is saved, and we all live in a utopia of efficiency.

In reality? It’s a maintenance nightmare.

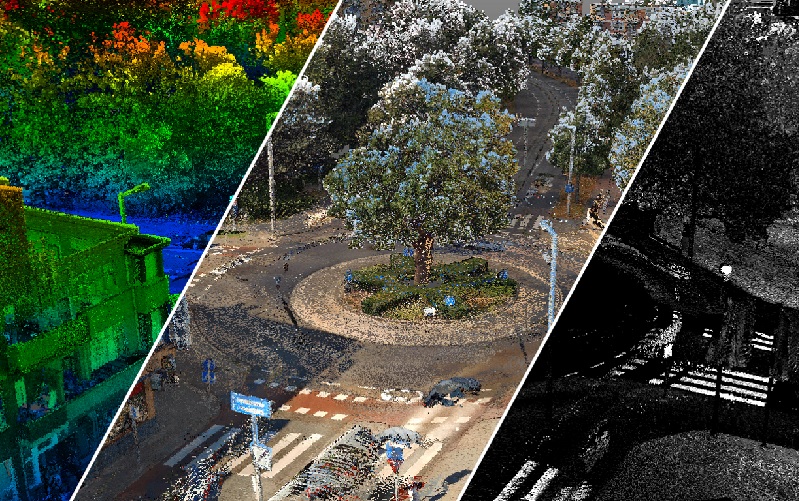

If you’ve ever tried to keep a network of 3D sensors calibrated in an outdoor environment, you know exactly what I’m talking about. The wind blows, a truck rumbles by, or the sun heats up a metal mounting bracket, and suddenly your precise point cloud is tilted three degrees to the left. Your “smart” intersection now thinks a pedestrian is standing in the middle of traffic when they’re actually safely on the sidewalk.

For the last few years, the solution has been painfully analog: send a guy in a bucket truck to manually recalibrate the thing. It’s expensive, it’s slow, and honestly, it’s stupid.

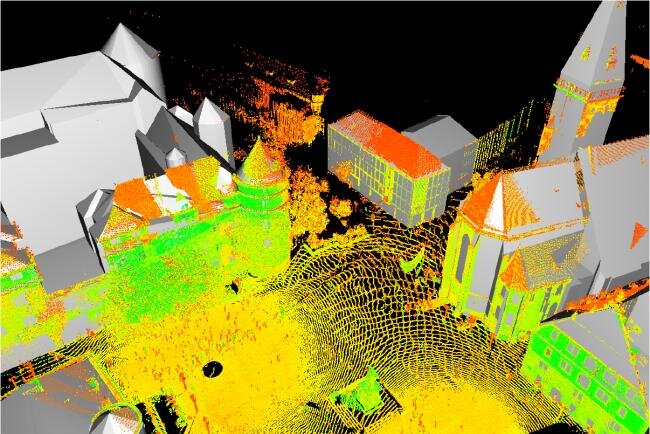

That’s why I’ve been digging through new research, released this week, on a method for automated 3D sensor network monitoring, and for the first time in a while, I’m actually optimistic. It looks like we might finally have a way to automate the health checks of these massive sensor arrays without breaking the bank.

The Drift Problem

Here’s the thing about 3D sensors, specifically LiDAR and depth cameras: they are incredibly sensitive to extrinsic calibration errors. That’s just a fancy way of saying the system is wrong about where the sensor is positioned and which way it’s pointing relative to the world.

In a controlled factory setting, this is easy. You bolt the sensor down, calibrate it once, and it stays there until the heat death of the universe. But stick that same sensor on a pole overlooking a busy highway? Good luck. Thermal expansion alone can shift the alignment enough to ruin your data fusion algorithms.
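To put a number on how much a small misalignment matters, here’s a rough back-of-the-envelope sketch. It assumes the drift is a pure rotation about the vertical axis and that the pedestrian stands 30 m from the sensor; both figures, and the function names, are mine for illustration and not from the research.

```python
import numpy as np

def rotation_z(deg):
    """3x3 rotation matrix for a rotation of `deg` degrees about the vertical (z) axis."""
    t = np.radians(deg)
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, -s, 0.0],
                     [s,  c, 0.0],
                     [0.0, 0.0, 1.0]])

def apparent_shift(point_sensor, drift_deg):
    """Distance between a point's true location and where a drifted sensor reports it."""
    reported = rotation_z(drift_deg) @ point_sensor
    return float(np.linalg.norm(reported - point_sensor))

# Assumed scenario: pedestrian 30 m straight ahead, bracket rotated by 3 degrees.
pedestrian = np.array([30.0, 0.0, 0.0])
shift_m = apparent_shift(pedestrian, 3.0)
print(f"Apparent shift: {shift_m:.2f} m")
```

Under those assumptions the pedestrian appears roughly a meter and a half from where they actually are, which is more than enough to move someone from the sidewalk into a traffic lane.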

Until now, spotting these errors meant one of three things:

- Scheduling manual checks (expensive).

- Waiting for the data to get so bad that someone complains (risky).

- Placing dedicated calibration targets all over the city (ugly and impractical).

The new method researchers are talking about flips this. Instead of relying on external targets or manual checks, it uses the environment itself and a clever application of deep learning to monitor the network’s integrity.

Using the Chaos as a Calibration Target

The core idea is pretty slick. It treats the chaotic, moving city environment not as noise, but as the signal for calibration.

The system uses a deep learning framework to analyze the geometric relationships between different sensors in the network. If Sensor A sees a bus turn a corner, and Sensor B sees the same bus but shifted by half a meter or rotated slightly, the model flags the discrepancy. It doesn’t just say “error”; it quantifies exactly how the 3D alignment has shifted.
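I don’t have the researchers’ model, so as a stand-in here’s the classical version of the same idea: given matched 3D points on the same object from two sensors, the Kabsch algorithm recovers the rotation and translation between them. This is a sketch of what “quantifying the shift” means, not the deep learning method from the research. The bus dimensions, the 2 degree and 0.5 m drift, and the assumption that point correspondences are already known are all hypothetical.

```python
import numpy as np

def estimate_rigid_transform(points_a, points_b):
    """Least-squares R, t such that points_b ~= points_a @ R.T + t (Kabsch algorithm)."""
    centroid_a = points_a.mean(axis=0)
    centroid_b = points_b.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (points_a - centroid_a).T @ (points_b - centroid_b)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection sneaking in instead of a proper rotation.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = centroid_b - R @ centroid_a
    return R, t

def rotation_angle_deg(R):
    """Total rotation angle encoded by a rotation matrix, in degrees."""
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    return float(np.degrees(np.arccos(cos_theta)))

# Hypothetical bus: the 8 corners of a 12 m x 2.5 m x 3 m box, as seen by Sensor A.
bus_a = np.array([[x, y, z] for x in (0.0, 12.0) for y in (0.0, 2.5) for z in (0.0, 3.0)])

# Simulate Sensor B having drifted: 2 degrees about the vertical axis plus 0.5 m sideways.
theta = np.radians(2.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.5, 0.0, 0.0])
bus_b = bus_a @ R_true.T + t_true

R_est, t_est = estimate_rigid_transform(bus_a, bus_b)
angle_deg = rotation_angle_deg(R_est)
offset_m = float(np.linalg.norm(t_est))
print(f"rotation: {angle_deg:.2f} deg, translation: {offset_m:.2f} m")
```

The hard part in a real city is the step I skipped: figuring out which points in Sensor A’s cloud correspond to which points in Sensor B’s. That is presumably where a learned model earns its keep.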

It’s basically doing continuous, real-time cross-validation. If five sensors agree on the geometry of the intersection and one is an outlier, the system identifies the drift immediately. No bucket trucks required.
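The “five agree, one is an outlier” logic can be sketched in a few lines. This is my own minimal illustration, assuming each sensor reports where it thinks the same object is in a shared world frame; the sensor names, the sample coordinates, and the 0.25 m threshold are made up, and the research may well use something more sophisticated than a median.

```python
import numpy as np

def find_drifted_sensors(estimates, threshold_m=0.25):
    """Flag sensors whose estimate is far from the per-axis median of all estimates.

    estimates: dict mapping sensor id -> (x, y, z) world position of the same object.
    Returns a dict of flagged sensor id -> distance from the consensus, in meters.
    """
    ids = list(estimates)
    positions = np.array([estimates[i] for i in ids], dtype=float)
    # The median is robust: one bad sensor barely moves the consensus.
    consensus = np.median(positions, axis=0)
    residuals = np.linalg.norm(positions - consensus, axis=1)
    return {i: float(r) for i, r in zip(ids, residuals) if r > threshold_m}

# Hypothetical readings: five sensors agree to within a few centimeters, one is half a meter off.
readings = {
    "sensor_1": (10.00, 5.00, 0.0),
    "sensor_2": (10.02, 5.01, 0.0),
    "sensor_3": (9.98, 4.99, 0.0),
    "sensor_4": (10.01, 5.02, 0.0),
    "sensor_5": (9.99, 4.98, 0.0),
    "sensor_6": (10.50, 5.00, 0.0),
}
flagged = find_drifted_sensors(readings)
print(flagged)
```

A median only works while the healthy sensors are in the majority, so this kind of check gets shakier at intersections covered by just two or three units.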

I find this approach fascinating because it solves the scalability issue. You can’t scale manual maintenance to 10,000 sensors. But you can scale a software agent that runs in the background, constantly checking the math.

Why I’m Cautiously Optimistic (But Still Skeptical)

I’ve seen a lot of “self-healing network” claims since 2023 that turned out to be vaporware. Usually, the catch is computational load. Running heavy 3D point cloud analysis requires serious GPU grunt. If this new monitoring method demands a rack of H100s just to tell me a camera is crooked, it’s dead on arrival.

However, the initial specs suggest this is lightweight enough to run on edge devices or at least on a local gateway. That’s the key. If we can push this logic down to the edge, we turn “dumb” sensor networks into systems that actually know when they’re sick.

Another potential snag is dynamic environments. Cities aren’t static. Construction happens. Temporary barriers go up. A snowstorm changes the geometry of the road. I’m curious to see how this model distinguishes between “my sensor has physically moved” and “the world has changed.” The research claims it’s robust against these environmental changes, but I’ll believe it when I see it running in Boston during February.

The Boring Stuff Is the Most Important

We spend so much time hyping up autonomous taxis and drone deliveries, but we ignore the plumbing. Sensor calibration is the plumbing of the smart city. If the pipes are leaking, it doesn’t matter how fancy the faucet is.

This development feels different because it addresses the operational expenditure (OpEx) that kills these projects. I’ve consulted on municipal IoT rollouts where the hardware budget was approved instantly, but the maintenance budget was slashed. Two years later, half the sensors are offline or spewing garbage data because nobody has the cash to recalibrate them.

If we can automate the monitoring process using this kind of AI-driven geometric analysis, we stop bleeding money on maintenance. That means the sensors stay accurate longer, the data remains trustworthy, and maybe—just maybe—the smart city actually works.

I’m going to keep an eye on this. If the code drops or we see a commercial implementation later this year, I’ll be the first to test it. Until then, check your mounting brackets.