The landscape of digital content creation is undergoing a seismic shift, moving rapidly from an era of manual editing to one of generative synthesis. For years, the barrier to entry for high-quality video production was defined by expensive hardware, complex software, and steep learning curves. However, the latest developments covered in AI Tools for Creators News point to a fundamental democratization of these capabilities. With advanced generative models such as Google’s Veo now integrated into mainstream platforms like YouTube, the power to conjure cinema-quality visuals from simple text prompts is resting in the pockets of millions of users.

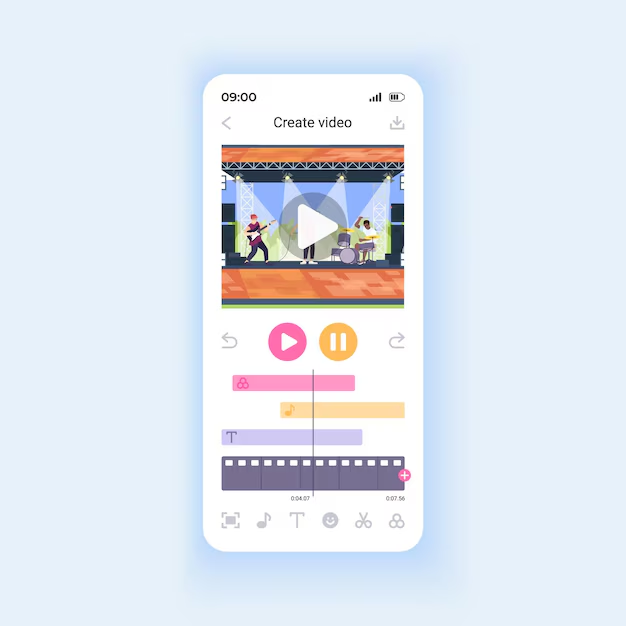

This transition is not merely about adding filters or automated captions; it is about the complete synthesis of video and audio content. Creators can now generate six-second high-definition clips for Shorts, complete with ambient sound, solely through natural language descriptions. This capability fundamentally alters the workflow of the creator economy, bridging the gap between imagination and execution. As we explore this technological leap, we must also consider the broader ecosystem of hardware and software—from AI Phone & Mobile Devices News to Smart Glasses News—that supports this new wave of creativity.

Section 1: The Veo Integration and the Mechanics of Generative Video

The core of this recent advancement lies in the deployment of Veo, a state-of-the-art generative video model, directly into mobile applications. Earlier video AI struggled with temporal consistency and resolution. Veo represents a significant leap forward in modeling realistic physics, lighting, and cinematic camera movement.

From Text to Pixels: How It Works

The integration lets users work through a YouTube feature called “Dream Screen.” A user types a text prompt, such as “a cinematic drone shot of a cyberpunk city in rain,” and the model interprets the prompt’s meaning and generates a matching video clip. Under the hood, models of this kind are typically built on diffusion. Generation starts from random noise (visual “static”), and the model removes that noise step by step, guided by the prompt, until coherent frames emerge. Video diffusion models generally denoise the frames of a clip together rather than one at a time. This helps maintain temporal stability, so objects don’t morph or flicker unnaturally from frame to frame. This is a major development for those following AI Research / Prototypes News, as it marks the transition of research-grade technology into consumer-grade utility.
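The denoising loop described above can be made concrete with a minimal toy sketch of deterministic DDIM-style sampling. It is not Veo’s architecture. The trained, prompt-conditioned network is replaced by a hypothetical `oracle_noise` function that already knows the target clip. The linear beta schedule (1e-4 to 0.02 over 1,000 steps) is the textbook DDPM choice, assumed here for illustration. The sketch shows only the shape of the process: start from Gaussian noise, estimate the clean signal, and step down to lower noise levels.

```python
import math
import random


def linear_schedule(steps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Cumulative signal fractions alpha_bar_t for a linear DDPM-style beta schedule (assumed)."""
    alpha_bars, prod = [], 1.0
    for i in range(steps):
        beta = beta_start + (beta_end - beta_start) * i / (steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def oracle_noise(x_t: list[float], alpha_bar: float, target: list[float]) -> list[float]:
    """Hypothetical stand-in for the trained network: it recovers the noise exactly
    because it knows the target. A real model estimates this from x_t, the step and the prompt."""
    return [(x - math.sqrt(alpha_bar) * x0) / math.sqrt(1.0 - alpha_bar) for x, x0 in zip(x_t, target)]


def ddim_sample(target: list[float], steps: int = 1000, seed: int = 0) -> list[float]:
    """Deterministic DDIM loop (eta = 0): start from Gaussian noise and remove it step by step."""
    alpha_bars = linear_schedule(steps)
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise: the "random static"
    for t in reversed(range(steps)):
        a_t = alpha_bars[t]
        a_prev = alpha_bars[t - 1] if t > 0 else 1.0
        eps = oracle_noise(x, a_t, target)
        # Estimate the clean signal, then re-noise it to the lower noise level of step t - 1.
        x0_hat = [(xi - math.sqrt(1.0 - a_t) * ei) / math.sqrt(a_t) for xi, ei in zip(x, eps)]
        x = [math.sqrt(a_prev) * x0 + math.sqrt(1.0 - a_prev) * ei for x0, ei in zip(x0_hat, eps)]
    return x


# A toy "clip" of 3 frames x 2 pixels, flattened; neighbouring frames differ only slightly.
clip = [0.20, 0.50, 0.22, 0.52, 0.24, 0.54]
print([round(v, 3) for v in ddim_sample(clip)])
```

Because the oracle is exact, the loop recovers the target clip. In a real model, output quality depends entirely on how well the network predicts the noise at each step.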

Audio Synthesis and Multimodal Generation

Visuals are only half the equation. The latest tools do not generate silent movies; they synthesize audio alongside the video. If the clip depicts a roaring lion or a bustling cafe, the model generates a matching soundscape. This multimodal approach reduces reliance on stock audio libraries and is designed to keep the sound aligned with the generated visual cues. For creators, it removes the friction of sourcing separate audio tracks, streamlining the production of short-form content.

The Mobile-First Ecosystem

The decision to deploy these tools on mobile platforms first is strategic: it acknowledges that the modern creator’s studio is their smartphone. The shift is also shaping AI Phone & Mobile Devices News, as manufacturers race to include dedicated Neural Processing Units (NPUs) that run AI tasks on the device itself. Heavyweight generation with models like Veo, however, still happens in the cloud. The smartphone is no longer just a camera; it is a director, editor, and VFX studio combined.

Section 2: The Hardware Ecosystem Supporting AI Creators

While software like Veo grabs the headlines, the generative revolution is deeply intertwined with physical hardware. A creator’s workflow is supported by a web of connected devices, and staying abreast of gadget news is essential for leveraging these AI tools effectively.

Capture and Augmentation: Cameras and Drones

Generative AI often works best when augmenting real footage. Creators following AI-enabled Cameras & Vision News are finding cameras that capture depth data. This depth data lets AI insert generated backgrounds behind real subjects more accurately. Similarly, Drones & AI News highlights how autonomous aerial vehicles can capture complex tracking shots that are then enhanced or stylistically altered by generative models like Veo. Pairing a physical drone shot with an AI style transfer creates a hybrid aesthetic that is becoming increasingly popular.
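Here is a minimal sketch of depth-based compositing. It assumes the camera supplies a per-pixel depth map in meters, aligned with the RGB frame. The `near_m` and `far_m` thresholds and the linear ramp between them are illustrative choices, not any camera vendor’s method. Pixels nearer than `near_m` are treated as the real subject. Pixels beyond `far_m` are replaced by the generated background, and the band in between is blended so the subject does not get a hard cut-out edge.

```python
Pixel = tuple[float, float, float]  # RGB, each channel in [0, 1]


def subject_weight(depth_m: float, near_m: float, far_m: float) -> float:
    """1.0 for pixels nearer than near_m (the subject), 0.0 beyond far_m, linear ramp in between."""
    if depth_m <= near_m:
        return 1.0
    if depth_m >= far_m:
        return 0.0
    return (far_m - depth_m) / (far_m - near_m)


def composite_by_depth(real: list[list[Pixel]], generated: list[list[Pixel]],
                       depth: list[list[float]], near_m: float, far_m: float) -> list[list[Pixel]]:
    """Blend a captured frame over a generated background using a per-pixel depth map."""
    if far_m <= near_m:
        raise ValueError("far_m must be greater than near_m")
    if not (len(real) == len(generated) == len(depth)):
        raise ValueError("frames and depth map must have the same number of rows")
    out = []
    for r_row, g_row, d_row in zip(real, generated, depth):
        if not (len(r_row) == len(g_row) == len(d_row)):
            raise ValueError("rows must have the same width")
        row = []
        for r_px, g_px, d in zip(r_row, g_row, d_row):
            w = subject_weight(d, near_m, far_m)
            row.append(tuple(w * rc + (1.0 - w) * gc for rc, gc in zip(r_px, g_px)))
        out.append(row)
    return out


# One row of three pixels: a red "real" subject in front of a blue generated background.
real = [[(1.0, 0.0, 0.0)] * 3]
generated = [[(0.0, 0.0, 1.0)] * 3]
depth = [[1.0, 2.0, 3.0]]
print(composite_by_depth(real, generated, depth, near_m=1.5, far_m=2.5))
# [[(1.0, 0.0, 0.0), (0.5, 0.0, 0.5), (0.0, 0.0, 1.0)]]
```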

The Smart Studio Environment

For creators filming at home, the environment is increasingly controlled by intelligent systems, which is why Smart Home AI News and AI Lighting Gadgets News matter here. Imagine a setup where your smart lights automatically adjust their color temperature to match the “golden hour” aesthetic of an AI-generated background you intend to use. Meanwhile, AI Audio / Speakers News points to smart speakers that could double as monitoring devices, analyzing room acoustics to suggest the best microphone placement for voiceovers.
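Matching the lights to a generated background can be sketched by estimating the correlated color temperature (CCT) of the frame with McCamy’s approximation. That approximation is only meaningful for near-white colors. The light-control side is hypothetical: `light_setting_kelvin` simply clamps the estimate to an assumed bulb range of 2,700 to 6,500 K and returns it. No real smart-light API is assumed.

```python
def srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer curve for one channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def estimate_cct(rgb: tuple[float, float, float]) -> float:
    """Approximate correlated color temperature (kelvin) of an sRGB color via McCamy's formula."""
    r, g, b = (srgb_to_linear(c) for c in rgb)
    # Linear sRGB -> CIE XYZ (D65 reference white)
    big_x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    big_y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    big_z = 0.0193 * r + 0.1192 * g + 0.9505 * b
    total = big_x + big_y + big_z
    if total == 0:
        raise ValueError("black has no defined color temperature")
    x, y = big_x / total, big_y / total  # CIE xy chromaticity
    n = (x - 0.3320) / (0.1858 - y)
    return 449 * n**3 + 3525 * n**2 + 6823.3 * n + 5520.33


def average_color(pixels) -> tuple[float, ...]:
    """Mean sRGB color of a frame (crude: averages gamma-encoded values)."""
    pixels = list(pixels)
    return tuple(sum(p[i] for p in pixels) / len(pixels) for i in range(3))


def light_setting_kelvin(frame_pixels, min_k: int = 2700, max_k: int = 6500) -> int:
    """Clamp the frame's estimated CCT to a hypothetical bulb's supported range."""
    cct = estimate_cct(average_color(frame_pixels))
    return max(min_k, min(max_k, round(cct)))


warm_frame = [(1.0, 0.8, 0.6)] * 4  # an orange, "golden hour" tint
print(light_setting_kelvin(warm_frame))
```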

Wearables and POV Content

The rise of the devices covered in Smart Glasses News and AR/VR AI Gadgets News introduces a new perspective: point-of-view (POV) content. Creators wearing AI-enabled glasses can capture their daily lives hands-free. Generative tools can then add fantastical elements to these raw feeds, turning a walk in the park into a journey across a Martian landscape. This intersection of AI in Fashion / Wearable Tech News and video generation blurs the line between reality and digital art.

Robotics and Automation in Creation

Even robotics plays a role. AI Personal Robots News and Robotics Vacuum News might seem unrelated, but a clean, organized studio is vital for creators, and automated robots can keep the workspace tidy. More advanced devices of the kind covered in AI Companion Devices News can act as robotic camera operators. They use computer vision to track the creator around the room, keeping them in frame before the footage is sent to the generative editor.
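The camera-operator behavior can be approximated with a simple proportional controller. This sketch assumes some vision model already returns the horizontal center of the creator’s bounding box in pixels. The gain, dead zone, and step limit are hypothetical tuning values, and positive pan is assumed to turn the camera to the right.

```python
def pan_step(subject_center_x: float, frame_width: float, pan_deg: float,
             gain_deg: float = 10.0, dead_zone: float = 0.05, max_step_deg: float = 5.0) -> float:
    """One proportional-control update that turns a pan motor toward a tracked subject."""
    if frame_width <= 0:
        raise ValueError("frame_width must be positive")
    error = subject_center_x / frame_width - 0.5  # -0.5 (far left) .. +0.5 (far right)
    if abs(error) < dead_zone:
        return pan_deg  # close enough to center: avoid jittering the motor
    step = max(-max_step_deg, min(max_step_deg, gain_deg * error))
    return pan_deg + step


# Simulated usage: the creator stands 20 degrees to the right; the camera has a 60-degree field of view.
fov_deg, width, subject_deg, pan = 60.0, 1280, 20.0, 0.0
for _ in range(50):
    x = (0.5 + (subject_deg - pan) / fov_deg) * width  # where the creator appears in the frame
    pan = pan_step(x, width, pan)
print(round(pan, 1))
```

The dead zone is a deliberate design choice. It stops the camera from hunting back and forth when the creator is already roughly centered.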

Section 3: Practical Applications and Workflow Scenarios

Understanding the tech is one thing; applying it to a workflow is another. Here is how different types of creators can leverage these new capabilities, incorporating various niche technologies.

Scenario A: The Travel Vlogger

A travel creator is stuck in a hotel room because of bad weather and cannot film the city. Using Veo-integrated tools, they can generate B-roll of the city under different weather conditions to accompany their narration. They might use itinerary-planning apps of the kind covered in AI for Travel Gadgets News, then visualize that itinerary with generative video to show their audience where they intended to go. This keeps the content schedule from being derailed by the weather.

Scenario B: The Tech Reviewer

A reviewer covering AI Sensors & IoT News or AI Edge Devices News needs to visualize data flow. Instead of hiring an animator, they type “data streams flowing between smart sensors in a futuristic server room” into the generator, and the AI produces a background visual for their talking-head segment. If they are reviewing products from AI Kitchen Gadgets News or Smart Appliances News, they can generate imaginative sequences of what the “kitchen of the future” might look like to contrast with current tech.

Scenario C: The Wellness Influencer

Creators in the health space, covering AI Fitness Devices News or AI Sleep / Wellness Gadgets News, often deal with abstract concepts like “mental clarity” or “REM cycles,” and generative video excels at visualizing the abstract. A prompt like “calm blue waves representing deep sleep cycles” creates an instant visual aid without the need to license stock footage. These creators might also use biometric wearables of the kind covered in Health & BioAI Gadgets News to track their own stress levels while editing, using that biofeedback to plan their work hours.

Scenario D: The Gamer and Streamer

Those following AI in Gaming Gadgets News know that immersion is key. Streamers can use generative tools to create dynamic “starting soon” screens that change every day based on the game they are playing. If they are playing a racing game, they generate a pit-stop loop. If they are discussing AI Toys & Entertainment Gadgets News, they can generate videos of the toys coming to life, adding a layer of storytelling to a standard review.
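A daily rotating “starting soon” prompt could be sketched like this. The genre names and scene descriptions are hypothetical placeholders. The output is only a text prompt that a streamer would paste into a generator; no generation API is called.

```python
import datetime
import hashlib

# Hypothetical prompt bank; genres and wording are illustrative only.
SCENES = {
    "racing": [
        "a pit crew changing tyres under floodlights",
        "rain-slick asphalt reflecting neon start lights",
    ],
    "strategy": [
        "a glowing holographic map with moving army markers",
        "an overhead view of a medieval city at dusk",
    ],
    "default": [
        "soft abstract light trails drifting across a dark background",
    ],
}


def starting_soon_prompt(genre: str, day: datetime.date) -> str:
    """Pick a scene for the game's genre that stays fixed for a day and rotates between days."""
    options = SCENES.get(genre, SCENES["default"])
    # sha256, unlike Python's per-process salted hash(), gives the same choice on every run.
    digest = hashlib.sha256(f"{genre}:{day.isoformat()}".encode("utf-8")).digest()
    scene = options[int.from_bytes(digest[:4], "big") % len(options)]
    return f"{scene}, seamless loop, cinematic lighting"


print(starting_soon_prompt("racing", datetime.date(2025, 1, 1)))
```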

Section 4: Implications, Ethics, and the Future of Work

The introduction of powerful generative tools into free, accessible platforms brings profound implications for the creator economy and society at large.

The Democratization of High-End VFX

Historically, high-quality VFX was the domain of Hollywood. Now, a teenager with a smartphone can produce visuals that once required a professional studio. This levels the playing field but also saturates the market: success will depend less on access to tools and more on the creativity of the idea. Value shifts from technical skill (knowing how to use After Effects) to conceptual skill (knowing how to craft a prompt). This is relevant to AI Education Gadgets News, as curricula will need to shift toward teaching prompt engineering and narrative structure rather than just button-pushing.

The Risk of Homogenization and Deepfakes

With millions of people using the same models, there is a risk of visual homogenization: a distinct “AI look.” Deepfakes are a more serious concern, which makes AI Security Gadgets News increasingly relevant. If a creator can generate a video of a person doing something they never did, verifying what is real becomes difficult. Platforms will need the kind of monitoring protocols discussed in AI Monitoring Devices News, such as digital watermarking and content credentials, to distinguish captured reality from generated fiction.
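Here is a heavily simplified sketch of the content-credential idea. It binds a hash of the video file to a signed manifest, so any change to the bytes or to the manifest is detectable. A shared-secret HMAC is used purely for brevity. Real content-credential systems such as C2PA use public-key signatures and certificates instead. Neither approach is the same as a watermark embedded in the pixels, which is meant to survive re-encoding; a file hash like this one does not.

```python
import hashlib
import hmac
import json


def _canonical(manifest: dict) -> bytes:
    """Serialize the manifest deterministically so signer and verifier hash identical bytes."""
    return json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode("utf-8")


def make_credential(video_bytes: bytes, generator: str, key: bytes) -> dict:
    """Attach a signed manifest recording the file hash and that the clip is AI-generated."""
    manifest = {
        "content_sha256": hashlib.sha256(video_bytes).hexdigest(),
        "generator": generator,
        "ai_generated": True,
    }
    signature = hmac.new(key, _canonical(manifest), hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": signature}


def verify_credential(video_bytes: bytes, credential: dict, key: bytes) -> bool:
    """True only if the manifest is untampered and still matches these exact bytes."""
    expected = hmac.new(key, _canonical(credential["manifest"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, credential["signature"]):
        return False  # manifest altered, or signed with a different key
    return credential["manifest"]["content_sha256"] == hashlib.sha256(video_bytes).hexdigest()


key = b"demo-key-not-for-production"
clip = b"...bytes of a rendered Shorts clip..."
cred = make_credential(clip, "hypothetical-video-model", key)
print(verify_credential(clip, cred, key))         # True
print(verify_credential(clip + b"!", cred, key))  # False
```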

Sustainability and Energy

Generative AI is computationally expensive. As we discuss AI for Energy / Utilities Gadgets News and Smart City / Infrastructure AI Gadgets News, we must acknowledge the data center load required to render millions of AI videos. Future innovations may focus on low-power and edge-computing devices, including those covered in AI for Accessibility Devices News, that reduce the carbon footprint of digital creation.

New Frontiers: Neural Interfaces

Looking further ahead, Neural Interfaces News suggests a future where we won’t even need to type prompts. We might simply think of a scene, and the AI will visualize it. Combined with AI Assistants News, the creative process could become a real-time dialogue between the human brain and the generative engine, bypassing the keyboard entirely.

Conclusion

The integration of Veo and similar advanced models into YouTube and other platforms marks a pivotal moment in AI Tools for Creators News, signaling the transition of generative video from novelty to utility. Creators can now combine these software advancements with a robust hardware ecosystem. That ecosystem ranges from the productivity devices in AI Office Devices News to the vehicles in Autonomous Vehicles News that enable on-the-move capture. Together, they give creators an unprecedented arsenal of tools.

However, the tool is only as good as the artisan. Whether you are covering AI Gardening / Farming Gadgets News or AI in Sports Gadgets News, the fundamental requirement remains the same: a compelling human story. The AI can generate the pixels, but it cannot generate the soul of the content. As we move forward, the most successful creators will be those who view these AI tools not as replacements for creativity, but as powerful amplifiers of their unique human perspective.