I have a box in my closet. It’s full of “AI education” gadgets from the last five years. Robot dogs that don’t walk, smart cameras that aren’t smart, and a dozen development boards that promised to teach neural networks but really just taught me how to copy-paste Python scripts. Most of them are e-waste.

So when the TensorKit X1 dropped last Tuesday, I didn’t rush to buy it. I waited. I watched the forums. Then I saw a thread on the Embedded ML Discord where a user named byte_masher claimed the board’s new “Architectural Oracle” feature actually worked.

That caught my attention.

If you haven’t been following the ed-tech hardware space since late 2025, you probably missed the shift. We aren’t just teaching kids to classify cats and dogs anymore. We’re trying to teach them engineering. And the hardest part of AI engineering isn’t writing code—it’s predicting whether your architecture will actually scale before you waste three days training it.

The Problem with Classroom Compute

Here’s the reality of teaching AI in 2026: students don’t have H100 clusters. They have four-year-old laptops or maybe a shared cloud instance that times out if they go for lunch.

Until now, the workflow was brutal:

- Student designs a tiny network.

- Student trains it for 4 hours.

- Student finds out it’s garbage.

- Repeat until semester ends.

This is where the X1 does something weird. It doesn’t just train. It predicts.

Hands-on with the “Oracle”

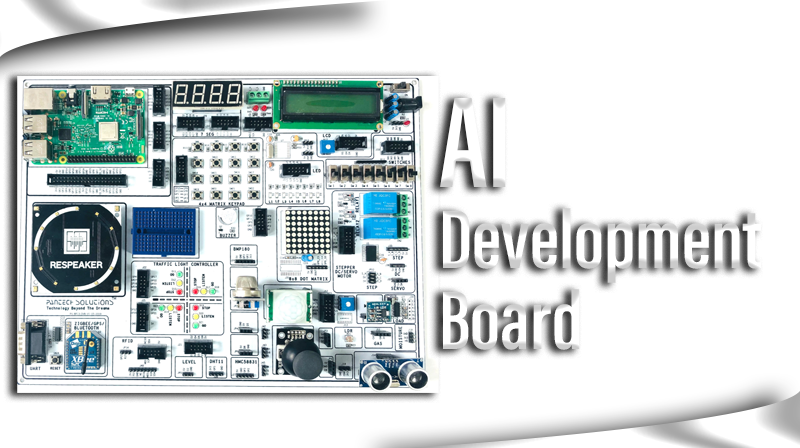

I finally caved and ordered one. It arrived yesterday. The hardware is standard fare for 2026—a custom RISC-V accelerator, 8GB of LPDDR5, and a decent camera sensor. Nothing to write home about.

But the software stack includes a module called tk_predict.

I decided to stress-test it. I fired up VS Code (with the latest Python extension, running Python 3.13) and defined a deliberately messy Convolutional Neural Network. Too many layers, weird stride sizes, vanishing gradients waiting to happen. A classic rookie-mistake architecture.
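To give a sense of what “weird stride sizes” do to a network, here is a minimal sketch in plain Python that traces the feature-map size through a stack of convolutions. The layer list is hypothetical (it is not the exact network from this test), and `conv_out` simply applies the standard output-size formula floor((n + 2p - k) / s) + 1.

```python
def conv_out(size, kernel, stride, padding=0):
    """Output width/height of a square convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(input_size, layers):
    """Return the feature-map size after each (kernel, stride, padding) layer."""
    sizes = []
    size = input_size
    for kernel, stride, padding in layers:
        size = conv_out(size, kernel, stride, padding)
        if size < 1:
            raise ValueError(f"feature map collapsed at layer {len(sizes) + 1}")
        sizes.append(size)
    return sizes


# Hypothetical "rookie" stack for a 32x32 CIFAR-10 image: (kernel, stride, padding)
messy_layers = [(3, 1, 1), (5, 3, 0), (3, 2, 0), (3, 2, 1)]
print(trace_shapes(32, messy_layers))  # [32, 10, 4, 2]
```

By the fourth layer the 32x32 input is down to 2x2, so any deeper layers have almost nothing spatial left to work with.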

Instead of hitting train(), I ran the prediction profiling tool.

The output:

> Analyzing Architecture...

> Est. Accuracy on CIFAR-10: 42.5% (±3%)

> Scaling Warning: Doubling parameters will yield <1% gain.

> Bottleneck detected at Layer 4 (Conv2D).

It took 12 seconds to tell me the model was doomed.

I didn’t believe it. I’m stubborn. So I trained the thing anyway. I let it run overnight on my desktop rig with an RTX 5070. Seven hours later? The validation accuracy plateaued at 44.1%.

The little $80 dev board was right. It predicted the scaling limit of my neural network without ever seeing a gradient update.

Why This Matters (It’s Not About the Gadget)

We’ve known about neural scaling laws for years, at least since OpenAI’s “Scaling Laws for Neural Language Models” paper in 2020. But it’s always been high-level theory for researchers. Bringing this down to a high school or undergrad level is… well, it’s aggressive.

The X1 is using a lightweight proxy model to estimate the “learnability” of a student’s architecture. It’s teaching them the physics of AI, not just the syntax.
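I don’t know how the X1’s proxy model works internally, but the general idea behind scaling-law extrapolation fits in a few lines. The sketch below fits a power law, error = a * N^(-b), to a handful of (parameter count, validation error) points using least squares in log-log space, then estimates what doubling the parameters would buy. The data points are made up for illustration, and `fit_power_law` and `predict_error` are my own helpers, not part of tk_predict.

```python
import math


def fit_power_law(params, errors):
    """Least-squares fit of errors ~ a * params**(-b) in log-log space."""
    xs = [math.log(p) for p in params]
    ys = [math.log(e) for e in errors]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, b)


def predict_error(a, b, n_params):
    """Evaluate the fitted power law at a given parameter count."""
    return a * n_params ** (-b)


# Illustrative data only: parameter counts and validation error rates
params = [1e5, 2e5, 4e5, 8e5]
errors = [0.60, 0.59, 0.58, 0.57]

a, b = fit_power_law(params, errors)
gain = predict_error(a, b, 8e5) - predict_error(a, b, 1.6e6)
print(f"exponent b = {b:.3f}, predicted gain from doubling = {gain:.3%}")
```

With a curve this flat, doubling the model buys less than one point of error, which is the kind of result a “Scaling Warning” would be flagging.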

I tried a second test. I took a standard MobileNetV4 architecture (the nano variant) and stripped out half the depthwise separable convolutions to see if the tool would catch the capacity drop.

Result: It predicted a 12% drop in accuracy. The actual drop after training was 14.5%.
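For the skeptics, the arithmetic on both tests is easy to check. This sketch uses only the numbers reported above. The `within_band` helper is mine, and treating the ±3% as percentage points is an assumption about how to read the tool’s output.

```python
def within_band(predicted, actual, tolerance):
    """True if the measured value falls inside predicted +/- tolerance."""
    return abs(actual - predicted) <= tolerance


# Test 1: messy CNN on CIFAR-10 (accuracy, in percent)
cnn_error = 44.1 - 42.5
print(f"CNN: off by {cnn_error:.1f} points, inside +/-3 band: {within_band(42.5, 44.1, 3.0)}")

# Test 2: stripped-down MobileNetV4 (accuracy drop, in percent)
drop_error = 14.5 - 12.0
print(f"MobileNet: predicted drop off by {drop_error:.1f} points")
```

So the CNN estimate landed inside its own stated error band, and the MobileNet estimate missed the measured drop by 2.5 points.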

Is it perfect? No. When I tried a transformer-based vision model, the prediction was way off—it underestimated the latency by about 30ms. The documentation admits that attention mechanisms are still “experimental” in the profiler. Typical.

The Hardware Itself

I should probably talk about the board, though honestly, the plastic case feels cheap. It’s got that “3D printed prototype” texture that screams startup running out of cash.

- Processor: Dual-core RISC-V @ 2.4GHz

- NPU: 6 TOPS (Int8)

- IO: USB-C, MIPI CSI, 40-pin GPIO (mostly Pi compatible)

I hooked it up to a battery pack and ran a pre-trained gesture recognition demo. It idled at 1.2W and peaked at 4.5W. Efficient, sure, but the heat sink gets uncomfortably hot. If you’re handing this to a 14-year-old, maybe tell them not to touch the metal bit.
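If you want a rough idea of battery life from those two power figures, here is a back-of-the-envelope sketch. The 1.2W and 4.5W values are the ones I measured; the 37 Wh battery capacity and the duty cycles are assumptions for illustration, and the estimate ignores conversion losses.

```python
def average_power(idle_w, peak_w, duty_cycle):
    """Average draw when the board spends `duty_cycle` of its time at peak."""
    return idle_w + (peak_w - idle_w) * duty_cycle


def runtime_hours(battery_wh, avg_power_w):
    """Idealized runtime: usable energy divided by average draw."""
    return battery_wh / avg_power_w


IDLE_W, PEAK_W = 1.2, 4.5  # measured values from the gesture demo
BATTERY_WH = 37.0          # ASSUMPTION: a 10,000 mAh pack at 3.7 V, no losses

for duty in (0.0, 0.5, 1.0):
    avg = average_power(IDLE_W, PEAK_W, duty)
    hours = runtime_hours(BATTERY_WH, avg)
    print(f"{duty:.0%} inference load: {avg:.2f} W, about {hours:.1f} h")
```

Real-world numbers will be lower once you account for the pack’s voltage conversion and whatever else is plugged into the board.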

A Shift in Curriculum?

My friend Sarah teaches CS at a local community college. I showed her the scaling prediction tool over coffee this morning.

“This ruins my grading rubric,” she said, laughing. “I usually grade them on their debugging process when the model fails. If the tool tells them it’s going to fail beforehand, what do I grade?”

She’s joking (mostly), but she has a point. Tools like this shift the focus from “getting code to run” to “designing systems that work.” It forces students to think about parameters, data efficiency, and architectural bottlenecks before they commit compute resources.

It’s the difference between a cook and a chef. One follows a recipe; the other understands why the ingredients react that way.

The Verdict

Is the TensorKit X1 going to replace the Raspberry Pi 6 in classrooms? Probably not. The ecosystem just isn’t there yet. I spent an hour fighting with a driver issue just to get the camera recognized on Ubuntu 24.04.

But the software approach? That tk_predict module? That is the future.

If you’re an educator or just a nerd like me who likes optimizing neural nets on the weekend, it’s worth the price of admission just to play with the scaling predictor. It’s a glimpse into a world where we stop treating AI training like a slot machine and start treating it like engineering.

Just maybe wait for the second batch. The heat sink on this thing is seriously questionable.