The Post-It Note That Broke the Robot

I spent last Tuesday evening trying to outsmart a plastic robot. Specifically, the new OttoMinds V3 that hit shelves in late January. It’s supposed to be the “ultimate homework companion,” using computer vision to read flashcards and help kids with basic arithmetic. It’s cute. It blinks. And it costs way too much.

But I broke it. With a sticker. I printed a carefully crafted noise pattern (just a weird, psychedelic square of static) and taped it to the corner of a flashcard showing the number “3”. The robot paused. Its internal fan spun up. Then, with absolute, unshakeable confidence, it chirped: “That is a banana.”

I laughed. But then I stopped laughing. Because this is the same class of vulnerability that researchers exploited a few years back to make self-driving systems misread road signs. The difference? Now we’re handing the tech to six-year-olds. And honestly? The fact that these educational gadgets are so easily tricked might be the best thing to happen to STEM education in 2026.

The Rise of “Red Team” EdTech

We’ve spent the last five years teaching kids how to build AI. We drag-and-drop blocks in Scratch, we train simple models to recognize cats vs. dogs, and we clap when the machine gets it right. But we’ve been skipping the most critical part of engineering: what happens when it goes wrong.

The “happy path” is boring. Real engineering is about the edge cases. The failures.

Just last week, CircuitMess (a company I usually love for their soldering kits) announced a pivot that caught my eye. Their new lineup isn’t about building a chatbot. It’s about breaking one. They’re calling it “Adversarial Literacy,” which is a fancy way of saying “teaching kids how to hack AI so they understand why security matters.”

Hands-On: Tricking the Neural Net

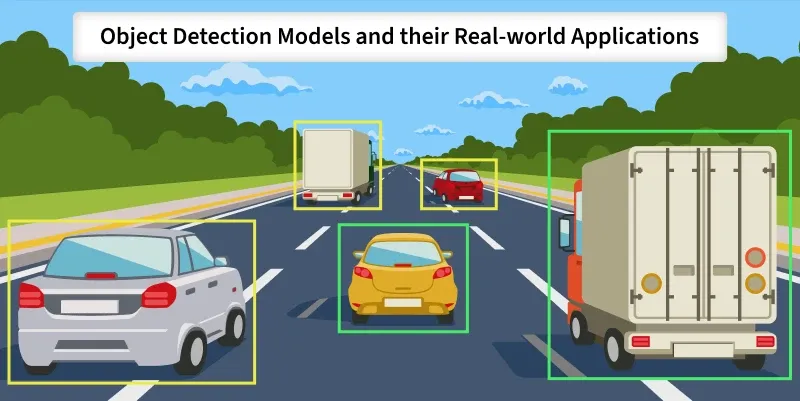

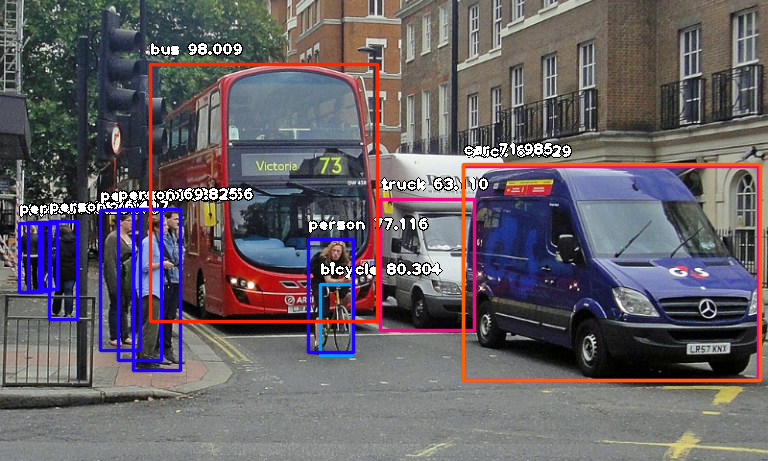

The setup was messy. I had the Raspberry Pi hooked up to a camera module, running a standard MobileNetV2 image classifier. The Python script was simple enough; I ran it in a virtual environment with Python 3.12.1 and OpenCV 4.9.

The kit comes with “adversarial patches”: physical cards that look like modern art accidents. To a human, each one is just noise. But to the convolutional neural network (CNN), it’s a super-stimulus that overrides everything else in the frame.
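The kit’s patches were presumably optimized against a real CNN, which I can’t reproduce here. As a minimal sketch of the general idea, assuming a made-up two-class linear softmax classifier over a 16-pixel image (the weights, labels, and the `optimize_patch` helper are all hypothetical), the code restricts changes to a small corner region and uses projected gradient ascent to push the “toaster” probability up.

```python
import math

# Hypothetical toy setup: a 16-pixel grayscale "image" (a flattened 4x4 grid)
# and a linear softmax classifier with two classes. Real kits use a CNN; these
# weights are invented purely for illustration.
LABELS = ["coffee mug", "toaster"]
PATCH = [12, 13, 14, 15]  # pixel indices the attacker is allowed to change

# Class 0 ("coffee mug") responds to bright pixels in the mug region (0..11).
# Class 1 ("toaster") responds very strongly to an alternating texture in the
# patch region, standing in for a feature the model over-weights.
WEIGHTS = [
    [1.0] * 12 + [0.0] * 4,
    [0.0] * 12 + [8.0, -8.0, 8.0, -8.0],
]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(pixels):
    logits = [sum(w * x for w, x in zip(row, pixels)) for row in WEIGHTS]
    return softmax(logits)


def optimize_patch(pixels, target, steps=20, lr=0.1):
    """Projected gradient ascent on log p(target), touching only PATCH pixels."""
    x = list(pixels)
    for _ in range(steps):
        probs = predict(x)
        for i in PATCH:
            # d log p_t / d x_i = W[t][i] - sum_k p_k * W[k][i] for a linear model
            grad = WEIGHTS[target][i] - sum(p * row[i] for p, row in zip(probs, WEIGHTS))
            # clip to the valid [0, 1] intensity range so the patch stays printable
            x[i] = min(1.0, max(0.0, x[i] + lr * grad))
    return x


mug = [0.8] * 12 + [0.5] * 4  # a mug on a plain grey table
attacked = optimize_patch(mug, target=1)

for name, image in [("control", mug), ("attack", attacked)]:
    probs = predict(image)
    best = max(range(len(probs)), key=probs.__getitem__)
    print(f"{name}: {LABELS[best]} ({probs[best]:.3f})")
# control: coffee mug (1.000)
# attack: toaster (0.998)
```

Only the four patch pixels move, so the mug region is untouched, yet the over-weighted texture is enough to flip the label. That is the “super-stimulus” effect in miniature; physical patch attacks typically also randomize the patch’s position, scale, and rotation during optimization so the printed card keeps working when it is just dropped on a table.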

Here’s the kicker: I didn’t need to touch the code to break it. I just placed the card on the table.

- Control test: I showed the camera a coffee mug. Confidence: 94%. Label: “Coffee Mug”.

- Attack test: I placed the patch next to the mug. Confidence: 98%. Label: “Toaster”.

It was immediate. The label flickered for a frame and then snapped to the wrong class, misidentifying the mug completely. My terminal output went wild:

[INFO] Inference time: 24ms | Class ID: 859 (toaster) | Conf: 0.982
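The “Conf” field in a log line like this is most likely the softmax probability of the top class, which is why a fooled model can report 0.982 while being completely wrong: it measures how strongly the model prefers one label over the others, not whether that label is right. Here is a minimal sketch of how such a line could be produced, assuming a hypothetical `run_model` callable that returns one raw logit per ImageNet class (the kit’s actual script isn’t shown).

```python
import math
import time


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def log_top1(run_model, frame, labels):
    """Run one inference and format it like the log line above.

    `run_model` (hypothetical) returns one raw logit per class;
    `labels` maps class IDs to human-readable names.
    """
    start = time.perf_counter()
    logits = run_model(frame)
    elapsed_ms = (time.perf_counter() - start) * 1000
    probs = softmax(logits)
    class_id = max(range(len(probs)), key=probs.__getitem__)  # top-1 class
    name = labels.get(class_id, "unknown")
    return (f"[INFO] Inference time: {elapsed_ms:.0f}ms | "
            f"Class ID: {class_id} ({name}) | Conf: {probs[class_id]:.3f}")


def fake_model(frame):
    # Stand-in for the real network: 1000 ImageNet-sized logits with
    # class 859 ("toaster") pushed far ahead, as the patch did.
    logits = [0.0] * 1000
    logits[859] = 12.0
    return logits


print(log_top1(fake_model, frame=None, labels={859: "toaster"}))
```

Nothing in the calculation checks the answer against reality; the confidence depends only on how far the winning logit sits above the rest.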

The “Black Box” Problem in Education

Most AI education tools are sealed shut. You feed data in, you get answers out. It’s a black box. But the news coming out of the BETT 2026 show in London suggests the industry is finally waking up. The shiny, perfect demos are out. “Explorable” AI is in.

I spoke to a developer from one of the major STEM brands (off the record, naturally). She told me that their support tickets spiked in Q4 2025 because kids were finding these exploits by accident. They’d wear a shirt with a specific pattern, and the robot would stop tracking their face. Instead of patching it quietly, the company decided to lean in. Their next firmware update, version 2.4.0, actually includes a “Debug Mode” that visualizes what the camera is focusing on.
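I don’t know how firmware 2.4.0’s Debug Mode is implemented. One common, model-agnostic way to show what an image classifier is “looking at” is occlusion sensitivity: grey out one region at a time and measure how much the class score drops. A minimal sketch on a toy 4x4 image, assuming a hypothetical `toaster_score` function that only responds to the bottom-right corner where the patch sits:

```python
def occlusion_map(image, score_fn, size=2, fill=0.5):
    """Occlusion sensitivity: score drop when each size x size window is greyed out."""
    h, w = len(image), len(image[0])
    base = score_fn(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, size):
        for left in range(0, w, size):
            rows = range(top, min(top + size, h))
            cols = range(left, min(left + size, w))
            occluded = [row[:] for row in image]  # copy so the input stays intact
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base - score_fn(occluded)  # big drop = region the model relies on
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


def toaster_score(img):
    # Hypothetical score that, like a fooled model, depends only on the patch corner.
    return sum(img[r][c] for r in (2, 3) for c in (2, 3))


image = [[0.8] * 4 for _ in range(4)]  # mostly "mug"
for r in (2, 3):
    for c in (2, 3):
        image[r][c] = 1.0  # the adversarial patch

for row in occlusion_map(image, toaster_score):
    print(row)
# [0.0, 0.0, 0.0, 0.0]
# [0.0, 0.0, 0.0, 0.0]
# [0.0, 0.0, 2.0, 2.0]
# [0.0, 0.0, 2.0, 2.0]
```

A real debug view would run this over camera frames with the actual network’s class score and render `heat` as a colour overlay; gradient-based saliency maps are a cheaper alternative, but they need access to the model’s internals.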

Why I’m Ditching the “Perfect” Kits

I have a shelf full of polished, friendly robots that work perfectly. They are gathering dust. The kit that’s currently wired up on my desk looks like a bomb went off in a RadioShack. And it crashes if I look at it wrong. But it’s teaching me more about the reality of Artificial Intelligence than any of the polished toys ever did.

If we want the next generation of engineers to build safe autonomous systems—cars that don’t speed up when they see a sticker on a stop sign, or medical AI that doesn’t misdiagnose because of image noise—we need to stop protecting them from the glitches. We need to hand them the stickers.

So, if you’re looking for a gadget to get your kid interested in tech this year, skip the one that promises to “teach them AI.” Look for the one that dares them to break it. The future of tech isn’t about building perfect systems. It’s about building systems that survive an imperfect world.

And if you manage to convince your robot that the family dog is a sports car? Send me the logs. I’m still trying to figure that one out.