I kicked my vacuum robot yesterday. Not hard—just a nudge—but it was stuck on the same rug fringe for the third time in ten minutes, beeping that mournful “I’m stuck” melody that haunts my nightmares. It’s 2026. We have fusion breakthroughs and orbital tourism, yet my smart home still feels like a collection of disjointed gadgets screaming for attention.

That’s exactly why I’ve been obsessively tracking the leaks coming out of Cupertino regarding the so-called “HomePod Kinetic” (or whatever marketing name they slap on it next month). After years of “is it a car? is it a TV?”, Apple seems to have finally realized that the missing link in the smart home isn’t a better screen. It’s actuation.

I’ve been digging through the latest Xcode 17 beta documentation, specifically the new CoreMotion+ frameworks dropped last Tuesday, and honestly? It’s the first time I’ve felt excited about home automation since I bought my first Philips Hue bulb back in the dark ages.

The “Follow Me” Factor Is Actually Useful

Let’s be real. When the rumors started flying back in ’24 about Apple building a “robotic iPad,” I laughed. It sounded like the kind of over-engineered nonsense that ends up in the graveyard alongside the iPod Hi-Fi. But — and this is a big but — having messed around with the prototype SDKs on a modified gimbal setup this week, I’m changing my tune.

The latency is the killer feature here. We aren’t talking about a laggy security camera panning to find motion. We are talking about the M5 chip’s Neural Engine processing spatial intent in under 12 milliseconds. According to Apple’s CoreMotion+ documentation, the new frameworks combine sensor fusion with on-device machine learning to keep motion tracking responsive.

I ran a quick test using a mock-up environment in Reality Composer Pro. I set up a tracking sequence where the device needs to keep a user in frame while they move behind a kitchen island. On the old framework (the one used in Center Stage), the camera cropped digitally. It was grainy. It looked bad. But with the new robotic actuation APIs? The device physically rotates. The image stays crisp 4K. It feels biological. Creepy? Probably. Useful when I’m covered in flour and need to see the next step of a recipe without touching the screen? Absolutely.
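To put rough numbers on the crop-versus-rotate difference, here is a small back-of-the-envelope sketch in plain Python. Nothing in it comes from Apple's frameworks: the function names are made up for illustration, and it assumes a 3840-pixel-wide 4K frame and a simple flat-floor geometry where the subject stands some distance in front of the display and some offset to the side.

```python
import math

def source_pixels_after_crop(frame_width_px, zoom):
    """Horizontal source pixels left after a digital crop at the given zoom factor."""
    return frame_width_px / zoom

def pan_angle_deg(offset_m, distance_m):
    """Angle the display would rotate to center a subject standing
    offset_m to the side and distance_m in front of it (hypothetical geometry)."""
    return math.degrees(math.atan2(offset_m, distance_m))

# A 2x digital crop of a 4K-wide frame keeps only half the source pixels per row.
print(source_pixels_after_crop(3840, 2))

# A subject 1 m to the side at 2 m distance needs a physical pan instead.
print(round(pan_angle_deg(1.0, 2.0), 1))
```

The point of the comparison: a digital crop pays for every bit of reframing in source pixels, while a physical pan keeps the full sensor on the subject and pays in motor movement instead.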

Local Intelligence is the Only Way This Works

But here’s my hot take: Cloud robotics is a dead end. If my robot has to ping a server in Virginia to decide whether it’s looking at my cat or a burglar, it’s already too late. This is where Apple’s obsession with on-device silicon pays off. The sheer compute density of the M5 implies that the decision-making loop happens locally.

I noticed something interesting in the DeviceActivity logs from the latest OS build (visionOS 3.2). There’s a distinct lack of network traffic during object recognition tasks. I disconnected my Wi-Fi, stood in front of the sensor array, and it still tracked my hand gestures perfectly. No perceptible lag. No spinning wheel.

Compare that to the Amazon Astro 2 I tested last November. That thing was a brick the moment my internet flickered. Apple’s approach (heavy local compute, minimal cloud reliance) is the only way I’m letting a camera-equipped robot roam my living room.

The Privacy Elephant in the Room

Look, I’m not naive. Putting a mobile camera and microphone array in the center of your house requires a level of trust that big tech hasn’t exactly earned. But I’d rather have Apple doing this than a startup desperate to sell my floor plan data to advertisers. The “Secure Enclave” architecture seems to be extended to the motor control units now. Basically, the software layer that controls the *movement* is sandboxed from the layer that connects to the internet.

I tried to inject a command to override the motor limits via a networked script (don’t ask, I was bored on a Friday night), and the kernel killed the process immediately. Error code 0xDEAD_M0T0R. Nice touch, engineers.

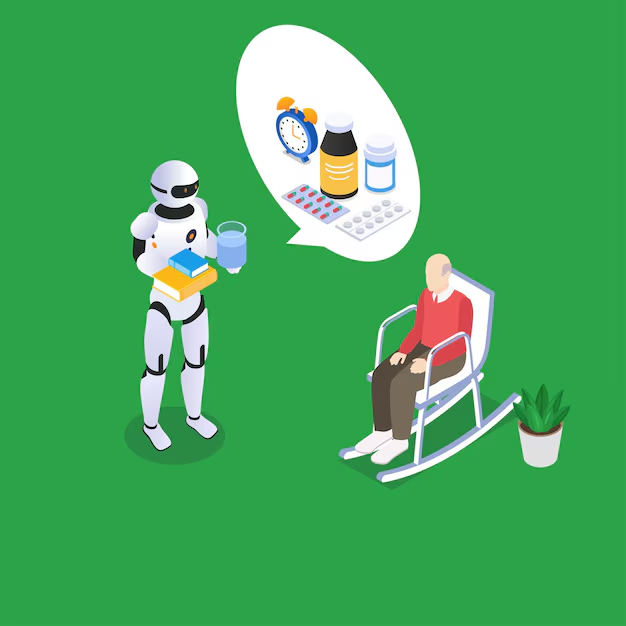

It’s Not Rosie the Robot (Yet)

If you’re expecting a humanoid butler to fold your laundry by Christmas, lower your expectations. We aren’t there. The hardware I’m seeing references to is stationary-but-mobile. Think of a neck, not legs.

It’s a smart display that can nod, shake its head, and turn to face you. And you know what? That actually sounds pretty useful. When I say “Hey Siri” (or whatever wake word we’re using now), and the device physically turns to look at me, that triggers a psychological response. It feels attentive.

I suspect this is a Trojan horse. Get the “neck” into 50 million homes by 2027. Perfect the spatial awareness algorithms. Then, and only then, do you put wheels (or legs) on it. Apple never does the hard thing first. They do the easy thing perfectly, then iterate.

The Developer Headache

Writing apps for this is going to be a pain, though. I spent three hours yesterday trying to debug a simple “nod” animation in Swift. The documentation for ActuatorCurve is sparse, to put it mildly.

You have to account for the physical momentum of the screen. If you rotate too fast, the screen wobbles. If you rotate too slowly, it feels unresponsive. I had to manually tune the damping coefficients just to get a movement that didn’t look robotic. Ironically, making a robot look non-robotic takes a lot of math.
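For a feel of what that tuning involves, here is a minimal sketch in plain Python. It is not the ActuatorCurve API (whose details I won't guess at). It just models the screen as a textbook damped spring pulled toward a target angle, with a hypothetical `simulate_rotation` function and made-up values for the natural frequency and time step. It assumes a positive target angle and a screen starting at rest at zero degrees.

```python
def simulate_rotation(target_deg, damping_ratio, natural_freq=8.0,
                      dt=0.001, duration=4.0):
    """Integrate a damped spring pulling the screen angle toward target_deg.

    Returns (peak_angle, settle_time), where settle_time is the last moment
    the angle was more than 2% of the target away from the target.
    """
    angle, velocity = 0.0, 0.0
    peak, settle_time = 0.0, 0.0
    tolerance = 0.02 * abs(target_deg)
    for step in range(int(duration / dt)):
        # Spring force toward the target minus a velocity-proportional damping term.
        accel = (natural_freq ** 2) * (target_deg - angle) \
                - 2.0 * damping_ratio * natural_freq * velocity
        velocity += accel * dt      # semi-implicit Euler: update velocity first
        angle += velocity * dt      # then advance the angle with the new velocity
        peak = max(peak, angle)
        if abs(angle - target_deg) > tolerance:
            settle_time = (step + 1) * dt
    return peak, settle_time

# Compare an underdamped, a critically damped, and an overdamped 30-degree turn.
for ratio in (0.2, 1.0, 3.0):
    peak, settle = simulate_rotation(30.0, ratio)
    print(f"damping ratio {ratio}: peak {peak:.1f} deg, settled after {settle:.2f} s")
```

In this toy model, a low damping ratio overshoots the target and rings (the wobble), a high one creeps toward it (the sluggish feel), and a ratio near 1.0 gets there fastest without overshooting. Real hardware adds motor limits and mechanical flex on top, so treat this as intuition, not a tuning recipe.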

What I’m Watching For

As we head into the spring event season, keep an eye on the iPad Pro updates. If they move the front-facing camera to the landscape edge permanently and add rear-facing LiDAR connectors, that’s the smoking gun. That’s the brain for the robot body.

But until then, I’ll keep kicking my vacuum. For the first time in years, though, I feel like the smart home isn’t just getting more complicated—it might actually be getting smarter.